This article summarizes my time-saving Linux tips, mostly from working in a CentOS environment.

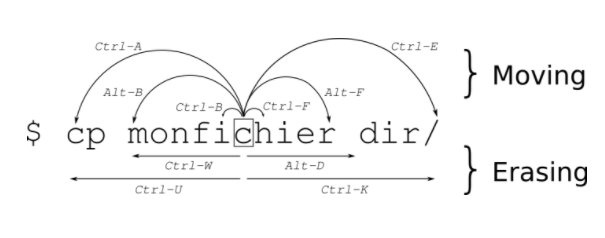

Bash Shortcuts

These shortcuts save a lot of arrow keystrokes. Some of them may require some tweaking to work on macOS.

| Shortcut | Action |
| --- | --- |
| Ctrl-W | delete the word before the cursor |
| Ctrl-A | move cursor to beginning of line |
| Ctrl-E | move cursor to end of line |
| Ctrl-K | delete from cursor to end of line |
| Ctrl-U | delete from cursor to beginning of line |
| Ctrl-L | clear screen |
| cd - | change to previous directory |
| Alt-B | move cursor back by a word |
| Alt-F | move cursor forward by a word |
| Alt-. | insert last argument of previous command |

Job Control

We need to first understand the following job states:

| Job state | Actively running | Displayed in active session |
| --- | --- | --- |
| foreground job | Yes | Yes |
| suspended job | No | No |
| background job | Yes | No |

These states can be managed with the following keys and commands:

| Key / command | Effect |
| --- | --- |
| Ctrl-Z | suspend the foreground job (sends SIGTSTP) |
| Ctrl-C | send SIGINT to the foreground job, which normally terminates it |
| jobs | list jobs with their ids |
| bg | resume a suspended job in the background; the current shell still “owns” the job |
| fg | bring a job to the foreground (by id, e.g. fg %1) |
| disown | remove a job from the current shell’s job table; the job keeps running and can still be found with ps |
| kill | send a signal (SIGTERM by default) to a job by id, e.g. kill %1; once the job exits it no longer shows up in ps |

- Note 1: running a command with an ampersand (&) at the end starts the process in the background, so you can continue typing.

- Note 2: for example, start vim with a file, press Ctrl-Z to suspend it, run jobs to view it, then run fg with the job id (e.g. fg %1) to bring it back.
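Ctrl-Z, bg and fg need an interactive terminal, but the other job commands above also work inside a Bash script. Here is a minimal sketch, assuming Bash and using sleep as a placeholder for a real long-running job:

```shell
#!/usr/bin/env bash
# Script-friendly job control: sticks to &, jobs, kill and disown

sleep 30 &                # trailing & runs the command in the background
first_pid=$!              # $! holds the PID of the most recent background job
jobs -l                   # lists the job with its id and PID

kill %1                   # send SIGTERM (kill's default signal) to job 1
wait "$first_pid"         # reap it; a job killed by SIGTERM reports 128 + 15
first_status=$?

sleep 30 &
second_pid=$!
disown                    # drop the current job from this shell's job table
if kill -0 "$second_pid" 2>/dev/null; then   # signal 0 only checks existence
  still_running=yes       # the process keeps running and is visible to ps
fi
kill "$second_pid"        # clean up the disowned sleep

echo "first job status: $first_status, disowned job running: ${still_running:-no}"
```

The status 143 for the killed job comes from the shell convention of reporting 128 plus the signal number.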

Share Screen

Several people can share one shell session on a Linux server with GNU screen and interact with each other. To do so, everyone needs to log on to the same server as the same Linux user. Then the first person runs:

```shell
screen -S screen_name
```

Then the second (and third, etc.) person runs:

```shell
screen -x screen_name
```

Now everyone can see what the others are doing and type at the same time.

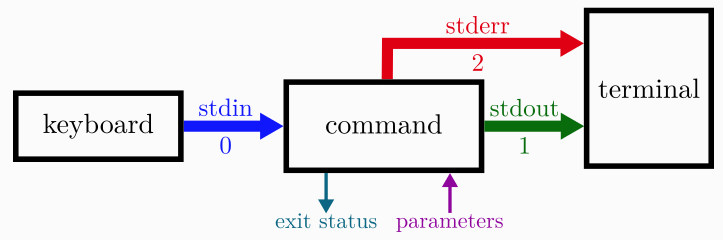

I/O redirection

| Stream | File descriptor | Redirection syntax |
| --- | --- | --- |
| stdin | 0 | read a file: `0<` (or `<` for short); supply a single string (here string): `<<<`; supply multiple lines (here document): `<<` |
| stdout | 1 | overwrite: `1>` (or `>` for short); append: `1>>` (or `>>` for short) |
| stderr | 2 | overwrite: `2>`; append: `2>>` |
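To see the operators from this table side by side, here is a small sketch. It writes to a temporary file created by mktemp, and it assumes /no/such/path does not exist, so that ls prints exactly one error line to stderr:

```shell
#!/usr/bin/env bash
tmp=$(mktemp)

echo "first" > "$tmp"              # > (same as 1>) overwrites the file
echo "second" >> "$tmp"            # >> (same as 1>>) appends to it
ls /no/such/path 2>> "$tmp"        # 2>> appends the error message (stderr)

line_count=$(wc -l < "$tmp")       # < (same as 0<) feeds the file to stdin

# <<< supplies a single string as stdin
upper=$(tr '[:lower:]' '[:upper:]' <<< "here string")

# << supplies multiple lines, up to the END delimiter
block=$(cat <<END
line one
line two
END
)

rm -f "$tmp"
echo "lines in file: $line_count"
echo "$upper"
echo "$block"
```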

For example, here is how you display all lines between two “ANYWORD” delimiters (a “here document”):

```shell
cat <<ANYWORD
paragraph
after
paragraph
ANYWORD
```

In addition, a pipe (|) is used to redirect output between commands. You can also redirect stdout into stderr or the other way round. Below are several examples:

| Command | Effect |
| --- | --- |
| `command1 \| command2` | redirect stdout of command1 to stdin of command2 |
| `command3 > /dev/null` | discard the stdout of command3 |
| `command4 2> file1` | redirect stderr of command4 to file1 |
| `command5 \| tee file2` | redirect stdout of command5 to stdin of the tee command, which displays it and writes it to file2 at the same time |
| `command6 2>&1` | redirect command6’s stderr into stdout |
| `command7 1>&2` | redirect command7’s stdout into stderr |

Note: in the last two examples, the ampersand indicates that the following number is a file descriptor rather than a file name.
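Where 2>&1 appears in a command matters, because redirections are processed from left to right. The sketch below uses a hypothetical function, both, that writes one line to each stream, and counts what lands in a temporary file:

```shell
#!/usr/bin/env bash
# Hypothetical function that writes one line to each stream
both() {
  echo "to stdout"
  echo "to stderr" >&2
}

tmp=$(mktemp)

# stdout is sent to the file first, then stderr is pointed at the same place
both > "$tmp" 2>&1
count_a=$(wc -l < "$tmp")       # both lines are in the file

# Bash shorthand for the same redirection
both &> "$tmp"
count_b=$(wc -l < "$tmp")       # both lines again

# Reversed order: stderr copies the old stdout (the command substitution's
# pipe) before stdout is moved to the file
captured=$(both 2>&1 > "$tmp")
count_c=$(wc -l < "$tmp")       # only the stdout line reached the file

rm -f "$tmp"
echo "$count_a $count_b $count_c [$captured]"
```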

For example, in the first command below, stdout is redirected to file first, and then stderr is redirected to wherever stdout now points, so both streams end up in file. The second and third commands are just two shorthand forms of the first:

```shell
command > file 2>&1
command >& file
command &> file
```

Here is an advanced example that uses process substitution to compare two files on two different servers:

```shell
diff <(ssh user@host1 'cat /tmp/file1.txt') <(ssh user@host2 'cat /tmp/file2.txt')
```
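Each <(...) is process substitution: Bash runs the command and hands diff a file name (such as /dev/fd/63) from which to read its output. Without two servers at hand, the same idea can be tried locally; in this sketch the printf commands are placeholders for the ssh calls:

```shell
#!/usr/bin/env bash
# printf commands stand in for the remote 'ssh user@host cat ...' calls
out=$(diff <(printf 'alpha\nbeta\n') <(printf 'alpha\ngamma\n'))
status=$?      # diff exits with 0 when the inputs match and 1 when they differ

echo "$out"
echo "diff exit status: $status"

# Each <(...) is replaced by a file name such as /dev/fd/63
echo <(true)
```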

With the knowledge of I/O redirection, let’s compare the efficiency of the following two commands in dealing with a huge file:

- cat huge.file | mycmd

- mycmd < huge.file

In the first command, cat reads huge.file and writes the data into a pipe buffer, and mycmd then reads it again from its stdin. The extra process and the extra copy through the pipe are what make this form inefficient.

In the second command, huge.file is provided directly as the stdin of mycmd. Only one read is involved, compared with two reads and one write in the first command. Counting I/O operations alone, the second command does about a third of the work; the actual speedup depends on how much of mycmd’s time is spent on I/O.
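Timing depends on the machine and on mycmd itself, so instead of a benchmark, here is a sketch of the structural difference: what the command actually receives as stdin in each form. stdin_kind is a hypothetical stand-in for mycmd, the sample file is a tiny placeholder for huge.file, and the check relies on Bash’s file tests on /dev/stdin:

```shell
#!/usr/bin/env bash
# Hypothetical stand-in for mycmd: reports what kind of stdin it was given
stdin_kind() {
  if [ -p /dev/stdin ]; then echo pipe
  elif [ -f /dev/stdin ]; then echo "regular file"
  else echo other
  fi
}

sample=$(mktemp)          # small placeholder for huge.file
printf 'data\n' > "$sample"

via_cat=$(cat "$sample" | stdin_kind)    # extra cat process, data copied through a pipe
via_redirect=$(stdin_kind < "$sample")   # stdin is the file itself

rm -f "$sample"
echo "cat | mycmd  -> $via_cat"
echo "mycmd < file -> $via_redirect"
```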

Here is a diagram to illustrate command and I/O redirection:

Check out the page for more scenarios.

Operators

First, let’s introduce some special shell variables and operators:

| Variable / operator | Meaning |
| --- | --- |
| IFS | a special variable holding the internal field separator characters (space, tab and newline by default) |
| `;` | separates commands that run one after another. Use it when you’d like to combine multiple lines of commands into a single line |
| `&&` | logical AND operator. When you run command1 && command2, command2 ONLY runs if command1 succeeds (exit status = 0) |
| `\|\|` | logical OR operator. When you run command1 \|\| command2, command2 ONLY runs if command1 fails (exit status != 0) |
| `{}` | command grouping in the current shell, e.g. { command1; command2; } (the spaces and the final semicolon are required) |
| `()` | groups commands and runs them in a subshell, e.g. (cd /tmp && ls); also used for precedence |
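The behavior described in this table can be checked with a short Bash sketch; the variable names are illustrative only:

```shell
#!/usr/bin/env bash
# && runs the right-hand side only when the left-hand side succeeds
true && and_after_true=yes
false && and_after_false=yes

# || runs the right-hand side only when the left-hand side fails
false || or_after_false=yes

# { ...; } groups commands in the current shell, so assignments persist
{ grouped=1; grouped=$((grouped + 1)); }

# ( ... ) runs commands in a subshell, so assignments are discarded afterwards
in_parent=1
( in_parent=99 )

# A per-command IFS tells read how to split a line into fields
IFS=: read -r user _ <<< "root:x:0:0"

echo "$and_after_true ${and_after_false:-unset} $or_after_false $grouped $in_parent $user"
```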

Note that && and || are used fairly often. && is used when the second command should only run if the first one succeeded. || is used when you want to run a fallback or report an error if the first command fails. For example:

```shell
test -d "/tmp/newdir" || mkdir -p "/tmp/newdir"
test -f "/var/run/app/app.pid" || echo >&2 "ERROR: cannot detect pid"
```

Attributes

I’ve been using chown and chmod to manage file owners and the permissions granted to owners, groups and other users. I recently discovered another command, chattr (change attribute), which controls file attributes regardless of users or groups. In other words, if you make a file immutable, even its owner (and root) cannot modify it until the attribute is removed. Its manual page has the full list of attributes, but common ones are:

- a -> Append only

- i -> Immutable

- c -> Compressed: the kernel transparently compresses the file on disk (on filesystems that support it)

For example, the append-only attribute is commonly set on log files so that existing entries cannot be modified or deleted; new data can only be appended. Sometimes we want to keep system files from being changed as well (these commands need root):

```shell
chattr -i /etc/resolv.conf                      # remove the immutable attribute
echo "nameserver 8.8.8.8" > /etc/resolv.conf    # update the file
chattr +i /etc/resolv.conf                      # make it immutable again
```

Any attribute set with chattr can be listed with lsattr. These two commands are available on Linux but not on BSD.

Loop

If you know in advance how many iterations you need, use a for loop. For example, to execute a command for each file in a directory:

```shell
for file in /tmp/*.hl7
do
  echo "Picking File $file" >> output.txt
  hl7snd -f "$file" -d destinationhost:2398 >> output.txt
  sleep 2
done
```
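One caveat with a glob in a for loop: if nothing matches the pattern, Bash by default leaves it as the literal string, and the loop body runs once with it. Here is a sketch of the same loop in a throwaway directory with the nullglob option turned on, where basename stands in for the hl7snd call:

```shell
#!/usr/bin/env bash
# Throwaway directory with two matching files and one that does not match
dir=$(mktemp -d)
touch "$dir/a.hl7" "$dir/b.hl7" "$dir/notes.txt"

shopt -s nullglob            # an unmatched glob now expands to nothing
processed=()
for file in "$dir"/*.hl7
do
  # In the real loop, this is where hl7snd -f "$file" ... would run
  processed+=("$(basename "$file")")
done

empty_runs=0
for file in "$dir"/*.none    # matches nothing, so the body never runs
do
  empty_runs=$((empty_runs + 1))
done
shopt -u nullglob            # restore the default behavior

rm -rf "$dir"
echo "${processed[@]} (empty loop ran $empty_runs times)"
```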

Or you can specify a range and a step for a for loop:

```shell
for i in {1..11..2} {13..23..2}
do
  echo "hostmachine$i"
  scp local.file "hostmachine$i":/tmp/
done
```

Note that the step syntax used here is only available in Bash 4.0 and later (check the version through the variable $BASH_VERSION, reference).
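To check which values the range-and-step syntax produces before running scp against real hosts, the loop can collect the host names instead. The hostmachine names are the placeholders from the example above:

```shell
#!/usr/bin/env bash
# Brace expansion with a step ({start..end..step}) needs Bash 4.0 or later
hosts=()
for i in {1..11..2} {13..23..2}
do
  hosts+=("hostmachine$i")   # placeholder host names from the example
done
echo "${hosts[@]}"
```

Both ranges produce six odd numbers each, so the list runs from hostmachine1 to hostmachine23.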

If it’s hard to tell in advance how many iterations there will be, use a while loop. Here are some examples.

Example 1. You want to execute the same command multiple times, each time with one line of a file as the parameter:

```shell
while IFS= read -r var
do
  echo "$(date +%Y%m%d:%H%M%S) displaying line $var" >> resend.log
  sleep 2
done < list.txt
```
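The IFS= and -r in Example 1 are there for a reason: they keep each line exactly as written. This sketch feeds a small temporary file (standing in for list.txt) through the same loop and compares it with a plain read:

```shell
#!/usr/bin/env bash
# Sample input standing in for list.txt
list=$(mktemp)
printf '%s\n' '  indented line' 'back\slash' 'last line' > "$list"

lines=()
# IFS= keeps leading/trailing whitespace; -r keeps backslashes literal
while IFS= read -r var
do
  lines+=("$var")
done < "$list"
rm -f "$list"

# For comparison: plain read trims the spaces and treats \ as an escape
plain=$(printf '%s\n' '  back\slash' | { read var; printf '%s' "$var"; })

printf '[%s]\n' "${lines[@]}"
echo "plain read: [$plain]"
```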

Example 2. Going one step further, suppose the input file is in CSV format like this:

```text
acc1,pid1
acc2,pid2
acc3,pid3
```

and you want to repeat a command multiple times, each execution with column 1 as parameter 1, column 2 as parameter 2, and so on. Then you can run something like this:

```shell
IFS=,$(echo -en "\r\n\b"); while read -r f1 f2
do
  echo "processing $f1, $f2"
  curl -i -X PUT -H "Content-Type:application/json" -d '{"userId":"dhunch"}' --url "http://localhost:9876/monitoring/document/$f1/$f2/publish"
done < input.txt >> out.txt
```

In this example, the IFS assignment is a separate statement before the while loop, so it stays in effect after the loop finishes, too. This is why \r, \n and \b are specified in addition to the comma. If you don’t want that, put the IFS assignment right before read (while IFS=, read -r f1 f2) so that it applies only to read, and specify just the comma as the field separator.
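Here is a sketch of that second variant. A temporary file stands in for input.txt, and instead of calling curl, it only builds the URLs from the example so the result can be inspected:

```shell
#!/usr/bin/env bash
# Sample CSV standing in for input.txt
csv=$(mktemp)
printf '%s\n' 'acc1,pid1' 'acc2,pid2' 'acc3,pid3' > "$csv"

urls=()
# IFS=, applies only to this read, so the shell's own IFS is left untouched
while IFS=, read -r f1 f2
do
  # Build the URL from the example instead of calling curl
  urls+=("http://localhost:9876/monitoring/document/$f1/$f2/publish")
done < "$csv"
rm -f "$csv"

printf '%s\n' "${urls[@]}"
```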

Example 3. If you just want to repeat the same command every 10 seconds, apart from the watch command, you can use a while loop like this:

```shell
while true; do df -Ph | grep "sda1"; sleep 10; done
```

In fact, every while loop in these examples can be written on a single line, with a semicolon wherever you would otherwise have pressed Enter (except right after do).

find, with xargs and -exec {}

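The find command offers flexible ways to search for files that match certain conditions.

Example 1. Find files in the current directory (without descending into subdirectories) that were modified within the last 5 * 24 hours:

```shell
find . -maxdepth 1 -type f -mtime -5
```

Example 2. Find tar files modified more than 6 * 24 hours ago (find ignores fractional days, so in practice this matches files at least 7 days old):

```shell
find . -type f -name "*.tar" -mtime +6
```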

Notes:

- the switch -iname is similar to -name but case-insensitive

- the switch -mtime goes by modification time; -atime goes by access time

- the switch -mmin works like -mtime but measures in minutes

- the switch -daystart measures times from the beginning of the current day instead of from the current time
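Both examples can be tried without waiting for files to age by back-dating them with touch. This sketch assumes GNU touch and find (as on CentOS), where touch -d accepts relative dates such as "3 days ago":

```shell
#!/usr/bin/env bash
# Assumes GNU touch and find; touch -d accepts "N days ago"
dir=$(mktemp -d)
touch "$dir/new.tar"                              # modified just now
touch -d "3 days ago" "$dir/recent.tar"
touch -d "10 days ago" "$dir/old.tar" "$dir/OLD.TAR"

cd "$dir" || exit 1
# Example 1: modified within the last 5 * 24 hours, top level only
recent=$(find . -maxdepth 1 -type f -mtime -5 | LC_ALL=C sort | tr '\n' ' ')
# Example 2 with -iname: *.tar in any letter case, older than 6 full days
old=$(find . -type f -iname "*.tar" -mtime +6 | LC_ALL=C sort | tr '\n' ' ')
cd - > /dev/null || exit 1
rm -rf "$dir"

echo "recent: $recent"
echo "old:    $old"
```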

Here is more information about the find command, and more examples.

To execute a command for each result from find, we have two options: xargs and -exec {}. Both build commands that take the results as arguments, instead of passing them through I/O redirection. xargs is considered more efficient, because it passes many results to each invocation instead of starting the command once per file, and it also works with commands other than find. -exec {} only works with the find command.

Here’s a basic example of -exec {}, which runs dcm2txt once per matching file (the \; marks the end of the command):

```shell
find . -iname '*.dcm' -exec dcm2txt {} \;
```
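With \;, find starts the command once per file. POSIX find also accepts a + terminator, which appends as many file names as fit into one invocation, much like xargs. This sketch counts invocations, using echo as a stand-in for dcm2txt because each echo prints exactly one line:

```shell
#!/usr/bin/env bash
dir=$(mktemp -d)
touch "$dir/a.dcm" "$dir/B.DCM" "$dir/notes.txt"

# \; runs the command once per matching file
per_file=$(find "$dir" -iname '*.dcm' -exec echo run {} \; | wc -l)

# {} + passes all matches to a single invocation when they fit
batched=$(find "$dir" -iname '*.dcm' -exec echo run {} + | wc -l)

rm -rf "$dir"
echo "one per file: $per_file, batched: $batched"
```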

On the other hand, xargs works with any command whose output is piped into it. It takes the input from the previous command, splits it on whitespace (spaces, tabs and newlines) into a list, then builds commands from the items on the list. To help understand how xargs passes parameters, let’s examine two examples. The first example is a directory with three files in it: x.a, x.b, x.c

```shell
$ ls
x.a x.b x.c
```

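If we want to prefix each file name with prefix_, we can do the following. Here -I aa makes xargs run the command once per input line, replacing every aa with that line. Because of echo, this only prints the mv commands; remove echo and the quotes to actually rename the files:

```shell
ls | xargs -I aa echo "mv aa prefix_aa"
```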
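Here is a sketch of the dry run and the real run side by side, in a throwaway directory holding the three sample files. Parsing ls output like this is only safe for simple names without spaces or newlines, as is the case here:

```shell
#!/usr/bin/env bash
# Throwaway directory holding the three sample files from the text
dir=$(mktemp -d)
cd "$dir" || exit 1
touch x.a x.b x.c

# Dry run: -I aa runs echo once per input line, replacing every "aa"
plan=$(ls | xargs -I aa echo "mv aa prefix_aa")

# Real run: without echo, xargs invokes mv once per file
ls | xargs -I aa mv aa prefix_aa
result=$(ls | tr '\n' ' ')

cd - > /dev/null || exit 1
rm -rf "$dir"
printf '%s\n' "$plan"
echo "$result"
```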

In the second example, suppose the find command produces the following result:

```text
firstdir
seconddir
```

Compare the following two commands:

```shell
find . -type d | xargs ls -l
find . -type d | xargs -n 1 ls -l
```

In the first command, xargs invokes “ls -l firstdir seconddir”, whereas in the second command, xargs invokes “ls -l firstdir” and then “ls -l seconddir”. The first form requires that the utility accept multiple parameters (ls does, and so do commands such as wc and grep). The second form is particularly helpful when the utility only takes one parameter, because the switch -n sets the maximum number of arguments taken from standard input for each invocation of the utility.
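The grouping can be made visible by putting echo in front of ls -l, so that xargs prints each command line it would run instead of running it. printf stands in for the find output shown above:

```shell
#!/usr/bin/env bash
# echo prints one line per invocation, which shows how xargs groups arguments
batched=$(printf '%s\n' firstdir seconddir | xargs echo ls -l)
one_each=$(printf '%s\n' firstdir seconddir | xargs -n 1 echo ls -l)

echo "$batched"
echo "$one_each"
```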

Last but not least, operators are quite useful in Bash scripting. Here is a good reference.