As applications move to the cloud, application workloads hosted on virtual machines need to interact with cloud resources. For this, we need an IAM solution with two mechanisms:

- a (non-human) identity in the cloud service provider (CSP) to represent the application;

- a way to grant permissions to this identity so that it can manage resources.

CSPs such as Azure and AWS have their own implementations of these two mechanisms. In Azure, we have Entra workload identities (including service principals and managed identities) for the first mechanism, and Azure roles for the second. On AWS, an IAM role typically serves as the workload's identity (for example, through an EC2 instance profile), and the IAM policies attached to that role grant its permissions. What about workloads on a managed Kubernetes service? There, we need two more mechanisms:

- a native Kubernetes identity to represent the workload (Pod);

- a way to map the Kubernetes identity to an identity in the CSP.

The Kubernetes ServiceAccount is designed for the first item. The second mechanism is up to the CSP. In this post, let’s examine how Azure handles it; specifically, how Azure manages workload identity for Azure Kubernetes Service (AKS).

Node Identity and Cluster Identity in AKS

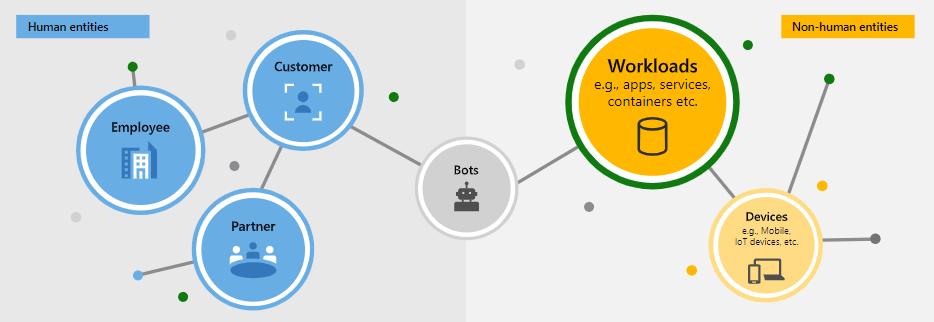

Let’s first define exactly what a workload identity is. In Azure, it is one type of non-human identity. In our context, workload identity in the broader sense covers:

- the identity that represents the control plane (or the whole cluster);

- the identity that represents the nodes (or the kubelet process on each node);

- the identity that represents the application in a Pod (workload identity in the narrow sense).

It is important not to confuse these identities. In this section, I’ll focus on the first two, which count as workload identities only in the broad sense. In the remaining sections, I’ll discuss the third and use workload identity in its narrow sense.

When we create an AKS cluster, we create both a cluster control plane and a node pool. Both the control plane and the nodes need to work with Azure resources by calling Azure's cloud APIs. For example, if we use Terraform’s AzureRM provider to create an azurerm_kubernetes_cluster resource, we specify the cluster’s identity with either the service_principal block or the identity block. We specify the nodes’ identity with the kubelet_identity block, because kubelet is the process that runs on each node.

Even though a cluster builder might be tempted to assign the same identity to both the control plane and kubelet, the security best practice is to keep them separate. It is also the cluster builder’s responsibility to distinguish the activities performed by the control plane from those performed by the kubelet process on each node, and to assign each identity an Azure role with the least privilege it needs.
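To make the separation concrete, here is a minimal Python sketch of a pre-deployment check, assuming we have already collected each identity's ID and role names from our Terraform configuration. The class AksIdentityConfig, the function identity_findings, and the rule that Owner and Contributor count as "too broad" are hypothetical heuristics for illustration; they are not part of the AzureRM provider or any Azure API.

```python
from dataclasses import dataclass

# Hypothetical heuristic: built-in roles treated as too broad for either identity.
BROAD_ROLES = frozenset({"Owner", "Contributor"})


@dataclass(frozen=True)
class AksIdentityConfig:
    """Hypothetical summary of an AKS cluster's identity settings."""
    cluster_identity_id: str                 # identity used by the control plane
    kubelet_identity_id: str                 # identity used by kubelet on each node
    cluster_roles: frozenset = frozenset()   # Azure role names granted to the control plane identity
    kubelet_roles: frozenset = frozenset()   # Azure role names granted to the kubelet identity


def identity_findings(cfg: AksIdentityConfig) -> list:
    """Return human-readable findings; an empty list means no issue was detected."""
    findings = []
    if cfg.cluster_identity_id == cfg.kubelet_identity_id:
        findings.append("control plane and kubelet share the same identity")
    for label, roles in (("control plane", cfg.cluster_roles),
                         ("kubelet", cfg.kubelet_roles)):
        broad = sorted(roles & BROAD_ROLES)
        if broad:
            findings.append(f"{label} identity has broad roles: {', '.join(broad)}")
    return findings


if __name__ == "__main__":
    separate = AksIdentityConfig(
        cluster_identity_id="id-aks-cluster",
        kubelet_identity_id="id-aks-kubelet",
        cluster_roles=frozenset({"Network Contributor"}),
        kubelet_roles=frozenset({"AcrPull"}),
    )
    print(identity_findings(separate))  # prints []
```

A real check would also look at the scope of each role assignment, since a narrow role at subscription scope can still grant more than the cluster needs.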

These two types of identities (control plane and kubelet) are relatively straightforward: to use them, we don’t have to work with any Kubernetes objects. In the next section, we’ll move on to the identities that represent individual Pods in Azure. Today we refer to them as workload identities, but the first technology available for this purpose was pod managed identity.

Pod Managed Identity in AKS

When I first worked on Azure Kubernetes Service, pod managed identity was in preview and was the recommended approach. However, Microsoft renamed it (to Microsoft Entra pod-managed identities) and then deprecated it after a couple of years in preview. As of October 2022, the recommended mechanism is Microsoft Entra Workload ID. For simplicity, we refer to the deprecated mechanism as “Pod Identity”. We discuss Pod Identity only to understand why it is no longer recommended and what it lacks. For new workload deployments, we should always use workload identity.

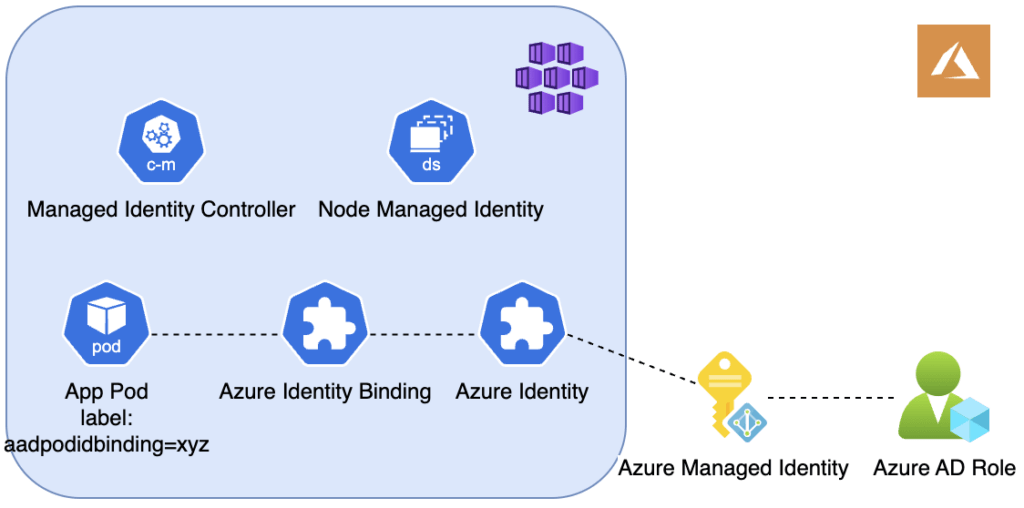

For Pod Identity to work, the EnablePodIdentityPreview feature flag must be turned on. Pod Identity relies on a Kubernetes controller called MIC (Managed Identity Controller) and a DaemonSet called NMI (Node Managed Identity). You start with an Azure managed identity that has the appropriate roles. Once Pod Identity is installed, two CRDs are available: AzureIdentity and AzureIdentityBinding. To grant Azure permissions to a Pod, you create a CR for each CRD. The AzureIdentity CR points to your Azure managed identity, and the AzureIdentityBinding CR binds that AzureIdentity to a selector. When declaring a Pod, you link it to the AzureIdentityBinding by setting the aadpodidbinding label to the binding's selector value.
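The relationship between the two CRs and the Pod label can be sketched in Python by building the manifests as plain dictionaries. This is a minimal sketch, assuming the field names used in the (now deprecated) aad-pod-identity project's examples: spec.type 0 for a user-assigned identity, resourceID, clientID, azureIdentity, and selector. The helper name pod_identity_manifests and all values are hypothetical, so check the project's documentation before relying on the exact fields.

```python
import json


def pod_identity_manifests(name, namespace, resource_id, client_id, selector):
    """Build the AzureIdentity and AzureIdentityBinding CRs plus the Pod label
    used by the deprecated aad-pod-identity project (field names assumed from
    its examples)."""
    azure_identity = {
        "apiVersion": "aadpodidentity.k8s.io/v1",
        "kind": "AzureIdentity",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "type": 0,                  # 0 = user-assigned managed identity
            "resourceID": resource_id,  # Azure resource ID of the managed identity
            "clientID": client_id,      # client ID of the managed identity
        },
    }
    azure_identity_binding = {
        "apiVersion": "aadpodidentity.k8s.io/v1",
        "kind": "AzureIdentityBinding",
        "metadata": {"name": f"{name}-binding", "namespace": namespace},
        "spec": {
            "azureIdentity": name,  # refers to the AzureIdentity above by name
            "selector": selector,   # Pods whose aadpodidbinding label equals this value match
        },
    }
    pod_labels = {"aadpodidbinding": selector}
    return azure_identity, azure_identity_binding, pod_labels


if __name__ == "__main__":
    identity, binding, labels = pod_identity_manifests(
        name="app-identity",
        namespace="demo",
        resource_id="<resource-id-of-managed-identity>",
        client_id="<client-id-of-managed-identity>",
        selector="app-selector",
    )
    print(json.dumps([identity, binding, {"metadata": {"labels": labels}}], indent=2))
```

Note that nothing here involves a Kubernetes ServiceAccount: the link between Pod and Azure identity is a free-form label, which is one of the gaps discussed next.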

There are two problems with pod managed identity. First, it has a known vulnerability when used with kubenet as the network plugin, which requires an additional mitigation step. Second, it does not make use of Kubernetes ServiceAccounts. In the next section, let’s discuss why it is favourable to use a Kubernetes ServiceAccount.

Kubernetes Service Account

In the Kubernetes RBAC model, a ServiceAccount can be bound to Roles (through RoleBindings) to gain access to Kubernetes resources. The most common use case is allowing the application running in a Pod to access other Kubernetes resources. When it comes to letting an application in a Pod access cloud resources in the CSP, it makes sense to use a ServiceAccount as well, for a consistent pattern.
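As a sketch of that RBAC binding, the following Python builds a Role and a RoleBinding as plain dictionaries that could be serialized and applied with kubectl. The helper names, the namespace, and the ConfigMap read permission are hypothetical; the apiVersion, kind, rules, subjects, and roleRef fields follow the Kubernetes rbac.authorization.k8s.io/v1 API. It also shows the username (system:serviceaccount:<namespace>:<name>) under which Kubernetes authenticates a ServiceAccount.

```python
import json


def service_account_username(namespace: str, name: str) -> str:
    """Username under which Kubernetes authenticates a ServiceAccount."""
    return f"system:serviceaccount:{namespace}:{name}"


def config_reader_role(namespace: str, role_name: str) -> dict:
    """Hypothetical Role that only allows reading ConfigMaps in one namespace."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"name": role_name, "namespace": namespace},
        "rules": [{
            "apiGroups": [""],  # "" is the core API group
            "resources": ["configmaps"],
            "verbs": ["get", "list"],
        }],
    }


def role_binding(sa_name: str, namespace: str, role_name: str) -> dict:
    """Bind the Role to the ServiceAccount within the same namespace."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{sa_name}-{role_name}", "namespace": namespace},
        "subjects": [{"kind": "ServiceAccount", "name": sa_name, "namespace": namespace}],
        "roleRef": {"apiGroup": "rbac.authorization.k8s.io", "kind": "Role", "name": role_name},
    }


if __name__ == "__main__":
    print(json.dumps([config_reader_role("demo", "config-reader"),
                      role_binding("app-sa", "demo", "config-reader")], indent=2))
    print(service_account_username("demo", "app-sa"))  # prints system:serviceaccount:demo:app-sa
```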

A ServiceAccount needs a token to authenticate. Each namespace has a default ServiceAccount, and by default its token is mounted into Pods automatically. Each Pod created in a namespace uses the namespace's default ServiceAccount unless another one is specified. However, many security organizations do not consider this default behaviour a best practice. For example, CIS Kubernetes Benchmark v1.8 has these two recommendations:

- Ensure that the default service accounts are not actively used (5.1.5)

- Ensure that Service Account Tokens are only mounted where necessary (5.1.6)

In other words, we should create a non-default ServiceAccount with automountServiceAccountToken set to false. Then, when declaring a Pod, we explicitly specify the ServiceAccount and how the Pod obtains its token. One way to pass a ServiceAccount token to a Pod is through a projected volume (service account token volume projection).
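Here is a minimal Python sketch of that setup, using hypothetical names (app-sa, demo, the image, the mount path, and the audience value): the ServiceAccount disables automounting, and the Pod receives a short-lived token through a projected volume with a serviceAccountToken source. The field names (automountServiceAccountToken, serviceAccountName, projected, and serviceAccountToken with path, audience, and expirationSeconds) come from the Kubernetes core/v1 API; the helper functions are illustrative only.

```python
import json

TOKEN_DIR = "/var/run/secrets/tokens"  # hypothetical mount path


def hardened_service_account(name: str, namespace: str) -> dict:
    """Non-default ServiceAccount whose token is not mounted automatically."""
    return {
        "apiVersion": "v1",
        "kind": "ServiceAccount",
        "metadata": {"name": name, "namespace": namespace},
        "automountServiceAccountToken": False,
    }


def pod_with_projected_token(pod_name, namespace, sa_name, image, audience,
                             expiration_seconds=3600):
    """Pod that names its ServiceAccount explicitly and receives a short-lived,
    audience-bound token through a projected volume."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": pod_name, "namespace": namespace},
        "spec": {
            "serviceAccountName": sa_name,
            "automountServiceAccountToken": False,  # no default token mount
            "containers": [{
                "name": "app",
                "image": image,
                "volumeMounts": [{"name": "sa-token", "mountPath": TOKEN_DIR, "readOnly": True}],
            }],
            "volumes": [{
                "name": "sa-token",
                "projected": {
                    "sources": [{
                        "serviceAccountToken": {
                            "path": "token",                          # file name inside TOKEN_DIR
                            "audience": audience,                     # intended recipient of the token
                            "expirationSeconds": expiration_seconds,  # kubelet refreshes the token before expiry
                        }
                    }]
                },
            }],
        },
    }


if __name__ == "__main__":
    sa = hardened_service_account("app-sa", "demo")
    pod = pod_with_projected_token("app", "demo", "app-sa", "example.invalid/app:1.0", "example-audience")
    print(json.dumps([sa, pod], indent=2))
```

Binding the token to an audience and a lifetime is what makes the projected token suitable for handing to an external party such as a cloud identity provider.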

To allow a Pod to access Azure resources, we combine a Kubernetes ServiceAccount with Microsoft Entra workload identity.

Workload Identity for AKS

Microsoft introduced Entra Workload Identities in late 2022 to address IAM issues around machine identities. They come with modern features such as Conditional Access (for example, location-based access policies and detection of anomalous sign-ins). A workload identity can be one of the following:

- application: an abstract entity that is the global representation of your application, for use across all tenants;

- service principal: the local representation of a global application object in a specific tenant;

- managed identity: a special type of service principal that eliminates the need for developers to manage credentials.

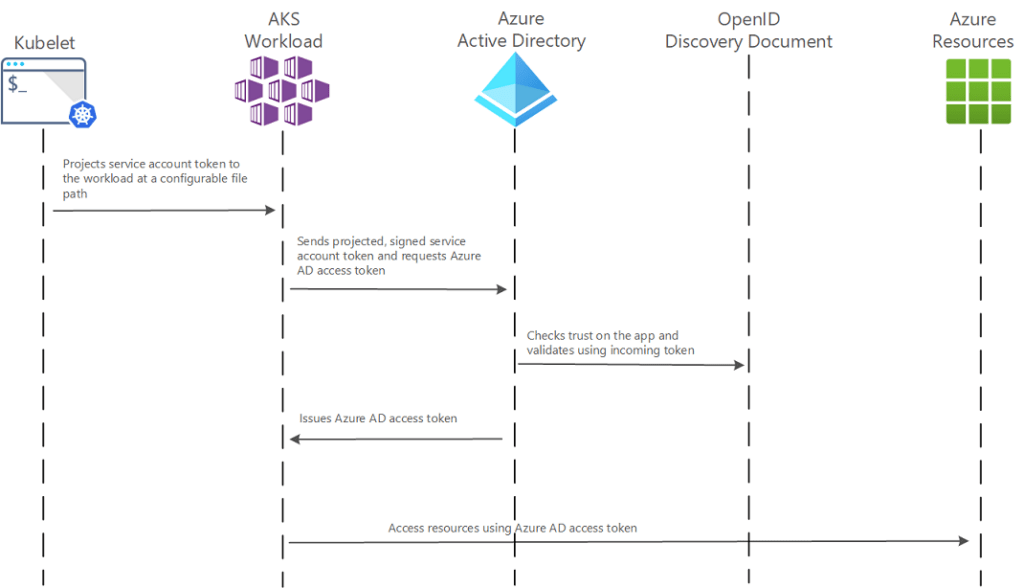

In our AKS use case, we also make use of an Azure managed identity. This part is the same as in the Pod Identity mechanism. However, here we create a federated identity credential on the managed identity. That credential trusts tokens issued by the AKS cluster’s OIDC issuer for a specific ServiceAccount. Within the AKS cluster, the ServiceAccount references the managed identity by its client ID. Microsoft's AKS documentation on workload identity describes the whole process step by step.
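The wiring can be sketched in Python as three pieces of data. This is a minimal sketch, not the official procedure: the helper name, namespace, ServiceAccount name, client ID, credential name, image, and issuer URL are hypothetical placeholders. What it takes from the AKS workload identity documentation is the azure.workload.identity/client-id annotation on the ServiceAccount, the azure.workload.identity/use: "true" label on the Pod, and a federated identity credential whose subject is system:serviceaccount:<namespace>:<name> and whose audience is api://AzureADTokenExchange.

```python
import json

# Audience that Microsoft Entra ID expects for workload identity federation.
TOKEN_EXCHANGE_AUDIENCE = "api://AzureADTokenExchange"


def workload_identity_setup(namespace, sa_name, client_id, issuer_url, credential_name, image):
    """Return (ServiceAccount, federated identity credential, Pod) as plain dicts."""
    service_account = {
        "apiVersion": "v1",
        "kind": "ServiceAccount",
        "metadata": {
            "name": sa_name,
            "namespace": namespace,
            # Links the ServiceAccount to the managed identity by client ID.
            "annotations": {"azure.workload.identity/client-id": client_id},
        },
    }
    # Properties of the federated identity credential created on the managed identity.
    federated_credential = {
        "name": credential_name,
        "issuer": issuer_url,  # the AKS cluster's OIDC issuer URL
        "subject": f"system:serviceaccount:{namespace}:{sa_name}",
        "audiences": [TOKEN_EXCHANGE_AUDIENCE],
    }
    pod = {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {
            "name": "app",
            "namespace": namespace,
            # Opts the Pod in to the workload identity mutating webhook.
            "labels": {"azure.workload.identity/use": "true"},
        },
        "spec": {
            "serviceAccountName": sa_name,
            "containers": [{"name": "app", "image": image}],
        },
    }
    return service_account, federated_credential, pod


if __name__ == "__main__":
    parts = workload_identity_setup(
        namespace="demo",
        sa_name="app-sa",
        client_id="<client-id-of-managed-identity>",
        issuer_url="<oidc-issuer-url-of-cluster>",
        credential_name="app-federated-credential",
        image="example.invalid/app:1.0",
    )
    print(json.dumps(parts, indent=2))
```

In practice, the issuer URL comes from the cluster (for example, `az aks show --query oidcIssuerProfile.issuerUrl`), and the federated credential is created on the managed identity, for example with `az identity federated-credential create`.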

One of the improvements in Entra workload identity for AKS is the use of ServiceAccounts, which removes the need for CRDs. Another improvement is identity federation: the tokens involved are issued by the cluster itself, so their lifecycle is tied to the cluster. This pattern is not only neater but also based on a standard (OpenID Connect). We map a ServiceAccount to a managed identity with a federated identity credential.
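Conceptually, when the Pod exchanges its projected ServiceAccount token, Microsoft Entra ID accepts it only if the token's issuer, subject, and audience match the federated identity credential. The Python sketch below illustrates just that matching step (plus an expiry check) on an unsigned demo token. It deliberately omits signature verification against the issuer's published keys, which a real token exchange performs, and every helper name and value in it is hypothetical.

```python
import base64
import json
import time


def _b64url_encode(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(segment: str) -> bytes:
    # JWT segments drop base64 padding; restore it before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def make_unsigned_token(claims: dict) -> str:
    """Demo only: build an unsigned JWT-shaped string (header.payload.empty-signature)."""
    header = _b64url_encode(json.dumps({"alg": "none", "typ": "JWT"}).encode())
    payload = _b64url_encode(json.dumps(claims).encode())
    return f"{header}.{payload}."


def decode_claims(token: str) -> dict:
    """Decode the payload WITHOUT verifying the signature."""
    _header, payload, _signature = token.split(".")
    return json.loads(_b64url_decode(payload))


def matches_federated_credential(token: str, credential: dict, now=None) -> bool:
    """Check issuer, subject, audience, and expiry against a federated credential."""
    claims = decode_claims(token)
    now = time.time() if now is None else now
    aud = claims.get("aud", [])
    audiences = aud if isinstance(aud, list) else [aud]
    return (
        claims.get("iss") == credential["issuer"]
        and claims.get("sub") == credential["subject"]
        and any(a in credential["audiences"] for a in audiences)
        and claims.get("exp", 0) > now
    )


if __name__ == "__main__":
    credential = {
        "issuer": "https://issuer.example.invalid/",
        "subject": "system:serviceaccount:demo:app-sa",
        "audiences": ["api://AzureADTokenExchange"],
    }
    token = make_unsigned_token({
        "iss": credential["issuer"],
        "sub": credential["subject"],
        "aud": ["api://AzureADTokenExchange"],
        "exp": time.time() + 3600,
    })
    print(matches_federated_credential(token, credential))  # prints True
```

Because the subject pins both namespace and ServiceAccount name, a token from any other ServiceAccount in the same cluster fails the match, which is what keeps the mapping one-to-one.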

Summary

On managed Kubernetes services, we need an integration mechanism to grant Kubernetes workloads access to cloud resources. We discussed what this integration mechanism needs and looked at Azure Kubernetes Service as an example. In the next post, we’ll discuss how AWS addresses this issue in Amazon Elastic Kubernetes Service (EKS).