I touched on NFS in several previous postings, and here is a deeper dive into this particular protocol. NFS is built on top of Remote Procedure Call (RPC), so it is important to understand RPC first. In fact, NFS is one of the most prominent users of RPC and one of the best examples for learning it.

RPC overview

According to Wikipedia, an RPC is when a computer program causes a procedure to execute in a different address space (commonly on another computer on a shared network), which is coded as if it were a normal (local) procedure call, without the programmer explicitly coding the details for the remote interaction. That is, the programmer writes essentially the same code whether the subroutine is local to the executing program, or remote. This is a form of client–server interaction (caller is client, executor is server), typically implemented via a request–response message-passing system. In the object-oriented programming paradigm, RPCs are represented by remote method invocation (RMI), such as Java RMI API.

RPCs are a form of inter-process communication (IPC), in that the processes involved have different address spaces: if they are on the same host machine, they have distinct virtual address spaces even though the physical address space is the same; if they are on different hosts, the physical address space is different as well.

RPC is a request–response protocol, and therefore synchronous. An RPC is initiated by the client, which sends a request message to a known remote server to execute a specified procedure with supplied parameters. The remote server sends a response to the client, and the application continues its process. While the server is processing the call, the client is blocked (it waits until the server has finished processing before resuming execution), unless the client sends an asynchronous request to the server. There are many variations and subtleties in various implementations, resulting in a variety of different (incompatible) RPC protocols.
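
The request/response flow above can be sketched in a few lines. This is a toy illustration only, not ONC RPC: it marshals calls as JSON and hands the bytes to the server through a plain function call instead of a socket. The names `ClientStub`, `server_dispatch`, and `PROCEDURES` are made up for this example.

```python
import json

# Server side: the real procedures, registered by name (hypothetical examples).
PROCEDURES = {
    "add": lambda a, b: a + b,
    "upper": lambda s: s.upper(),
}


def server_dispatch(request_bytes: bytes) -> bytes:
    """Unmarshal a request, run the named procedure, marshal the reply."""
    request = json.loads(request_bytes.decode("utf-8"))
    try:
        result = PROCEDURES[request["proc"]](*request["args"])
        reply = {"xid": request["xid"], "ok": True, "result": result}
    except Exception as exc:  # report any failure back to the caller
        reply = {"xid": request["xid"], "ok": False, "error": str(exc)}
    return json.dumps(reply).encode("utf-8")


class ClientStub:
    """Makes remote procedures look like local methods."""

    def __init__(self, transport):
        self._transport = transport  # callable taking bytes, returning bytes
        self._xid = 0                # transaction id, matches replies to requests

    def __getattr__(self, proc_name):
        def call(*args):
            self._xid += 1
            request = {"xid": self._xid, "proc": proc_name, "args": list(args)}
            # The caller blocks here until the "server" has answered.
            raw_reply = self._transport(json.dumps(request).encode("utf-8"))
            reply = json.loads(raw_reply.decode("utf-8"))
            if reply["xid"] != self._xid:
                raise RuntimeError("reply does not match request")
            if not reply["ok"]:
                raise RuntimeError(reply["error"])
            return reply["result"]
        return call


stub = ClientStub(server_dispatch)
print(stub.add(2, 3))      # looks like a local call
print(stub.upper("nfs"))
```

In a real RPC system the transport would be a network connection and the stubs would usually be generated from an interface definition, but the division of labor is the same.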

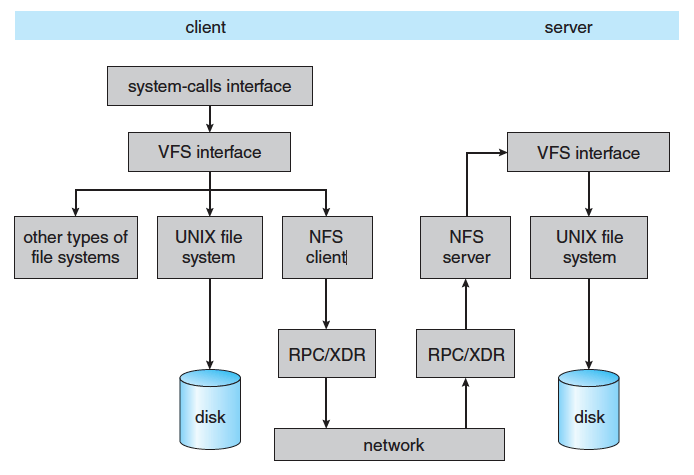

NFS overview

NFS is defined as a set of RPCs, including their arguments, results and effects. RPC makes the NFS protocol transparent to applications. RPC is also stateless, so the server does not keep the state of an RPC once the request has been served. Each RPC contains all the information needed to complete the call. In the event of a server failure, the client needs to resubmit its requests. NFS has several versions, with v3 and v4 being the most popular. This posting focuses on v3 and briefly covers v4.

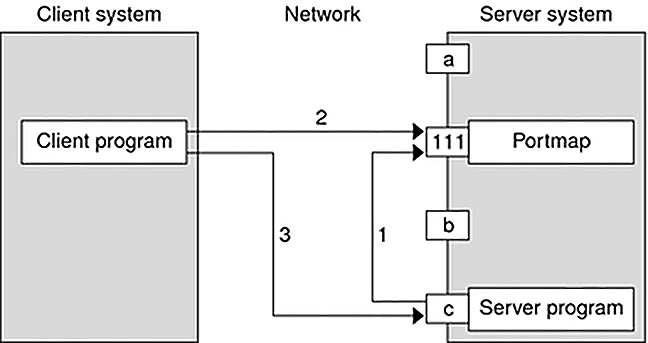

Portmap

RPC makes a remote call appear to the client application as a local call, with the help of portmap. The utility that provides portmap is rpcbind. In RedHat/CentOS 5 and earlier it was simply called portmap, but the two are essentially the same RPC port mapper service.

The rpcbind service is required on both the NFS client and the NFS server. On the client, it talks to the client application as well as to its counterpart on the server. Its main function is to query its counterpart on the server with an RPC program number and get a port number in return. On the server, rpcbind listens on port 111, waits for requests carrying an RPC program number (that is, a service), and returns the TCP or UDP port number on which the requested service is hosted. RPC program numbers are reserved numeric identifiers for services, as outlined in RFC 5531. For example, 100005 is mountd, 100021 is nlockmgr, and 100003 is nfs.

The rpcbind service itself always listens on port 111, which is known to both client and server. It is also referred to as the portmapper daemon. Other than this fixed port, each NFS-related service (with its respective reserved program number) may be hosted on a different port on the server. The client rpcbind service must first look up the port for the requested program number, and then directs the client to initiate a connection to that port for the specific service.
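
To make the lookup concrete, here is a minimal sketch of the message a client would send to port 111 to ask "which port hosts program 100003?". It assumes portmapper version 2 (program 100000, procedure 3, GETPORT) with AUTH_NONE credentials, and it only builds and parses bytes; it does not open a socket. Over UDP the bytes would be sent as-is, while TCP adds a record-marking header that this sketch leaves out. The function names are made up for this example.

```python
import struct

PMAP_PROG = 100000        # the portmapper's own RPC program number
PMAP_VERS = 2             # portmapper protocol version assumed here
PMAPPROC_GETPORT = 3      # procedure: map (program, version, protocol) to a port
IPPROTO_TCP = 6
NFS_PROG = 100003         # program number for nfs, as listed above

CALL, REPLY = 0, 1        # RPC message types
RPC_VERSION = 2


def build_getport_call(xid, prog, vers, proto=IPPROTO_TCP):
    """Return the XDR-encoded RPC call asking rpcbind for a service's port."""
    header = struct.pack(">6I", xid, CALL, RPC_VERSION,
                         PMAP_PROG, PMAP_VERS, PMAPPROC_GETPORT)
    auth_none = struct.pack(">2I", 0, 0)   # flavor AUTH_NONE, zero-length body
    # Arguments: program, version, protocol, and a port field ignored in calls.
    args = struct.pack(">4I", prog, vers, proto, 0)
    return header + auth_none + auth_none + args   # credential, then verifier


def parse_getport_reply(reply, expected_xid):
    """Extract the port number from an accepted, successful GETPORT reply."""
    xid, msg_type, reply_stat = struct.unpack_from(">3I", reply, 0)
    if xid != expected_xid or msg_type != REPLY or reply_stat != 0:
        raise ValueError("unexpected or rejected reply")
    _flavor, verf_len = struct.unpack_from(">2I", reply, 12)
    offset = 20 + ((verf_len + 3) // 4) * 4        # XDR pads opaque data to 4 bytes
    (accept_stat,) = struct.unpack_from(">I", reply, offset)
    if accept_stat != 0:
        raise ValueError("call was not successful")
    (port,) = struct.unpack_from(">I", reply, offset + 4)
    return port


call = build_getport_call(xid=1, prog=NFS_PROG, vers=3)
print(len(call), call.hex())
```

A port of 0 in the reply means the program is not registered with rpcbind.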

With the following rpcinfo command you can look up the program-to-port mapping on the destination server isilon.company.com:

# rpcinfo -p isilon.company.com

The following command is commonly used for displaying exported mount points and troubleshooting mounts. Under the hood it uses the information from rpcinfo.

# showmount -e isilon.dcb.digitalhunch.com

On the NFS server side, the rpcbind service must start before the nfs service starts. Otherwise the nfs service cannot register its ports with rpcbind. If you restart rpcbind, every service that had registered with it must restart in order to register again. By default, the NFS server dynamically assigns ports for mountd and nlockmgr within a port range, which makes firewall configuration a pain. These dynamically assigned ports can be pinned via configuration files.

File handle

NFS uses file handles (or fhandles) to represent files. A file handle is a better way to reference a file object than a pathname for three reasons: 1. a file handle has a bounded size (a fixed 32 bytes in v2, variable length up to 64 bytes in v3); 2. if the file is renamed, the file handle remains the same; 3. if a file is deleted and a new file is then created at the same path, the new file gets a new file handle. A file handle typically has three parts:

- volume ID: to identify the mounted file system

- inode #: to identify the file within the mounted file system

- generation #: to detect when a file handle refers to an older version of the inode. Traditional Unix filesystems may reuse inode numbers, so without it an NFS client could mistakenly use an old file handle and access a new file.

The file handle contents are only meaningful to the server; the client treats them as opaque. New file handles are returned to the client by certain procedures, such as LOOKUP, CREATE, and MKDIR. The file handle for the root of the file system is obtained by the client when it mounts the file system, if permissions allow.
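
The role of the generation number is easier to see in code. The sketch below is a toy in-memory server with a made-up handle layout (`FH_FORMAT`: 4-byte volume ID, 8-byte inode number, 4-byte generation); real servers choose their own layout and the client never looks inside. `ToyServer` and `StaleHandle` are hypothetical names; NFS3ERR_STALE is the status a real NFSv3 server returns for an invalid handle.

```python
import struct

FH_FORMAT = ">IQI"   # hypothetical layout: volume ID, inode number, generation


class StaleHandle(Exception):
    """Raised when a handle no longer refers to a live file."""


class ToyServer:
    def __init__(self, volume_id):
        self.volume_id = volume_id
        self.files = {}         # inode number -> data, for live files only
        self.generations = {}   # inode number -> generation, survives deletion

    def create(self, inode, data):
        """Create a file on the given inode and return its file handle."""
        self.generations[inode] = self.generations.get(inode, 0) + 1
        self.files[inode] = data
        return struct.pack(FH_FORMAT, self.volume_id, inode,
                           self.generations[inode])

    def remove(self, inode):
        del self.files[inode]

    def read(self, fhandle):
        volume_id, inode, generation = struct.unpack(FH_FORMAT, fhandle)
        if (volume_id != self.volume_id
                or inode not in self.files
                or generation != self.generations[inode]):
            raise StaleHandle("NFS3ERR_STALE")
        return self.files[inode]


server = ToyServer(volume_id=7)
old_fh = server.create(inode=42, data=b"first file")
server.remove(42)
new_fh = server.create(inode=42, data=b"second file")   # inode number reused

print(server.read(new_fh))
try:
    server.read(old_fh)
except StaleHandle as exc:
    print("old handle rejected:", exc)
```

Without the generation field, `old_fh` and `new_fh` would be byte-for-byte identical and the client holding the old handle would silently read the new file.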

Permission and Locking

When accessing a file on the server, the client passes uid/gid information in its RPCs, and the server performs permission checks as if the user were performing the operation locally. Users and groups are therefore represented as integers. This raises two security problems:

- The mapping from uid/gid to user must be the same on all clients. This is not practical in large deployments, although it can be solved with Network Information Service (NIS);

- Whether the root user on a client has root access to files on the server is a server policy decision. Enabling “root squashing” on the server maps the client’s uid 0 (root) to 65534 (nobody).
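
As a rough illustration of root squashing, the sketch below remaps the caller's credentials before a simplified Unix-style read check. It is not how any particular server implements it: `squash` and `may_read` are hypothetical helpers, only the primary gid is considered, and supplementary groups and ACLs are ignored.

```python
NOBODY_UID = 65534
NOBODY_GID = 65534


def squash(uid, gid, root_squash=True):
    """Map the client's root user to nobody when root squashing is enabled."""
    if root_squash and uid == 0:
        return NOBODY_UID, NOBODY_GID
    return uid, gid


def may_read(file_uid, file_gid, mode, uid, gid):
    """Simplified read check using owner, group and other permission bits."""
    if uid == 0:
        return True                      # unsquashed root bypasses the check
    if uid == file_uid:
        return bool(mode & 0o400)
    if gid == file_gid:
        return bool(mode & 0o040)
    return bool(mode & 0o004)


# A file owned by root on the server, readable by its owner only.
FILE_UID, FILE_GID, FILE_MODE = 0, 0, 0o600

squashed = squash(0, 0, root_squash=True)
unsquashed = squash(0, 0, root_squash=False)
print(may_read(FILE_UID, FILE_GID, FILE_MODE, *squashed))     # client root, squashed
print(may_read(FILE_UID, FILE_GID, FILE_MODE, *unsquashed))   # client root, trusted
```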

Unix has two locking mechanisms (fcntl and flock). NFS supports fcntl-style byte-range locks but has no native flock semantics. Locking is not part of the NFSv3 protocol itself; it is handled by a separate service (nfslock), whose daemon provides the ability to lock regions of NFS files. The NFS service itself therefore stays completely stateless, with locking managed separately. This changed in NFSv4.
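
For reference, this is what an fcntl-style region lock looks like from an application, using Python's standard `fcntl` module on a Unix system. The sketch runs against a local temporary file; the assumption is that the same calls on a file under an NFSv3 mount would be forwarded by the client to the lock service rather than handled locally. `lock_region`, `unlock_region`, and `demo_lock` are names made up for this example.

```python
import fcntl
import os
import tempfile


def lock_region(fd, start, length, exclusive=True):
    """Take a non-blocking advisory lock on bytes [start, start + length)."""
    kind = fcntl.LOCK_EX if exclusive else fcntl.LOCK_SH
    fcntl.lockf(fd, kind | fcntl.LOCK_NB, length, start, os.SEEK_SET)


def unlock_region(fd, start, length):
    """Release the lock on the same byte range."""
    fcntl.lockf(fd, fcntl.LOCK_UN, length, start, os.SEEK_SET)


def demo_lock():
    with tempfile.TemporaryFile() as f:
        f.write(b"x" * 100)
        f.flush()
        lock_region(f.fileno(), 0, 10)     # lock the first 10 bytes
        lock_region(f.fileno(), 50, 10)    # an independent region of the same file
        unlock_region(f.fileno(), 0, 10)
        unlock_region(f.fileno(), 50, 10)
    return True


print(demo_lock())
```

With `LOCK_NB`, a conflicting lock held by another process makes `lockf` raise `OSError` instead of blocking.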

Procedures used in NFS service

The NFS service defines a list of procedures. Here they are, with a brief summary of what each one does. The bottom five RPCs were introduced in v3.

| Procedure | Activity |
| --- | --- |
| GETATTR (fh) | Returns the attributes of a file, similar to the stat syscall. |
| SETATTR (fh, attr) | Sets the attributes of a file (mode, uid, gid, size, atime, mtime); setting the size to 0 truncates the file. |
| STATFS (fh) | Returns the status of a filesystem, such as block size and number of free blocks. Used by, for example, the df command. |
| LOOKUP (dirfh, name) | Returns the fhandle and attributes for the named file in the directory specified by dirfh. |
| READ (fh, offset, count) | Reads from a file, with offset and count specified. In v2, the length is up to 8192 bytes; v3 supports more. |
| WRITE (fh, offset, count, data) | Writes to a file, with offset and count specified, as well as a separate field called data. Returns the new attributes of the file after the write. |
| CREATE (dirfh, name, attr) | Creates a file with the given name in directory dirfh, and returns the new fhandle and attributes. |
| REMOVE (dirfh, name) | Deletes the named file from directory dirfh and returns status. |
| RENAME (dirfh, name, tofh, toname) | Renames name in directory dirfh to toname in directory tofh. |
| LINK (dirfh, name, tofh, toname) | Creates a hard link toname, in directory tofh, that points to name, in directory dirfh. |
| SYMLINK (dirfh, name, string) | Creates a symbolic link name, in the directory dirfh, with value string. |
| READLINK (fh) | Reads a symbolic link and returns the file name of the target. |
| MKDIR (dirfh, name, attr) | Creates a directory name in the directory dirfh, and returns the new fh and attributes. |
| RMDIR (dirfh, name) | Removes the directory with the given name from parent directory dirfh. |
| READDIR (dirfh, cookie, count) | Reads a directory and returns up to count bytes of directory entries from the directory dirfh. The cookie is used in subsequent READDIR calls to start reading at a specific entry in the directory. A cookie of zero gets the server to start with the first entry in the directory. |
| NULL | No activity. Used for testing only. |
| ACCESS | Helps with client caching. |
| MKNOD | Makes a device special file. |
| FSINFO | Returns information about the server’s capabilities. |
| READDIRPLUS | Returns both file handle and attributes to eliminate LOOKUP calls when scanning a directory. |
| COMMIT | In NFSv3, the server can reply to WRITE RPCs immediately without syncing to disk. When the client wants to ensure that the data is on stable storage, it sends a COMMIT RPC. This is used in asynchronous writes for better performance, which is an option negotiated at mount time. |
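
The cookie mechanism in READDIR is plain pagination, which a short sketch can show. Two simplifications are assumed here: the cookie is just an index into a stable list of names (real cookies are opaque values chosen by the server), and the limit counts entries rather than bytes. `readdir` and `list_directory` are hypothetical helpers.

```python
def readdir(entries, cookie, max_entries):
    """Toy READDIR: return a chunk of names, the next cookie, and an EOF flag."""
    chunk = entries[cookie:cookie + max_entries]
    next_cookie = cookie + len(chunk)
    eof = next_cookie >= len(entries)
    return chunk, next_cookie, eof


def list_directory(entries, max_entries=2):
    """Client loop: start with cookie 0 and keep calling until EOF."""
    names, cookie, eof = [], 0, False
    while not eof:
        chunk, cookie, eof = readdir(entries, cookie, max_entries)
        names.extend(chunk)
    return names


directory = ["a.txt", "b.txt", "c.txt", "d.txt", "e.txt"]
print(readdir(directory, 0, 2))      # first call uses a cookie of zero
print(list_directory(directory))     # three calls in total for five entries
```

Because the server hands back the cookie with each reply, it does not need to remember how far any client has read, which fits the stateless design.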

The addition of the COMMIT procedure in v3 offers a way to improve write performance over synchronous writes. However, asynchronous writes require more coordination to ensure data integrity in the event of a server crash. NFSv3 uses a write verifier for this purpose. A write verifier is an 8-byte value that the server must change if it crashes.

- After an asynchronous write, the reply to the WRITE RPC includes a write verifier, which the client must keep for later use;

- The client then sends a COMMIT RPC and the reply contains another write verifier;

- The client compares the verifiers from the two returns for crash detection. If the verifiers don’t match, the client must rewrite all uncommitted data.

- The client must keep all uncommitted data in case of a server crash.
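
The steps above can be sketched with a toy client and server. Assumptions are labeled in the comments: the verifier is derived from a boot counter so the example is deterministic, the server's "cache" and "disk" are dictionaries, and `ToyServer` and `ToyClient` are hypothetical names rather than any real implementation.

```python
import struct


class ToyServer:
    def __init__(self):
        self.boot_count = 1
        self.cache = {}   # volatile: lost on crash
        self.disk = {}    # stable storage

    @property
    def verifier(self):
        # Assumption: 8-byte verifier derived from a boot counter.
        return struct.pack(">Q", self.boot_count)

    def write_unstable(self, offset, data):
        self.cache[offset] = data          # reply before syncing to disk
        return self.verifier

    def commit(self):
        self.disk.update(self.cache)       # flush cached writes to stable storage
        self.cache.clear()
        return self.verifier

    def crash_and_reboot(self):
        self.cache.clear()                 # uncommitted data is gone
        self.boot_count += 1               # so the verifier must change


class ToyClient:
    def __init__(self, server):
        self.server = server
        self.uncommitted = {}              # offset -> (data, verifier from WRITE)

    def write(self, offset, data):
        verifier = self.server.write_unstable(offset, data)
        self.uncommitted[offset] = (data, verifier)

    def commit(self):
        while self.uncommitted:
            commit_verifier = self.server.commit()
            # Writes acknowledged under a different verifier were lost in a crash.
            lost = {off: data for off, (data, verifier)
                    in self.uncommitted.items() if verifier != commit_verifier}
            self.uncommitted.clear()
            for off, data in lost.items():
                self.write(off, data)      # rewrite, then loop to commit again


server = ToyServer()
client = ToyClient(server)
client.write(0, b"AAAA")
server.crash_and_reboot()                  # the first write never reached disk
client.write(4, b"BBBB")
client.commit()
print(server.disk)
```

The client only discards its copy of the data once a COMMIT reply carries the same verifier as the corresponding WRITE reply.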

Additional daemon processes

In addition to the three essential services (nfs, rpcbind and nfslock), several auxiliary processes facilitate NFS services. Their functions are listed here:

| Process | Description |
| --- | --- |
| rpc.mountd | Used by NFS server to process MOUNT requests from NFSv3 client. It checks that the requested NFS share is currently exported by the NFS server, and that the client is allowed to access it. If the mount request is allowed, the rpc.mountd server replies with a Success status and provides the File-Handle for this NFS share back to the NFS client. |
| rpc.nfsd | Allows explicit NFS versions and protocols the server advertises to be defined. It works with the Linux kernel to meet the dynamic demands of NFS clients, such as providing server threads each time an NFS client connects. This process corresponds to the nfs service. |
| rpc.lockd | A kernel thread which runs on both clients and servers. It implements the Network Lock Manager (NLM) protocol, which allows NFSv3 clients to lock files on the server, using procedures such as NLM_NULL, NLM_TEST, NLM_LOCK, NLM_GRANTED, NLM_UNLOCK, NLM_FREE. The service is started automatically whenever the NFS server is run and whenever an NFS file system is mounted. |
| rpc.statd | This process implements the Network Status Monitor (NSM) RPC protocol, which notifies NFS clients when an NFS server is restarted without being gracefully brought down. rpc.statd is started automatically by the nfslock service, and does not require user configuration. This is not used with NFSv4. |
| rpc.rquotad | This process provides user quota information for remote users. rpc.rquotad is started automatically by the nfs service and does not require user configuration. |
| rpc.idmapd | Provides NFSv4 client and server upcalls, which map between on-the-wire NFSv4 names (strings in the form of user@domain) and local UIDs and GIDs. For idmapd to function with NFSv4, the /etc/idmapd.conf file must be configured. At a minimum, the “Domain” parameter should be specified, which defines the NFSv4 mapping domain. If the NFSv4 mapping domain is the same as the DNS domain name, this parameter can be skipped. The client and server must agree on the NFSv4 mapping domain for ID mapping to function properly. |

NFSv4

Although NFSv4 was introduced some 20 years ago, it improves the access and performance of NFS over the Internet, and it should be the default option for any new deployment.

- NFSv4 is a TCP-only protocol and it is stateful.

- NFSv4 folds the mount and lock protocols into NFS itself, so only one well-known port (2049) is used.

- Users and groups are identified with strings (user@domain, or group@domain where domain represents a registered DNS domain or sub-domain), instead of integers. The access control policies are compatible with both Unix and Windows.

- NFSv4 mandates strong RPC security built on cryptography, with negotiation at the time of mount

- NFSv4 adopted a framework for authentication, integrity and privacy at RPC level

- NFSv4 introduces a new RPC, COMPOUND, which bundles several operations into one request. At the server, the operations are evaluated in order, and each has a return value.
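
A COMPOUND request can be pictured as a list of operations that share a "current file handle" on the server. The sketch below evaluates such a list in order and, as NFSv4 does, stops at the first operation that fails. The directory tree, the use of path strings as file handles, and the helper names are all assumptions of this toy example; PUTROOTFH, LOOKUP, GETATTR, NFS4_OK and NFS4ERR_NOENT are real NFSv4 names.

```python
# Toy export tree: path -> attributes (path strings stand in for file handles).
TREE = {
    "/": {"type": "dir"},
    "/export": {"type": "dir"},
    "/export/notes.txt": {"type": "file", "size": 42},
}


def op_putrootfh(state):
    state["current_fh"] = "/"
    return "NFS4_OK", None


def op_lookup(state, name):
    path = state["current_fh"].rstrip("/") + "/" + name
    if path not in TREE:
        return "NFS4ERR_NOENT", None
    state["current_fh"] = path
    return "NFS4_OK", None


def op_getattr(state):
    return "NFS4_OK", TREE[state["current_fh"]]


HANDLERS = {"PUTROOTFH": op_putrootfh, "LOOKUP": op_lookup, "GETATTR": op_getattr}


def run_compound(operations):
    """Evaluate operations in order; stop at the first one that fails."""
    state = {"current_fh": None}
    results = []
    for name, args in operations:
        status, value = HANDLERS[name](state, *args)
        results.append((name, status, value))
        if status != "NFS4_OK":
            break
    return results


ok = run_compound([("PUTROOTFH", ()), ("LOOKUP", ("export",)),
                   ("LOOKUP", ("notes.txt",)), ("GETATTR", ())])
failed = run_compound([("PUTROOTFH", ()), ("LOOKUP", ("missing",)),
                       ("GETATTR", ())])
print(ok[-1])
print(failed)
```

In v3 the same path walk would take one LOOKUP round trip per path component plus a GETATTR; here it is a single request.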

NFSv4.1 was released in 2010, and 4.2 in 2016. Both AWS EFS and Azure Files support 4.1.