In November 2020, I created the OrthWeb project, a deployment of the Orthanc server. Orthanc is a DICOM viewer and repository shipped as a Docker container. In the deployment project, I use Terraform to provision the infrastructure, including a managed PostgreSQL instance, an EC2 instance as the Docker runtime, and the init script that brings up the web service. I whipped up the project for a demo and skipped some security configuration. For example, the database password was stored in clear text in the Terraform configuration, and the web certificate was stored in the repository. I recently had some time to fix that. The effort led me to the conclusion that the project needs a better platform (i.e. a managed Kubernetes cluster), so I wanted to note down how I got there.

Secret store

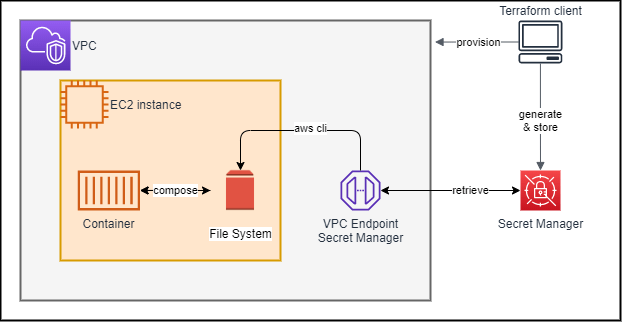

In AWS, both Parameter Store and Secrets Manager can act as a secret store. Secrets Manager costs more but comes with additional features, such as a built-in password generator, secret rotation, and cross-account access. We use Secrets Manager, but we generate the password within Terraform because we need to specify the password during database provisioning. The secret store requires certain special characters to be excluded, and Terraform lets us specify which special characters are allowed. For the EC2 instance to pull from Secrets Manager, the following entities are needed:

- A secret store

- A VPC endpoint to expose the secret store to the subnet via a private route

- A security group for the VPC endpoint

- An instance profile for the EC2 instance, containing an IAM role that is allowed to get the secret value

- A rule in the EC2 instance's security group that allows traffic to the secret store

- A script on the EC2 instance that calls the secret store through the VPC endpoint

This is a common pattern for interaction between a compute resource and a VPC endpoint. The details are in compute.tf, network.tf, secgrp.tf and secret.tf. The secret name needs to be partially randomized to avoid naming conflicts with previously deleted secrets that are still awaiting final deletion.
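The project handles the name randomization in Terraform (secret.tf). Outside Terraform, the same idea can be sketched in shell. The function name `random_secret_name` is hypothetical, and the 8-byte (16 hex character) suffix is an assumption chosen only to resemble the secret ID used in the CLI example later in this post.

```shell
#!/bin/sh
# random_secret_name PREFIX
# Print PREFIX followed by 16 random hex characters, so that a re-created
# secret never reuses the name of a deleted one.
# Assumption: an 8-byte suffix, resembling DatabaseCreds51c1db4172ae9c54.
random_secret_name() {
    prefix=$1
    # Read 8 random bytes, print them as hex, and strip od's spacing.
    suffix=$(od -An -N8 -tx1 /dev/urandom | tr -d ' \n')
    printf '%s%s\n' "$prefix" "$suffix"
}
```

Calling `random_secret_name DatabaseCreds` prints a different name on each run, which could then be passed to whatever tool creates the secret.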

Passing Secret to container

Here is an example CLI command to pull the secret:

$ aws secretsmanager get-secret-value --secret-id DatabaseCreds51c1db4172ae9c54 --query SecretString --output text --endpoint-url https://vpce-0897b168cf1c60df2-khx32o7f.secretsmanager.us-east-1.vpce.amazonaws.com | jq -r .password

The connection is made over a private network route, whether the instance is in a public or a private subnet, and the traffic is encrypted with TLS. Once on the operating system, the secret is available on standard output and can be written to a file or saved in an environment variable.

My first attempt was to use Docker’s secrets and configs so that I would not have to store the secret in plain text on the file system. I eventually gave up on this approach because of several hiccups. First, secrets and configs are part of Docker swarm services, so I had to initialize Docker swarm before I could load the secret, with the following commands:

$ docker swarm init

$ echo mdbuser123 | docker secret create db_un -

$ echo m1p@ssw0rd | docker secret create db_pw -

$ echo 10.2.32.41 | docker config create db_ep -

The contents of the secrets and configs are presented as files in the container file system at different locations, as can be verified this way:

$ docker service create --name="redis" --secret=db_un --secret=db_pw --config=db_ep redis:alpine

$ docker container ls

$ docker exec -it c8ed2a278ca8 sh

# cat /db_ep

# cat /run/secrets/db_un

# cat /run/secrets/db_pw

This is a great way to pass secrets and configs to container applications. However, since the values are stored as file contents, the main application must be able to load a file’s content as a configuration value. In my specific scenario, the application expects an explicit value in its configuration file or in an environment variable.

On the other hand, the Docker documentation states that Docker secrets do not set environment variables directly. This was a conscious decision, because environment variables can unintentionally be leaked between containers. In other words, I could present the secrets as files, but the application could not use them. There is potentially a workaround, which works functionally but adds another layer of complexity.
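The workaround is not spelled out in this post. One common form, sketched here as an assumption rather than as what OrthWeb does, is a wrapper entrypoint that reads each file under /run/secrets and exports it as an environment variable before starting the application. The function name `export_secrets` and the upper-casing rule (db_pw becomes DB_PW) are hypothetical.

```shell
#!/bin/sh
# export_secrets [DIR]
# Export every regular file in DIR (default /run/secrets) as an environment
# variable named after the file, e.g. /run/secrets/db_pw -> DB_PW.
export_secrets() {
    secrets_dir=${1:-/run/secrets}
    for secret_file in "$secrets_dir"/*; do
        # Skip anything that is not a regular file (including an unmatched glob).
        [ -f "$secret_file" ] || continue
        # Upper-case the file name and replace unsupported characters with "_".
        var_name=$(basename "$secret_file" \
            | tr '[:lower:]' '[:upper:]' \
            | tr -c 'A-Z0-9_\n' '_')
        # Command substitution drops the trailing newline left by echo.
        export "$var_name=$(cat "$secret_file")"
    done
}
```

A wrapper script would call `export_secrets` and then hand over to the real application with `exec "$@"`. Note that this puts the secret back into the process environment, which is exactly the exposure the Docker documentation tries to avoid, so it trades some safety for compatibility.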

Moreover, I later discovered that this isn’t even a viable approach if I use docker-compose. This is because I must declare those entries from the secret store or config store as external, and external secrets are not available to containers created by docker-compose.

With reluctance, I store the config and secret keys and values in a file, and use the env_file section in docker-compose to import them as environment variables. The application can then pick up the environment variables as configuration values.
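Since the secret now sits in a plain-text file, it is worth at least restricting who can read that file. The following is a minimal sketch, not the project's actual init script. The function name `write_env_file` and the variable names in the usage note are hypothetical.

```shell
#!/bin/sh
# write_env_file FILE KEY=VALUE...
# Write each KEY=VALUE pair on its own line to FILE, readable by the owner only.
write_env_file() {
    env_file=$1
    shift
    # Create or truncate the file, then lock it down before any secret lands in it.
    : > "$env_file" || return 1
    chmod 600 "$env_file" || return 1
    for pair in "$@"; do
        printf '%s\n' "$pair" >> "$env_file" || return 1
    done
}
```

For example, `write_env_file /tmp/orthanc.env "DB_USERNAME=$db_un" "DB_PASSWORD=$db_pw"` produces a file that the env_file section can point to. Values are written unquoted, so this sketch assumes they contain no newlines.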

X509 Certificate

We use a self-signed X509 certificate, along with its private key. Creating one is straightforward. However, when I tested on a Mac, the browser did not load the page. The reason is that since macOS 10.15, a certificate requires several extensions: ExtendedKeyUsage, and Subject Alternative Name containing the DNS name. The openssl shipped with the operating system was outdated (v1.0.2), so I had to install the openssl11 package and create the certificate as follows:

openssl11 req -x509 -nodes -days 365 -newkey rsa:2048 -keyout /tmp/private.key -out /tmp/certificate.crt -subj /C=CA/ST=Ontario/L=Waterloo/O=Digihunch/OU=Imaging/CN=digihunch.com/[email protected] -addext extendedKeyUsage=serverAuth -addext subjectAltName=DNS:orthweb.digihunch.com,DNS:digihunch.com

The Mac ships an openssl utility backed by LibreSSL, which can achieve the same result with slightly different command-line arguments.

Next Step

The limitation on passing secrets concerns me, and I’m looking to move to a managed Kubernetes platform, where secrets can be exposed as environment variables of Pods. We can also consider ECS in AWS, which can inject sensitive data from Secrets Manager into containers.