This post discusses admission control in Kubernetes and one of its implementations, OPA Gatekeeper. I also discuss Azure Policy, which builds on Gatekeeper.

Admission Webhooks

An admission controller intercepts requests to the Kubernetes API server after the request has been authenticated and authorized, and before the object is persisted to the etcd store. There are many compiled-in admission plugins, which can be turned on and off with the arguments of the kube-apiserver process on the control-plane node. For example, the ImagePolicyWebhook plugin can be enabled by adding ImagePolicyWebhook to the --enable-admission-plugins flag, and its configuration can be provided via the --admission-control-config-file flag.

In addition to the compiled-in admission plugins (which must be configured on the kube-apiserver process on the node), admission plugins can be developed as extensions and run as webhooks that are configured at runtime. This lets users configure webhooks dynamically through the API, without restarting the kube-apiserver process on the node, which is usually hard to do on managed Kubernetes platforms. This mechanism is therefore called dynamic admission control.

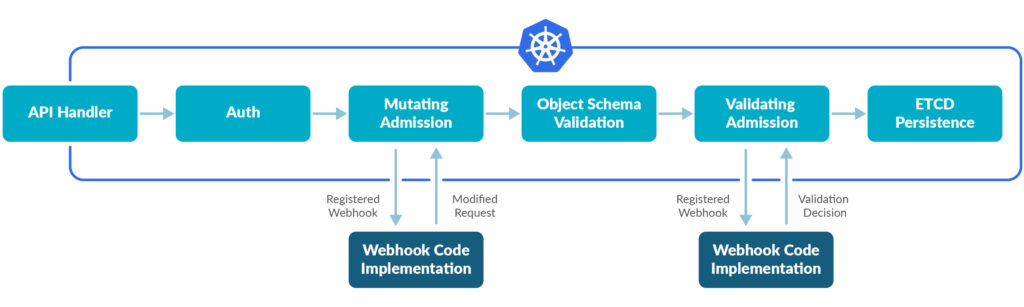

Dynamic admission control supports two types of admission webhooks: validating admission webhooks and mutating admission webhooks. The diagram above illustrates how they interact with the API server.

A mutating admission webhook can change the object in the API request, whereas a validating admission webhook can only accept or deny the request.
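The API server calls mutating webhooks in sequence, so each one sees the previous one's changes, and only then calls validating webhooks, any one of which can reject the request. The minimal Python sketch below makes that ordering concrete. It is not kube-apiserver code: the request object is a plain dict, and the webhook functions `add_team_label` and `require_team_label` (and the `team` label) are hypothetical.

```python
import copy
from typing import Any, Callable, Dict, List, Tuple

Obj = Dict[str, Any]
Mutator = Callable[[Obj], Obj]                  # returns the (possibly) modified object
Validator = Callable[[Obj], Tuple[bool, str]]   # returns (allowed, message)


def admit(obj: Obj, mutators: List[Mutator],
          validators: List[Validator]) -> Tuple[bool, str, Obj]:
    """Simulate the two admission phases for one request (illustrative only)."""
    # Work on a copy so the caller's object is left untouched.
    current = copy.deepcopy(obj)
    # Mutating phase: webhooks run in sequence; each sees the previous result.
    for mutate in mutators:
        current = mutate(current)
    # Validating phase: every webhook sees the final object. The real API server
    # may call validating webhooks in parallel; a loop is enough for the sketch.
    for validate in validators:
        allowed, message = validate(current)
        if not allowed:
            return False, message, current  # one denial rejects the request
    # At this point the API server would persist the object to etcd.
    return True, "", current


def add_team_label(obj: Obj) -> Obj:
    """Hypothetical mutating webhook: default the 'team' label."""
    labels = obj.setdefault("metadata", {}).setdefault("labels", {})
    labels.setdefault("team", "default")
    return obj


def require_team_label(obj: Obj) -> Tuple[bool, str]:
    """Hypothetical validating webhook: insist on the 'team' label."""
    labels = obj.get("metadata", {}).get("labels", {})
    if "team" in labels:
        return True, ""
    return False, "missing label: team"


if __name__ == "__main__":
    pod = {"kind": "Pod", "metadata": {"name": "web"}}
    print(admit(pod, [add_team_label], [require_team_label])[:2])  # (True, '')
    print(admit(pod, [], [require_team_label])[:2])  # (False, 'missing label: team')
```

Without the mutating webhook, the same Pod is rejected, which is why the order of the two phases matters.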

A good example of a mutating webhook is Istio’s sidecar injector. We can view its configuration with this command:

$ kubectl get MutatingWebhookConfiguration istio-sidecar-injector -o yaml | less

The returned manifest shows that this configuration forwards the request to the istiod service on port 443, at path /inject, for processing. It also contains matching rules that select the Pod creation requests to intercept.
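A mutating webhook returns its changes as a JSON Patch inside an AdmissionReview response. Below is a minimal Python sketch of a sidecar-style injector, far simpler than Istio’s real one (which also adds init containers, volumes and annotations). The sidecar name `proxy` and image `example.com/proxy:1.0` are hypothetical; the field names (`request.uid`, `response.allowed`, `response.patchType`, `response.patch`) follow the `admission.k8s.io/v1` AdmissionReview API, where the patch is base64-encoded.

```python
import base64
import json
from typing import Any, Dict, List

# Hypothetical sidecar definition, for illustration only.
SIDECAR = {"name": "proxy", "image": "example.com/proxy:1.0"}


def build_patch(pod: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Return JSON Patch operations that append the sidecar, if it is missing."""
    containers = pod.get("spec", {}).get("containers", [])
    if any(c.get("name") == SIDECAR["name"] for c in containers):
        return []  # already injected: nothing to change
    # "/-" appends to the end of the containers array (RFC 6902).
    return [{"op": "add", "path": "/spec/containers/-", "value": SIDECAR}]


def mutate_review(review: Dict[str, Any]) -> Dict[str, Any]:
    """Turn an AdmissionReview request into an AdmissionReview response."""
    request = review["request"]
    # The uid must be echoed back so the API server can match the response.
    response: Dict[str, Any] = {"uid": request["uid"], "allowed": True}
    patch = build_patch(request["object"])
    if patch:
        response["patchType"] = "JSONPatch"
        response["patch"] = base64.b64encode(json.dumps(patch).encode()).decode()
    return {"apiVersion": "admission.k8s.io/v1", "kind": "AdmissionReview",
            "response": response}
```

A Pod that already carries the sidecar gets an allowed response with no patch, so repeated calls do not inject the container twice.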

Validating webhook configurations can be displayed with the following command:

$ kubectl get ValidatingWebhookConfiguration

The output of a validating webhook is a yes-or-no decision. We usually use a validating webhook together with a policy engine that decides whether the request should be accepted or denied.
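For illustration, here is a minimal Python sketch of how a validating webhook turns a policy decision into a response. The hypothetical `decide` function stands in for the policy engine, and its rule (reject container images that are neither pinned to a digest nor tagged with something other than `latest`) is only an example. The response shape follows `admission.k8s.io/v1`: `allowed` carries the yes-or-no decision and `status.message` explains a denial. A real webhook must also be served over HTTPS, which this sketch leaves out.

```python
from typing import Any, Dict, List, Tuple


def image_is_pinned(image: str) -> bool:
    """True if the image reference has a digest or a tag other than 'latest'."""
    if "@" in image:
        return True  # pinned by digest, e.g. nginx@sha256:...
    last = image.rsplit("/", 1)[-1]  # ignore a registry host:port prefix
    if ":" not in last:
        return False  # no tag means the image defaults to ':latest'
    return not last.endswith(":latest")


def decide(pod: Dict[str, Any]) -> Tuple[bool, List[str]]:
    """Hypothetical policy: every container image must be pinned."""
    spec = pod.get("spec", {})
    containers = spec.get("containers", []) + spec.get("initContainers", [])
    problems = [f"{c['name']}: image {c['image']} is not pinned"
                for c in containers if not image_is_pinned(c["image"])]
    return not problems, problems


def validate_review(review: Dict[str, Any]) -> Dict[str, Any]:
    """Build the AdmissionReview response carrying the yes/no decision."""
    request = review["request"]
    allowed, problems = decide(request["object"])
    response: Dict[str, Any] = {"uid": request["uid"], "allowed": allowed}
    if not allowed:
        response["status"] = {"code": 403, "message": "; ".join(problems)}
    return {"apiVersion": "admission.k8s.io/v1", "kind": "AdmissionReview",
            "response": response}
```

Keeping `decide` separate from `validate_review` mirrors the split described above: the webhook only transports the decision, and the policy lives elsewhere.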

Open Policy Agent

Open Policy Agent (OPA) is an open-source, general-purpose policy engine that evaluates policies written in the Rego language against JSON input and returns a result. It is usually integrated into systems that need a policy engine. Kyverno, by contrast, is a separate policy engine designed specifically for Kubernetes. Styra (one of the main OPA contributors) builds products that integrate with Istio’s authorization policy, and offers online courses on OPA policy authoring and on microservice authorization with their product.

OPA is built to be a general-purpose, unified way of solving policy and authorization problems. Microservice authorization involves two activities: decision making (determining an action based on the input, performed by the Policy Decision Point, or PDP) and decision enforcement (rejecting the request with a 4xx status code or letting it through with 200, depending on the decision, performed by the Policy Enforcement Point, or PEP). OPA was introduced to decouple these two activities. It takes a JSON payload as input and evaluates policies written in Rego to reach a decision.
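The PDP/PEP split can be sketched in a few lines of Python. Everything here is hypothetical: `pdp` stands in for OPA evaluating a Rego policy (the rule "anyone may GET, only admins may do anything else" is made up), and `pep` is the enforcement code inside a service. In a real deployment the PEP would send the same input document to OPA instead, for example through OPA’s REST Data API (`POST /v1/data/<policy path>` with a body of `{"input": ...}`), and read the decision from the `result` field of the response.

```python
from typing import Any, Callable, Dict

Decision = Dict[str, Any]


def pdp(input_doc: Dict[str, Any]) -> Decision:
    """Policy Decision Point: maps a JSON-like input to a decision.

    Stand-in for OPA; the rule itself is a made-up example.
    """
    is_read = input_doc.get("method") == "GET"
    is_admin = "admin" in input_doc.get("roles", [])
    return {"allow": is_read or is_admin}


def pep(request: Dict[str, Any], decide: Callable[[Dict[str, Any]], Decision]) -> int:
    """Policy Enforcement Point: builds the input, asks the PDP, enforces the answer.

    It contains no policy logic, so the policy can change without touching this code.
    """
    input_doc = {
        "method": request["method"],
        "path": request["path"],
        "roles": request.get("roles", []),
    }
    decision = decide(input_doc)
    return 200 if decision.get("allow") else 403  # 403 Forbidden on deny


if __name__ == "__main__":
    print(pep({"method": "GET", "path": "/orders"}, pdp))                          # 200
    print(pep({"method": "DELETE", "path": "/orders/7"}, pdp))                     # 403
    print(pep({"method": "DELETE", "path": "/orders/7", "roles": ["admin"]}, pdp))  # 200
```

Because `pep` receives the decision function as a parameter, replacing the in-process stand-in with a call to OPA changes no enforcement code, which is the decoupling OPA aims for.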

The team that develops Open Policy Agent also created a controller (with OPA as its core component) to run validating and mutating webhooks. The original version is OPA-Kubernetes, which uses kube-mgmt; this version is also dubbed Gatekeeper v1.0. When OPA starts, the kube-mgmt sidecar container loads Kubernetes Namespace and Ingress objects into OPA, and you can configure the sidecar to load any other kind of Kubernetes object as well. The sidecar establishes watches on the Kubernetes API server so that OPA has access to an eventually consistent cache of Kubernetes objects. The project has gone through a couple of major version changes, as summarized in this section. As of today, when we deploy Gatekeeper we should use version 3.
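Replicating objects into OPA matters because some policies need more context than the incoming request alone. The Python sketch below is a hypothetical illustration, not kube-mgmt’s code: `IngressCache` applies `ADDED`, `MODIFIED` and `DELETED` watch events to a local copy of Ingress objects, and `host_conflicts` uses that copy to reject an Ingress whose host is already claimed by another Ingress. Because the cache is only eventually consistent, such a check can miss a conflict created moments earlier.

```python
from typing import Any, Dict, List, Tuple

Key = Tuple[str, str]  # (namespace, name)


class IngressCache:
    """Local copy of Ingress objects, kept up to date from watch events."""

    def __init__(self) -> None:
        self._items: Dict[Key, Dict[str, Any]] = {}

    def on_event(self, event_type: str, obj: Dict[str, Any]) -> None:
        meta = obj["metadata"]
        key = (meta["namespace"], meta["name"])
        if event_type == "DELETED":
            self._items.pop(key, None)
        else:  # ADDED or MODIFIED: store the latest version
            self._items[key] = obj

    def hosts(self, exclude: Key) -> Dict[str, Key]:
        """Map each claimed host to the Ingress claiming it, skipping `exclude`."""
        claimed: Dict[str, Key] = {}
        for key, obj in self._items.items():
            if key == exclude:
                continue  # an Ingress being updated must not conflict with itself
            for rule in obj.get("spec", {}).get("rules", []):
                if rule.get("host"):
                    claimed[rule["host"]] = key
        return claimed


def host_conflicts(cache: IngressCache, ingress: Dict[str, Any]) -> List[str]:
    """Return one message per host in `ingress` that is already used elsewhere."""
    meta = ingress["metadata"]
    claimed = cache.hosts(exclude=(meta["namespace"], meta["name"]))
    messages = []
    for rule in ingress.get("spec", {}).get("rules", []):
        host = rule.get("host")
        if host in claimed:
            ns, name = claimed[host]
            messages.append(f"host {host} is already used by ingress {ns}/{name}")
    return messages
```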

Gatekeeper v3

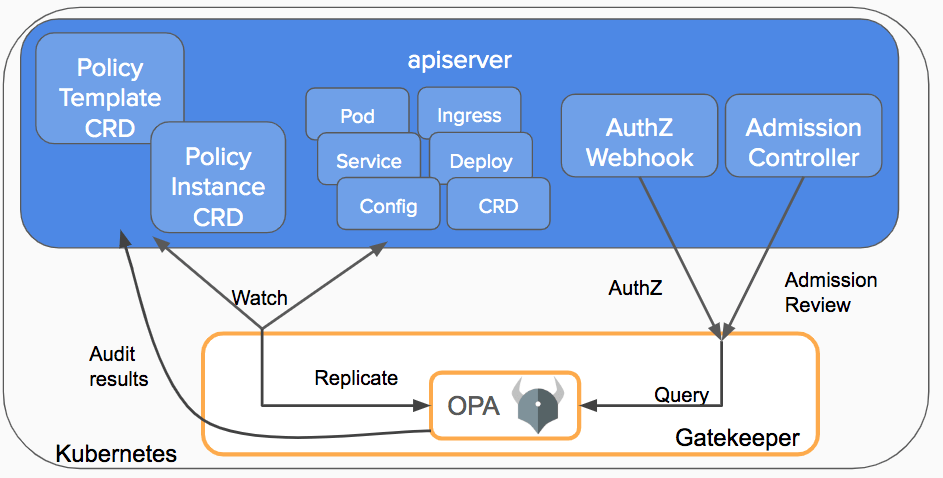

Currently, Gatekeeper v3 is the most popular choice of Kubernetes policy controller. The diagram above illustrates how Gatekeeper integrates with the Kubernetes API server.

We can follow this guide to install Gatekeeper, but the key step is as simple as applying the manifest for the correct version. Alternatively, it can be installed with Helm. After installation, we should see a Service named gatekeeper-webhook-service in the gatekeeper-system namespace. We can also inspect the newly created validating webhook configuration:

$ kubectl get validatingwebhookconfiguration gatekeeper-validating-webhook-configuration -o yaml | less

The result shows that the configuration forwards incoming requests to the gatekeeper-webhook-service Service at the path /v1/admit for validation, and at the path /v1/admitlabel for checking namespace labels. The configuration also contains rules that act as matching criteria.

We can smoke test Gatekeeper v3 with the basic example from its repository: apply the template, then the constraint, and then the manifests in the resources directory. Pod creation will fail with an error like:

Error from server ([pod-must-have-gk] you must provide labels: {"gatekeeper"}): error when creating "resources/bad_pod_namespaceselector.yaml": admission webhook "validation.gatekeeper.sh" denied the request: [pod-must-have-gk] you must provide labels: {"gatekeeper"}
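The template in this example checks for required labels. Its logic can be mirrored in Python to show what the Rego computes: Gatekeeper passes the incoming object as `input.review.object` and the constraint’s parameters as `input.parameters`, and the rule reports the set difference between the required and the provided labels. This is a sketch, not the template itself; it assumes `parameters.labels` is a plain list of label keys and only approximates how Rego prints a set. Gatekeeper then prefixes each message with the constraint name, which is where `[pod-must-have-gk]` in the error comes from.

```python
import json
from typing import Any, Dict, List


def violations(input_doc: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Mirror of a required-labels rule: report labels the object lacks."""
    metadata = input_doc["review"]["object"].get("metadata", {})
    provided = set(metadata.get("labels") or {})       # label keys on the object
    required = set(input_doc["parameters"]["labels"])  # keys the constraint demands
    missing = required - provided
    if not missing:
        return []
    # Approximates Rego's rendering of a set of strings, e.g. {"gatekeeper"}.
    rendered = "{" + ", ".join(json.dumps(k) for k in sorted(missing)) + "}"
    return [{"msg": f"you must provide labels: {rendered}",
             "details": {"missing_labels": sorted(missing)}}]
```

The split between template and constraint shows up in the signature: the rule is fixed, while `parameters` (here, which labels are required) comes from each constraint instance.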

The Gatekeeper documentation also covers the details of using ConstraintTemplates and Constraints. However, writing your own policy in Rego still takes time, and we want to piggyback on the community for commonly used policies. OPA’s gatekeeper-library project keeps a handful of those in its library directory. We can test the privileged container example:

$ cd gatekeeper-library/library/pod-security-policy/privileged-containers

$ kustomize build . | kubectl apply -f -

constrainttemplate.templates.gatekeeper.sh/k8spspprivilegedcontainer created

$ kubectl apply -f samples/psp-privileged-container/example_disallowed.yaml

pod/nginx-privileged-disallowed created

$ kubectl delete -f samples/psp-privileged-container/example_disallowed.yaml

pod "nginx-privileged-disallowed" deleted

$ kubectl apply -f samples/psp-privileged-container/constraint.yaml

k8spspprivilegedcontainer.constraints.gatekeeper.sh/psp-privileged-container created

$ kubectl apply -f samples/psp-privileged-container/example_disallowed.yaml

Error from server ([psp-privileged-container] Privileged container is not allowed: nginx, securityContext: {"privileged": true}): error when creating "samples/psp-privileged-container/example_disallowed.yaml": admission webhook "validation.gatekeeper.sh" denied the request: [psp-privileged-container] Privileged container is not allowed: nginx, securityContext: {"privileged": true}
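The denial comes from a rule that inspects each container’s `securityContext`. Below is a rough Python mirror of that logic, assuming the same `input.review.object` layout Gatekeeper uses. It is a sketch, not the library’s Rego, and it checks only `containers` and `initContainers`; the library version you install may check more.

```python
import json
from typing import Any, Dict, List


def privileged_violations(input_doc: Dict[str, Any]) -> List[str]:
    """Return one message per container that requests privileged mode."""
    spec = input_doc["review"]["object"].get("spec", {})
    messages = []
    for container in spec.get("containers", []) + spec.get("initContainers", []):
        ctx = container.get("securityContext") or {}
        if ctx.get("privileged") is True:
            # json.dumps renders the context as in the error: {"privileged": true}
            messages.append("Privileged container is not allowed: "
                            f"{container['name']}, securityContext: {json.dumps(ctx)}")
    return messages
```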

Currently, the library directory contains two subdirectories, general and pod-security-policy. The latter regulates Pod creation, while the former covers more general use cases such as blocking NodePort Services, enforcing HTTPS-only Ingresses, and requiring probes. This is where I start when building a policy.
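To give a feel for the general policies, here is a Python sketch of the checks behind two of them: blocking `NodePort` Services and requiring HTTPS-only Ingresses. It paraphrases the intent of those policies (an HTTPS-only Ingress needs a TLS section and the `kubernetes.io/ingress.allow-http: "false"` annotation); the function names and messages are made up, and the library’s Rego remains the reference.

```python
from typing import Any, Dict, List


def block_nodeport(service: Dict[str, Any]) -> List[str]:
    """Reject Services of type NodePort (hypothetical message text)."""
    if service.get("spec", {}).get("type") == "NodePort":
        return ["Services of type NodePort are not allowed"]
    return []


def https_only(ingress: Dict[str, Any]) -> List[str]:
    """Require a TLS section and the allow-http annotation set to "false"."""
    problems = []
    annotations = ingress.get("metadata", {}).get("annotations") or {}
    if annotations.get("kubernetes.io/ingress.allow-http") != "false":
        problems.append('annotation kubernetes.io/ingress.allow-http must be "false"')
    if not ingress.get("spec", {}).get("tls"):
        problems.append("spec.tls must be set")
    return problems
```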

Policy constraints take effect cluster-wide. When we have multiple clusters, we would like a single place to manage policies across them.

Azure Policy with AKS

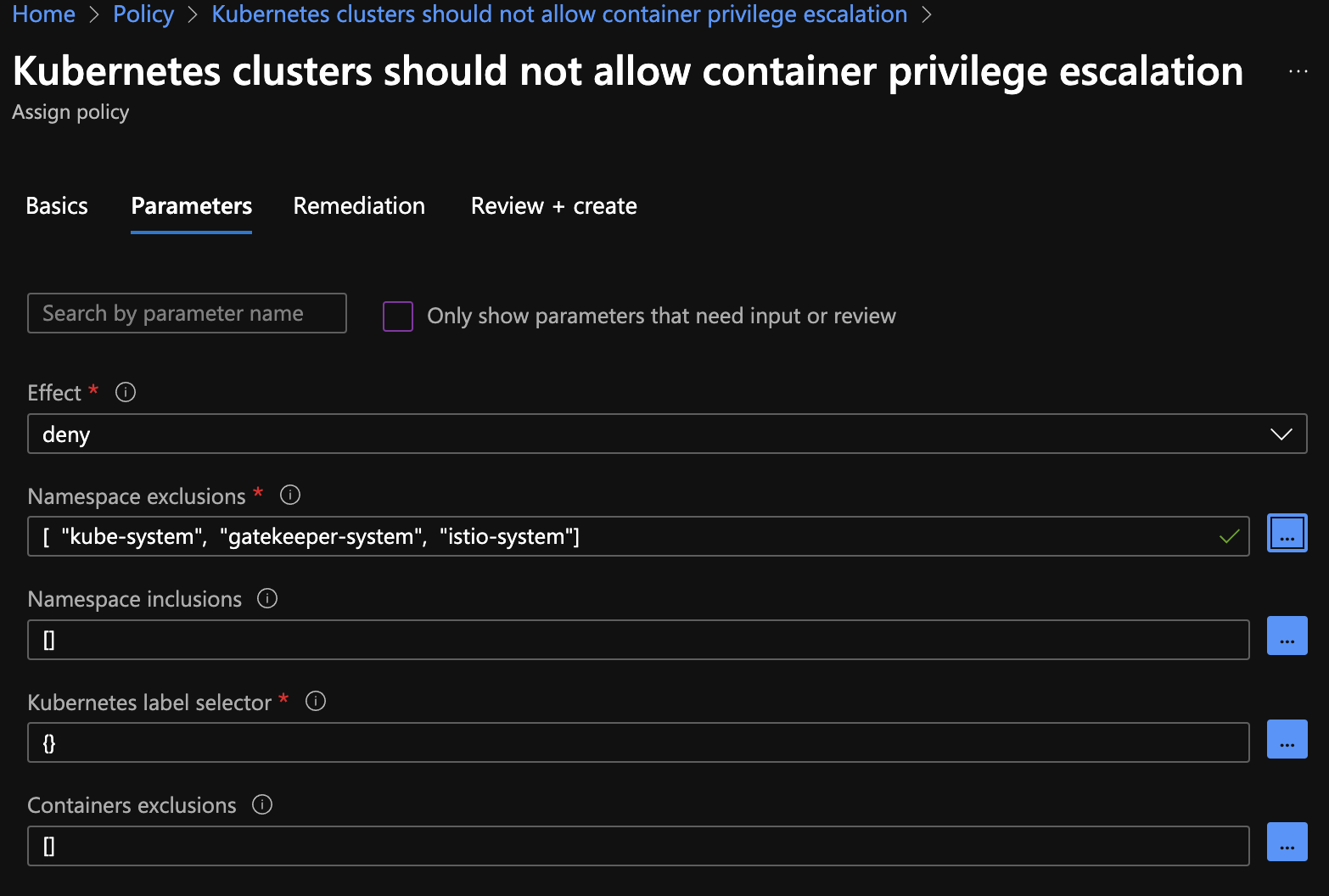

We take Azure Policy with AKS as an example of how a public cloud platform can simplify policy management. When building an AKS cluster, we can install the add-on profile for Azure Policy, which allows Azure Policy to connect to the cluster. Azure Policy contains many built-in policy definitions (as well as initiative definitions, which are groups of related policies). We can simply search for the Kubernetes keyword to find the built-in policies. For example, there is a built-in policy definition “Kubernetes clusters should not allow container privilege escalation”. A definition (policy or initiative) can be assigned to a resource group with the enforcement action set to deny and with some namespaces excluded, as shown in the screenshot above.

The assignment can take as long as 10 minutes to propagate to the cluster. After that, we can confirm it by checking the constraint CRDs. This setup brings a centralized policy management system that can easily be hooked up to multiple clusters.
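On the cluster side, each Gatekeeper constraint carries its own scoping and enforcement settings. Assuming Gatekeeper’s `spec.match.excludedNamespaces` and `spec.enforcementAction` fields (`deny` is the default, while `dryrun` and `warn` let violating requests through), the effect on a single request can be sketched in Python as below. The outcome strings are hypothetical labels, and real matching also considers kinds, namespaces and label selectors.

```python
from typing import Any, Dict, List


def outcome(constraint: Dict[str, Any], namespace: str, violations: List[str]) -> str:
    """Decide what happens to a request, given one constraint's spec (sketch)."""
    spec = constraint.get("spec", {})
    excluded = spec.get("match", {}).get("excludedNamespaces", [])
    if namespace in excluded:
        return "not-evaluated"  # the constraint does not apply in this namespace
    if not violations:
        return "admitted"
    action = spec.get("enforcementAction", "deny")  # Gatekeeper's default is deny
    if action == "deny":
        return "rejected"
    if action == "warn":
        return "admitted-with-warning"
    return "admitted-reported-by-audit"  # dryrun: only recorded as a violation
```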

Another benefit of this architecture is the ability to report compliance. As per recommendation 4.3 of the CIS benchmark for Azure AKS:

Azure Policy extends Gatekeeper v3, an admission controller webhook for Open Policy Agent (OPA), to apply at-scale enforcements and safeguards on your clusters in a centralized, consistent manner. It covers many basic resource types but does not cover any well-known CRDs. Azure Policy makes it possible to manage and report on the compliance state of your Kubernetes clusters from one place.

The Azure Policy add-on in the cluster:

- Checks with Azure Policy service for policy assignments to the cluster.

- Deploys policy definitions into the cluster as constraint template and constraint custom resources.

- Reports auditing and compliance details back to Azure Policy service.
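The three responsibilities listed above amount to a reconcile loop. The Python sketch below illustrates such a loop with in-memory fakes; `FakePolicyService`, `FakeCluster`, `sync_once` and the pruning of removed assignments are hypothetical and not taken from the add-on’s code.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class Assignment:
    name: str
    template: Dict[str, Any]    # becomes a ConstraintTemplate
    constraint: Dict[str, Any]  # becomes a constraint custom resource


@dataclass
class FakePolicyService:
    """In-memory stand-in for the central policy service."""
    assignments: List[Assignment]
    reports: List[Dict[str, Any]] = field(default_factory=list)

    def assignments_for(self, cluster: str) -> List[Assignment]:
        return list(self.assignments)

    def report(self, cluster: str, violations: Dict[str, int]) -> None:
        self.reports.append({"cluster": cluster, "violations": violations})


@dataclass
class FakeCluster:
    """In-memory stand-in for the Kubernetes API of one cluster."""
    name: str
    resources: Dict[str, Dict[str, Any]] = field(default_factory=dict)
    audit_counts: Dict[str, int] = field(default_factory=dict)  # from Gatekeeper audit


def sync_once(service: FakePolicyService, cluster: FakeCluster) -> None:
    wanted = service.assignments_for(cluster.name)   # 1. check assignments
    names = {a.name for a in wanted}
    for a in wanted:                                 # 2. deploy template + constraint
        cluster.resources[f"template/{a.name}"] = a.template
        cluster.resources[f"constraint/{a.name}"] = a.constraint
    for key in list(cluster.resources):              # prune removed assignments
        if key.split("/", 1)[1] not in names:
            del cluster.resources[key]
    service.report(cluster.name,                     # 3. report compliance
                   {n: cluster.audit_counts.get(n, 0) for n in sorted(names)})
```

Running `sync_once` on a timer against each cluster gives the single point of management that a multi-cluster setup needs.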

As of February 2022, AWS EKS doesn’t seem to have an equivalent capability to integrate with a policy management service. The only option is to install Gatekeeper v3 on the cluster yourself, or to host it separately.

Bottom line

Admission control should be a standard part of every Kubernetes deployment. When building a Gatekeeper system on your own, you can set it up separately on a different cluster. When Kubernetes is provided as a platform, admission control is very helpful for the platform operator to manage tenants. If a tenant is an application development team, it also makes sense for that team to develop its own policies for its developers.