We covered hypervisors in a previous post. In this article we focus on virtualizing graphics computing resources.

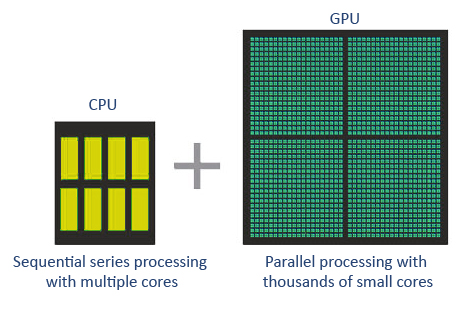

GPU vs CPU

A GPU is a specialized microprocessor originally designed for fast image rendering. GPUs appeared in response to graphically intensive applications that burdened the CPU and degraded computer performance. They became a way to offload those tasks from CPUs, but modern graphics processors are powerful enough to perform rapid mathematical calculations for many purposes beyond rendering.

A CPU consists of relatively few cores optimized for sequential serial processing, designed to maximize the performance of a single task within a job. A GPU uses a massively parallel architecture of thousands of smaller, more efficient cores designed to handle many operations at the same time. Typical use cases for GPUs, in addition to graphics display, include games, 3D visualization, image processing, big data and deep learning.

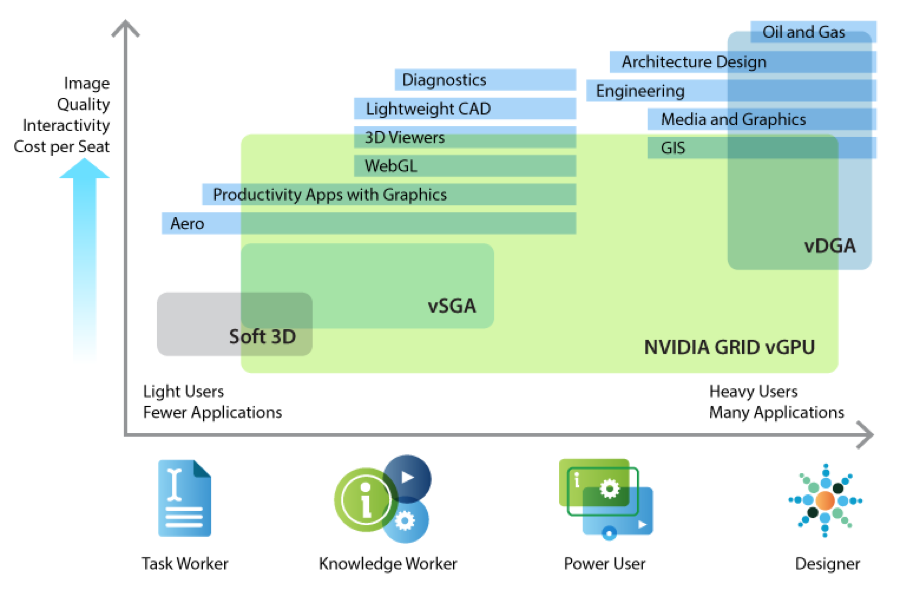

Moving to the virtualization world, the most basic mechanism for graphics acceleration is Soft 3D, which is commonly used in virtual desktops, or DaaS (desktop as a service). The Software 3D renderer (Soft 3D) uses the Soft 3D graphics driver to provide software-accelerated 3D graphics without any physical GPU installed in the ESXi host. For hosts that do have physical GPUs, VMware offers three technologies.

vSGA (Virtual Shared Graphics Acceleration)

The physical GPUs in the server are virtualized and shared across multiple guest VMs. This option involves installing an Nvidia driver in the hypervisor itself, and each guest VM uses a proprietary VMware SVGA 3D driver that communicates with the Nvidia driver in ESXi. The biggest limitation is that these drivers only support DirectX up to 9.0c and OpenGL up to 2.1. This technology was introduced in early 2013 and suits light workloads for knowledge workers, such as PowerPoint, Visio and web browsing.
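As a rough illustration of that API ceiling, the sketch below checks whether an application's required DirectX or OpenGL version falls within the vSGA limits quoted above. The function names `vsga_supports` and `version_le` are hypothetical, the check relies on GNU `sort -V` for version ordering, and it encodes only the 9.0c and 2.1 limits stated in this post, not any VMware tool.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: does a required graphics API version fall within
# the vSGA limits quoted in this post (DirectX 9.0c, OpenGL 2.1)?
# Relies on GNU `sort -V` for version ordering.

# Succeeds if version $1 <= version $2 in version-sort order.
version_le() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$1" ]
}

# Usage: vsga_supports <directx|opengl> <required-version>
# Returns 0 if within the vSGA limit, 1 if above it, 2 for an unknown API.
vsga_supports() {
  local api="$1" required="$2" max
  case "$api" in
    directx) max="9.0c" ;;
    opengl)  max="2.1" ;;
    *) echo "unknown API: $api" >&2; return 2 ;;
  esac
  version_le "$required" "$max"
}
```

For example, `vsga_supports opengl 4.5 || echo "needs vDGA or vGPU"` prints the fallback message, because OpenGL 4.5 is above the 2.1 ceiling.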

vDGA (Virtual Dedicated Graphics Acceleration)

vDGA, also known as “GPU passthrough”, provides each VM with unrestricted, fully dedicated access to one of the host’s GPUs. The hypervisor effectively opens a direct path between the GPU and the guest, presenting an internal PCI GPU directly to a VM. The device behaves as if it were driven directly by the guest, which detects the PCI device as if it were physically connected and uses the “real” vendor driver. No special drivers are needed in the hypervisor. vDGA offers the highest level of performance for users with the most intensive graphics computing needs.

The main advantage of vDGA is that, since the GPU is passed through to the guest and the guest uses the regular Nvidia driver, it supports everything the Nvidia driver can do natively: all versions of DirectX and OpenGL, and even CUDA. The downside is cost, since vDGA needs one GPU per user. vMotion is also not supported.

VMware added support for vDGA in late 2013. The target market is high-end users with intensive graphical applications (oil & gas, scientific simulations, CAD/CAM, etc.).
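Because a passthrough guest sees the real PCI device, you can tell from inside a Linux guest which kind of graphics it has. A minimal sketch, assuming a VMware guest where the emulated adapter appears in lspci as “VMware SVGA II Adapter”: if an NVIDIA display controller is also listed, the VM is using vDGA or vGPU; if only the SVGA adapter is present, it is on Soft 3D or vSGA. The function name `guest_graphics_mode` is hypothetical, and lspci output alone does not distinguish vDGA from vGPU.

```shell
#!/usr/bin/env bash
# Hypothetical helper: classify the graphics a VMware guest sees,
# based on `lspci` output read from stdin. Prints one of:
#   nvidia       - a real NVIDIA display controller is visible (vDGA or vGPU)
#   vmware-svga  - only the emulated VMware SVGA adapter (Soft 3D or vSGA)
#   unknown      - neither was found
guest_graphics_mode() {
  local listing
  listing="$(cat)"
  if printf '%s\n' "$listing" | grep -Eq '(VGA compatible|3D) controller: NVIDIA'; then
    echo nvidia
  elif printf '%s\n' "$listing" | grep -q 'VMware SVGA'; then
    echo vmware-svga
  else
    echo unknown
  fi
}
```

Run it inside the guest as `lspci | guest_graphics_mode`.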

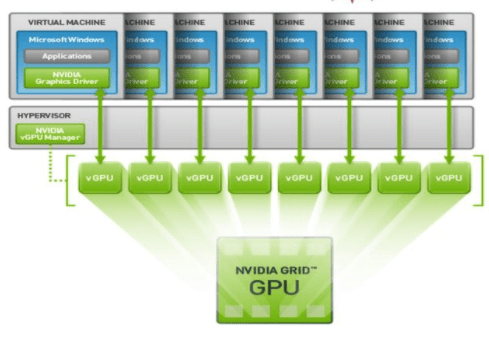

vGPU (Virtual GPU)

vGPU is also known as Virtual Shared Pass-Through Graphics Acceleration. This technology sits between the two previously introduced, striking a balance between cost-effectiveness and resource sharing. It is essentially vDGA with multiple users per GPU instead of a one-to-one mapping. As with vDGA, you install the real Nvidia driver in guest VMs, and the hypervisor passes graphics commands directly to the physical GPU without any translation.

vGPU gives you all of that plus the ability to share a GPU across up to 8 VMs. The idea is to get better performance than vSGA at a fraction of the cost of vDGA. vGPU targets higher-end knowledge workers who need real GPU access but don’t need full multi-thousand-dollar graphics workstations.
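The density difference is easy to quantify. The sketch below computes how many physical GPUs a pool of users needs, using this post's figures of one GPU per user for vDGA and up to 8 VMs per GPU for vGPU. The helper `gpus_needed` is hypothetical, and real vGPU density depends on the GPU model and the vGPU profile chosen.

```shell
#!/usr/bin/env bash
# Hypothetical sizing helper: physical GPUs needed for N users, using the
# densities quoted in this post:
#   vdga -> one GPU per user
#   vgpu -> up to 8 VMs per GPU (real density depends on the vGPU profile)
# Usage: gpus_needed <vdga|vgpu> <users>
gpus_needed() {
  local mode="$1" users="$2" per_gpu
  case "$mode" in
    vdga) per_gpu=1 ;;
    vgpu) per_gpu=8 ;;
    *) echo "unsupported mode: $mode" >&2; return 2 ;;
  esac
  # Integer ceiling division: (users + per_gpu - 1) / per_gpu
  echo $(( (users + per_gpu - 1) / per_gpu ))
}
```

For 20 users, `gpus_needed vdga 20` gives 20 while `gpus_needed vgpu 20` gives 3 (20 divided by 8, rounded up).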

VMware partners with Nvidia on vGPU development. Below is the use-case chart from a previous VMware white paper:

The diagram below illustrates the architecture of virtual GPU (NVIDIA GRID):

The best white paper on the three technologies and their use cases is on the VMware website.

Identify the graphics driver

On a Linux VM, we can simply use lspci to identify the graphics controller.

```
[root@ghrender ~]# lspci | grep VGA
03:00.0 VGA compatible controller: Matrox Electronics Systems Ltd. Integrated Matrox G200eW3 Graphics Controller (rev 04)
3b:00.0 VGA compatible controller: NVIDIA Corporation GP104GL [Quadro P5000] (rev a1)
```

In the output, the leftmost column is the device's PCI address in bus:device.function form (the 0000 domain prefix is omitted), e.g. 3b:00.0.
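`grep VGA` only catches devices whose class is “VGA compatible controller”; some NVIDIA compute cards report themselves as “3D controller” instead. A small sketch that matches both classes and prints just the PCI addresses, optionally filtered by a vendor string (`gpu_addresses` is a hypothetical helper name):

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the PCI address of every display controller in
# `lspci` output read from stdin. Matches both "VGA compatible controller"
# and "3D controller". An optional argument keeps only lines containing
# that vendor string (e.g. NVIDIA).
gpu_addresses() {
  local vendor="${1:-}"
  awk -v vendor="$vendor" '
    / (VGA compatible|3D) controller: / && (vendor == "" || index($0, vendor) > 0) {
      print $1   # first column is bus:device.function
    }'
}
```

On the host above, `lspci | gpu_addresses NVIDIA` prints 3b:00.0, which can then be passed to `lspci -v -s`.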

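To display details of the graphics card at a given address (3b:00.0 in this example), including its memory regions, use the -v and -s options. Note that the “Memory at” lines describe PCI BAR address windows, not the amount of video memory on the card.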

```
[root@ghrender ~]# lspci -v -s 3b:00.0
3b:00.0 VGA compatible controller: NVIDIA Corporation GP104GL [Quadro P5000] (rev a1) (prog-if 00 [VGA controller])
	Subsystem: NVIDIA Corporation Device 11b2
	Flags: bus master, fast devsel, latency 0, IRQ 190, NUMA node 0
	Memory at ab000000 (32-bit, non-prefetchable) [size=16M]
	Memory at 382fe0000000 (64-bit, prefetchable) [size=256M]
	Memory at 382ff0000000 (64-bit, prefetchable) [size=32M]
	I/O ports at 6000 [size=128]
	[virtual] Expansion ROM at ac080000 [disabled] [size=512K]
	Capabilities: [60] Power Management version 3
	Capabilities: [68] MSI: Enable+ Count=1/1 Maskable- 64bit+
	Capabilities: [78] Express Legacy Endpoint, MSI 00
	Capabilities: [100] Virtual Channel
	Capabilities: [250] Latency Tolerance Reporting
	Capabilities: [128] Power Budgeting <?>
	Capabilities: [420] Advanced Error Reporting
	Capabilities: [600] Vendor Specific Information: ID=0001 Rev=1 Len=024 <?>
	Capabilities: [900] #19
	Kernel driver in use: nvidia
	Kernel modules: nouveau, nvidia_drm, nvidia
```
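If you only need the driver lines, `lspci -k -s 3b:00.0` prints the device together with its kernel driver and candidate modules. To pull those two values out of either command's output in a script, a minimal sketch (the helper name `gpu_driver_info` is hypothetical):

```shell
#!/usr/bin/env bash
# Hypothetical helper: extract the bound kernel driver and the candidate
# kernel modules from `lspci -v` or `lspci -k` output read from stdin.
# Splitting on ": " puts the value after the label into $2.
gpu_driver_info() {
  awk -F': ' '
    /Kernel driver in use:/ { print "driver=" $2 }
    /Kernel modules:/       { print "modules=" $2 }'
}
```

For the output above, `lspci -v -s 3b:00.0 | gpu_driver_info` prints `driver=nvidia` and `modules=nouveau, nvidia_drm, nvidia`. The “Kernel modules” line only lists modules capable of handling the device; the “Kernel driver in use” line shows that the proprietary nvidia driver, not nouveau, is the one bound to it.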

The lshw command can also identify both onboard graphics (Intel, AMD, or, as on this host, Matrox) and dedicated Nvidia GPUs:

```
[root@ghrender ~]# lshw -C display
  *-display
       description: VGA compatible controller
       product: Integrated Matrox G200eW3 Graphics Controller
       vendor: Matrox Electronics Systems Ltd.
       physical id: 0
       bus info: pci@0000:03:00.0
       version: 04
       width: 32 bits
       clock: 66MHz
       capabilities: pm vga_controller bus_master cap_list rom
       configuration: driver=mgag200 latency=64 maxlatency=32 mingnt=16
       resources: irq:16 memory:91000000-91ffffff memory:92808000-9280bfff memory:92000000-927fffff
  *-display
       description: VGA compatible controller
       product: GP104GL [Quadro P5000]
       vendor: NVIDIA Corporation
       physical id: 0
       bus info: pci@0000:3b:00.0
       version: a1
       width: 64 bits
       clock: 33MHz
       capabilities: pm msi pciexpress vga_controller bus_master cap_list rom
       configuration: driver=nvidia latency=0
       resources: iomemory:382f0-382ef iomemory:382f0-382ef irq:190 memory:ab000000-abffffff memory:382fe0000000-382fefffffff memory:382ff0000000-382ff1ffffff ioport:6000(size=128) memory:ac080000-ac0fffff
```
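For a compact view, `lshw -businfo -C display` prints one line per device. If you want the bus address, bound driver and product name side by side, here is a minimal awk-based sketch that condenses the full output above; the helper name `display_summary` is hypothetical, and it assumes the “product:”, “bus info:” and “configuration:” labels shown in this output.

```shell
#!/usr/bin/env bash
# Hypothetical helper: condense `lshw -C display` output read from stdin
# into one tab-separated line per device: bus info, driver, product.
# Assumes the "product:", "bus info:" and "configuration:" labels.
display_summary() {
  awk '
    # Print the device collected so far (if any), then reset the fields.
    function flush() {
      if (bus != "") print bus "\t" (drv == "" ? "none" : drv) "\t" prod
      bus = ""; drv = ""; prod = ""
    }
    /\*-display/ { flush() }
    /^[ \t]*product:/  { sub(/^[ \t]*product:[ \t]*/, ""); prod = $0 }
    /^[ \t]*bus info:/ { sub(/^[ \t]*bus info:[ \t]*/, ""); bus = $0 }
    /^[ \t]*configuration:/ {
      for (i = 1; i <= NF; i++)
        if ($i ~ /^driver=/) drv = substr($i, 8)   # strip the "driver=" prefix
    }
    END { flush() }'
}
```

Run it as `lshw -C display | display_summary`; on the host above it lists the Matrox controller with the mgag200 driver and the Quadro P5000 with the nvidia driver.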