In this post we compare Minikube, MicroK8s and KinD as different approaches to building a multi-node Kubernetes cluster locally.

Is Docker Desktop bad?

In the previous post about Docker Desktop as a single-node Kubernetes cluster setup, I touched on the deprecation of dockershim. Kubernetes talks to container runtimes through the Container Runtime Interface (CRI), which the Docker runtime does not implement, so Docker will no longer be supported by Kubernetes as a runtime. Also deprecated is dockershim, the temporary interface that made the Docker runtime work in Kubernetes. The deprecation was announced in December 2020, and the removal eventually landed in Kubernetes 1.24. However, Docker Desktop still uses the Docker runtime in its single-node Kubernetes cluster, which essentially makes it a non-compliant Kubernetes environment.

Docker Desktop still has great value for application developers. If your role is development, you spend a lot of time coding business logic, and you need an easy-to-use container runtime on your laptop, Docker Desktop is a good choice. The recent moves by the company seem to suggest that this is the business they are targeting now. On the other hand, if your role is deployment, automation, orchestration, cloud native and so on, and you are looking for a playground, you most likely need a runtime that is compliant with the Kubernetes CRI. Docker Desktop is not a CNCF-certified project anymore, so it is not the right choice for that role.

Alternatives

There are a number of alternatives. The most well-known ones are Minikube, MicroK8s, KinD and K3s with K3d. This presentation from CNCF in 2020 covers a lot of details about these technologies. I’ll try to add my opinion.

K3s is Rancher Labs’ lightweight Kubernetes distribution that supports multi-node clusters as well as different runtimes (e.g. containerd). It is not straightforward to set up, and k3d is a command-line wrapper that makes it easy to install a K3s cluster. K3s was accepted as a CNCF project but only at Sandbox maturity level, so it is not my choice.

The other three, Minikube, MicroK8s and KinD, are all certified CNCF projects. I will further discuss how to choose among them. These projects take different approaches to the challenges of deploying multiple nodes in a local environment (e.g. my laptop).

The challenge with running a Kubernetes cluster with multiple nodes locally is how to manage these nodes. They are separate virtual resources that need to be isolated from a compute perspective, yet connected as a cluster. This is typically the use case for a Type 2 hypervisor; alternatively, it can be implemented with container technology. This layer of technology (referred to as the driver) makes a big difference.

Minikube

Minikube supports multiple drivers. The preferred driver differs by platform (Windows, Linux, or MacOS). Refer to the document here for the preferred driver, and this blog post for more instructions. In addition to the documents, here are some notes from my personal experience:

- On MacOS, Minikube lists Docker as the preferred driver. I disagree with that. If you have no other reason to install Docker, I would recommend hyperkit as the preferred driver. Hyperkit can be installed with a simple Homebrew command. I do not recommend Docker as the Minikube driver for two reasons. First, it requires a separate installation of Docker Desktop, which includes its own built-in instance of hyperkit. This isn’t neat. Second, I often need the MetalLB add-on with Minikube for testing Kubernetes Ingress. With Minikube on Docker, the Ingress ports are not exposed to the MacOS host, so you cannot directly visit websites spun up on Minikube. This has been a known issue for a while, due to a limitation of the Docker bridge network on Mac. Some reported an ugly workaround with docker-mac-net-connect but I never got it to work.

- In a native Windows environment, the preferred driver is Hyper-V. The Minikube CLI commands have to be run from Windows PowerShell.

- On WSL2, Minikube doesn’t play well, regardless of driver. The hyperkit driver won’t work (it is designed for MacOS only). The kvm2 driver requires a KVM hypervisor. However, WSL2 itself is a VM on top of a hypervisor, as explained here, so getting the kvm2 driver to work would require nested virtualization, and I doubt it will ever be supported. As for Docker on WSL2 as the driver, Minikube has it as an experimental feature, and it requires configuring cgroups to allow setting memory. I am not confident in it.

To me, Minikube is the tool for MacOS (I have an Intel processor). On MacOS, we first need to install minikube and hyperkit with Homebrew.
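The install step can be sketched as follows. This is a minimal sketch, assuming Homebrew is already installed and that the formulae are named minikube and hyperkit; missing_tools is a helper name made up for this example, not part of either tool.

```shell
# List which of the given commands are not yet on PATH.
# missing_tools is a hypothetical helper used only in this sketch.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}

# Usage (not executed here): install only what is missing.
#   to_install=$(missing_tools minikube hyperkit)
#   [ -n "$to_install" ] && brew install $to_install
```

If both tools are already present, the helper prints nothing and the install is skipped.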

We can then start a Kubernetes cluster with minikube in a single command. I noticed a process on my MacBook called dnscrypt-proxy that conflicts with the hyperkit DNS server when starting minikube. I had to remove dnscrypt-proxy (part of Cisco Umbrella Roaming Client) in order to get minikube to work, as this thread suggests. You can find out whether it is running with:

sudo lsof -i :53
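The lsof output can be long. As a small sketch, the helper below (dnscrypt_pids is a name made up for this example) reads that output and prints only the PIDs whose command name starts with dnscrypt. By default lsof truncates command names to nine characters, so the process is expected to show up as “dnscrypt-”.

```shell
# Read `lsof -i :53` output on stdin and print the unique PIDs of
# processes whose (possibly truncated) command name starts with "dnscrypt".
dnscrypt_pids() {
  awk 'NR > 1 && $1 ~ /^dnscrypt/ { print $2 }' | sort -u
}

# Usage (not executed here):
#   sudo lsof -nP -i :53 | dnscrypt_pids
#   ps -p <PID> -o comm=     # shows the executable behind a reported PID
```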

If dnscrypt-proxy is running, find the application by PID and remove it. Otherwise there will be issues. Check out this section of the document. The commands that I use to start a multi-node cluster are:

minikube start --driver=hyperkit --container-runtime=containerd --memory=12288 --cpus=2 --disk-size=150g --nodes 3

kubectl get po -A

kubectl describe node minikube | grep Runtime

Node administration is simple. To enable the dashboard, simply run “minikube dashboard”. To SSH to a node, run “minikube ssh -n <node_name>”. To stop the nodes and delete the cluster, run “minikube stop && minikube delete”.

There are some add-ons in minikube, for example efk, gvisor, istio and metrics-server. To list the add-ons and enable metrics-server, run:

minikube addons list

minikube addons enable metrics-server

When creating a cluster, instead of specifying its configuration imperatively on each run, the configuration (e.g. driver, container runtime, CPU, memory, number of nodes) can be stored as a profile with the -p switch. Like other Minikube configuration information, Minikube profiles are stored in ~/.minikube under the profiles directory.

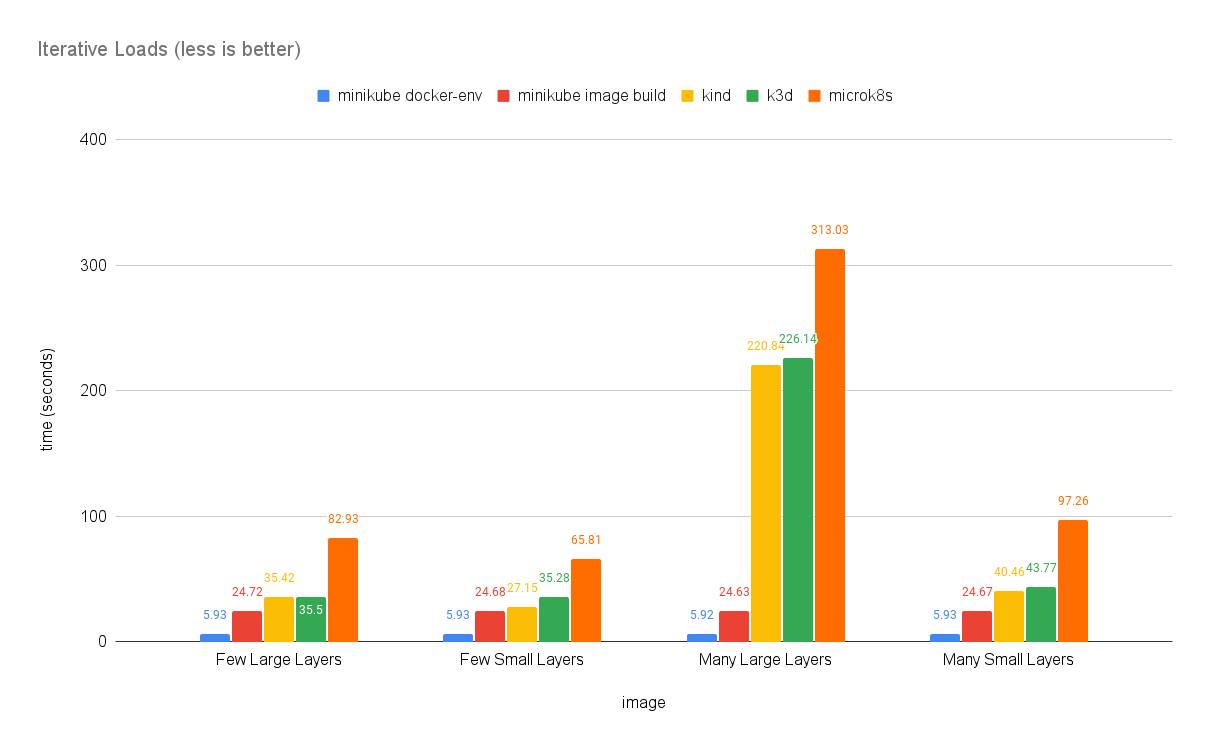
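Working with a profile could look like the sketch below. The profile name multinode and the wrapper name mk are made up for this example; the wrapper only saves typing the -p switch on every call.

```shell
# Hypothetical profile name; override with PROFILE=... if you like.
PROFILE="${PROFILE:-multinode}"

# Run any minikube subcommand against the chosen profile.
mk() {
  minikube -p "$PROFILE" "$@"
}

# Usage (not executed here):
#   mk start --driver=hyperkit --container-runtime=containerd --nodes 3
#   mk status
#   mk ssh -n "$PROFILE-m02"   # second node of the profile
#   mk delete
#   minikube profile list      # all stored profiles
```

Keeping one profile per experiment makes it possible to have several clusters defined side by side and to delete one without touching the others.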

Minikube also has a page that benchmarks the performance of these technologies, where it presents itself as the most performant.

MicroK8s

MicroK8s is developed by Canonical. It can use either Multipass or LXD containers as the driver. Multipass can configure Ubuntu VMs using cloud-init. It supports multiple hypervisor backends as well; the defaults are hyperkit on MacOS, Hyper-V on Windows, and KVM on Linux.

MicroK8s supports multi-node configuration across multiple machines. That is, nodes can span across multiple physical machines. This is more powerful than Minikube where multiple nodes are on the same physical machine. It brings MicroK8s additional use cases such as edge and IoT devices.

With that capability comes an extra step to configure a MicroK8s cluster. You need to manually join each node to the cluster, because the new node is potentially located on a different machine and the join command is executed from that machine. With Minikube, on the other hand, you simply specify the desired number of nodes in a command or profile.
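The manual join can be sketched as follows. This assumes that “microk8s add-node”, run on an existing node, prints one or more lines starting with “microk8s join”, which is the behaviour I have seen but may differ between versions; join_command is a helper name made up for this example.

```shell
# Read `microk8s add-node` output on stdin and print the first suggested
# join command. join_command is a hypothetical helper name.
join_command() {
  grep -m1 -E '^[[:space:]]*microk8s join ' | sed 's/^[[:space:]]*//'
}

# Usage (not executed here):
#   On an existing cluster node:  microk8s add-node | join_command
#   On the new node:              run the printed "microk8s join ..." line
```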

Snap is the native package manager for installing MicroK8s, making GNU/Linux (e.g. Ubuntu) the native platform. MicroK8s also supports MacOS and Windows. It does not rely on Docker (unlike KinD and Minikube with Docker as the driver), and uses containerd as its runtime.

MicroK8s comes with its own packaged version of kubectl, which you invoke with the “microk8s kubectl” command. That is not convenient. As an extra step, you can configure your host kubectl to point to the MicroK8s cluster.
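One way to do that extra step is to export the cluster's kubeconfig with “microk8s config” and point the host kubectl at the resulting file. A minimal sketch, where the file path and the function name are assumptions chosen for this example:

```shell
# Where to store the exported kubeconfig; this path is an assumption
# made for the example, not a MicroK8s default.
MICROK8S_KUBECONFIG="${MICROK8S_KUBECONFIG:-$HOME/.kube/microk8s.config}"

# Write the MicroK8s kubeconfig to that file. The umask makes a newly
# created file readable by its owner only.
export_microk8s_kubeconfig() {
  mkdir -p "$(dirname "$MICROK8S_KUBECONFIG")"
  ( umask 077 && microk8s config > "$MICROK8S_KUBECONFIG" )
}

# Usage (not executed here):
#   export_microk8s_kubeconfig
#   KUBECONFIG="$MICROK8S_KUBECONFIG" kubectl get nodes
```

Using a separate file rather than overwriting ~/.kube/config keeps contexts for other clusters intact.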

Compared to the other two technologies, MicroK8s is more powerful in the sense that the cluster can be built on nodes across multiple machines. However, it takes more steps to configure, even for a multi-node, single-machine environment. Refer to this post for the steps.

KinD

KinD is similar to Minikube with Docker as the driver. It is more restricted than Minikube, since Docker is the only driver it supports. This makes it a requirement to have Docker installed locally.

Although KinD uses Docker to run nodes, it does not use Docker as its container runtime (each node container runs containerd). Therefore it remains a compliant environment.

Another advantage of KinD is that it supports Docker on WSL2 very well. Simply install KinD on WSL2 and start Docker. This blog post contains the steps required to install KinD vs Minikube on WSL2. There is a comparison table in the conclusion section that highlights the fact that it is much easier to install KinD with WSL2 than to install Minikube.

However, there are currently some known limitations with Docker Desktop for Windows (including on WSL2). One is the absence of the docker0 bridge, which means that on Windows you cannot route traffic to the containers.

For cluster specification, KinD can configure a cluster declaratively using a YAML file. For example, kind-config.yaml contains the following snippet:

kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
- role: worker
- role: worker
- role: worker
networking:
  disableDefaultCNI: true

We can bring up the cluster with a single command:

kind create cluster --config=kind-config.yaml

The command will also configure the kubectl context, so we can check the nodes with kubectl commands. The file is in my real-quicK-cluster repo.
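Checking the nodes can be sketched as below. Because the snippet above sets disableDefaultCNI to true, the nodes are expected to stay NotReady until a CNI plugin is installed, so a quick filter for such nodes is handy. The helper name not_ready_nodes is made up for this example.

```shell
# Read `kubectl get nodes --no-headers` output on stdin and print the
# names of nodes whose STATUS does not start with "Ready".
not_ready_nodes() {
  awk '$2 !~ /^Ready/ { print $1 }'
}

# Usage (not executed here; "kind-kind" is the context kind creates for
# a cluster with the default name "kind"):
#   kubectl --context kind-kind get nodes --no-headers | not_ready_nodes
```

An empty result means every node reports Ready.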

Conclusion

After reviewing the technologies that back a multi-node Kubernetes cluster for my role, I find that Minikube with hyperkit is my favourite for MacOS. On WSL2, I prefer to use KinD. Since I do not use a native Windows environment or Ubuntu on my laptop, I cannot make firm recommendations there. However, I would start with Minikube (with hyperv or kvm2 as the driver).

Update July 2022: When the test workload involves persistent storage, KinD is a better choice. When the test workload involves a load balancer, Minikube is a better choice.

As to the storage provisioner, Minikube with the storage-provisioner addon uses k8s.io/minikube-hostpath, while KinD uses rancher.io/local-path. When I had to test a workload with persistent storage (e.g. PostgreSQL with Crunchy PGO), I realized that Minikube has permission issues with persistent volumes, as discussed here as an issue with multiple nodes. The issue has been open since Aug 2021.

For load balancers, Minikube has metallb as an addon, and I can conveniently configure it within a bash script. With KinD, I’d have to configure it in a few steps with both kubectl and Docker CLI commands, and I was not able to connect to the load balancer by IP even after following the steps. So I tend to just use Minikube to test workloads requiring a load balancer or service mesh.
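As a rough sketch of the scripted part, the helper below derives an address range for MetalLB from the cluster IP. It assumes the Minikube nodes sit on a /24 network; the function name metallb_range and the default 200 to 250 host range are arbitrary choices for this example, not my actual script.

```shell
# Derive a MetalLB address range from a node IP, assuming a /24 network.
# metallb_range and the 200-250 host range are choices for this sketch.
metallb_range() {
  ip=$1
  first=${2:-200}
  last=${3:-250}
  prefix=${ip%.*}   # drop the last octet, e.g. 192.168.64.5 -> 192.168.64
  printf '%s.%s-%s.%s\n' "$prefix" "$first" "$prefix" "$last"
}

# Usage (not executed here):
#   minikube addons enable metallb
#   metallb_range "$(minikube ip)"
```

The start and end of the printed range are the values to supply when configuring the addon (for example with “minikube addons configure metallb”). Make sure they do not collide with addresses already in use on that network.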

I find myself switching between Minikube and KinD on my MacBook depending on the test workload.