Background

In January, I wrote about FluxCD and adopted it in the Korthweb project. I like the simple design of FluxCD, and I am comfortable working from the command line without a web UI. Half a year later, I am revisiting this choice with ArgoCD in mind.

After reading numerous recent posts that compare the two (such as this one from The New Stack), I started to give more thought to ArgoCD, for a couple of reasons. First, ArgoCD has more contributing companies and public references. Red Hat adopted Argo CD as the underlying technology for OpenShift GitOps (and Tekton for pipelines). Second, ArgoCD has a mature UI. In corporate collaboration, especially with those not well versed in the command line, a UI is extremely helpful. Bundled with the UI, Argo CD also uses its own RBAC, independent of Kubernetes RBAC, which makes it a great choice for continuous deployment of enterprise applications.

User Interface

You can choose to install just the core components, without the UI, SSO and multi-cluster features. To me, that does not make sense, because those features are exactly the reason to choose Argo CD. Argo CD also has an eponymous CLI tool, argocd, similar to flux for Flux CD. Since Argo CD uses its own RBAC independent of Kubernetes RBAC, it has its own credentials. The default username is admin, and the initial password needs to be retrieved from a Kubernetes Secret. In the tutorial we use this user’s credentials to interact with the target cluster. This is different from Flux, where the client simply uses the kubectl configuration.

In the “Getting Started” guide, we create a namespace “argocd” and install Argo CD with manifests. Alternatively, we can use a CLI tool to install it. With Argo CD, we can consider hosting it on a different port from the workload’s port, such as 8443. This port serves as a “GitOps management port”, separate from the application port (e.g. 443) used by business workloads. Just like any application on Kubernetes, we usually need to configure an Ingress on the management port.

In the argocd namespace, we can see a few services. The application controller is the main controller that reconciles the actual state with the desired state, while the ApplicationSet controller manages ApplicationSet resources. There is also a Redis server, which Argo CD uses as a cache. Another service to note is the Dex server, indicating that the SSO capability is provided by the Dex project.

Features

FluxCD uses a CRD named Kustomization, with kustomize.toolkit.fluxcd.io/v1beta2 as its version. This name is confusing, because it is easily mistaken for the Kustomization file of the Kustomize tool itself. Luckily, in ArgoCD, the CRDs are Application and ApplicationSet (both with the argoproj.io/v1alpha1 version). The controllers monitor CRs created from these CRDs. ArgoCD defines an Application as a group of Kubernetes resources as defined by a manifest. An Application resource deploys resources from a single Git repository to a single destination cluster/namespace. ApplicationSet, on the other hand, uses templated automation to create, modify, and manage multiple Argo CD applications at once. The ApplicationSet controller monitors ApplicationSet resources. We can define a template within the declaration of an ApplicationSet, and the template allows for parameter substitution.
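To make the templating concrete, here is a minimal sketch of an ApplicationSet that uses a list generator to create one Application per element. The environment names (dev, staging), the target namespaces and the file name guestbook-appset.yaml are assumptions for illustration; the repository and guestbook path are the ones used in the example later in this post.

```shell
# Write an ApplicationSet manifest to a file. The quoted 'EOF' stops the
# shell from expanding anything inside the manifest.
cat > guestbook-appset.yaml <<'EOF'
apiVersion: argoproj.io/v1alpha1
kind: ApplicationSet
metadata:
  name: guestbook
  namespace: argocd
spec:
  generators:
    # The list generator yields one parameter set per element.
    - list:
        elements:
          - env: dev        # hypothetical environment name
          - env: staging    # hypothetical environment name
  template:
    metadata:
      # {{env}} is substituted by the ApplicationSet controller.
      name: 'guestbook-{{env}}'
    spec:
      project: default
      source:
        repoURL: https://github.com/argoproj/argocd-example-apps.git
        targetRevision: HEAD
        path: guestbook
      destination:
        server: https://kubernetes.default.svc
        namespace: 'guestbook-{{env}}'
      syncPolicy:
        syncOptions:
          - CreateNamespace=true   # create the target namespace on sync
EOF

# Apply it once Argo CD is installed (requires cluster access):
# kubectl apply -f guestbook-appset.yaml
```

With this in place, the controller should generate two Applications, guestbook-dev and guestbook-staging, each of which can be synced like any hand-written Application.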

Another key capability for a GitOps utility is support for multiple templating tools. FluxCD supports Helm and Kustomize. So does Argo CD. In addition, ArgoCD also supports Jsonnet (ksonnet is not supported anymore). Jsonnet is a templating tool with many useful operators. Kubectl can directly consume Jsonnet’s JSON output as if it were YAML. In FluxCD, there are different CRDs for Helm and Kustomize (HelmRelease and Kustomization). In ArgoCD, the Application CRD covers Helm, Kustomize, and Jsonnet by embedding them as attributes in the declaration. This makes it even simpler than Flux CD.
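As a rough illustration of tool settings living inside the Application itself, the sketch below declares an Application whose source is a Helm chart directory. The helm-guestbook path in the example repository and the replicaCount parameter are assumptions about that chart, and the file name is arbitrary.

```shell
# Write an Application manifest whose source carries Helm-specific attributes.
cat > helm-guestbook-app.yaml <<'EOF'
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: helm-guestbook
  namespace: argocd
spec:
  project: default
  source:
    repoURL: https://github.com/argoproj/argocd-example-apps.git
    targetRevision: HEAD
    path: helm-guestbook       # assumed chart directory in the example repo
    # Tool-specific settings are attributes of the source:
    helm:
      parameters:
        - name: replicaCount   # assumed to be a value exposed by the chart
          value: "2"
    # For a Kustomize directory the equivalent block would look like:
    # kustomize:
    #   namePrefix: demo-
  destination:
    server: https://kubernetes.default.svc
    namespace: default
EOF

# Apply it once Argo CD is installed (requires cluster access):
# kubectl apply -f helm-guestbook-app.yaml
```

The same resource kind is used whichever tool renders the manifests; only the block under source changes.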

Secret Management

It’s a big no-no to put secrets in a code repository. Therefore any GitOps solution must solve the problem of secret logistics. What baffles me is that ArgoCD remains unopinionated on this matter. There appears to be some context for this stance. As a result, ArgoCD only points to a few third-party secret management solutions.

Secret logistics is a tricky problem in a GitOps workflow. One of the techniques is Sealed Secrets. In the repo we store the secret encrypted with a public key. The controller keeps the private key and decrypts the secret at the time of deployment. Some engineers don’t find it practical to operate, as explained in this post and this post.

An alternative is to use an external secrets operator. Such operators can sync a secret from an external secret store such as HashiCorp Vault, AWS Secrets Manager, Azure Key Vault, etc. This is much neater because we only store a reference to the secret in the repository. However, when the cluster accesses the external secret store, it still requires either a secret or a permission.
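To show what "only a reference" looks like, here is a minimal sketch that assumes the External Secrets Operator and its ExternalSecret resource. The API version shown depends on the operator release installed, and every name in it (db-credentials, vault-backend, app/db) is hypothetical.

```shell
# Write an ExternalSecret manifest: it holds a pointer to the secret,
# never the secret value itself, so it is safe to commit.
cat > db-external-secret.yaml <<'EOF'
apiVersion: external-secrets.io/v1beta1   # assumed; check your operator version
kind: ExternalSecret
metadata:
  name: db-credentials          # hypothetical name
  namespace: default
spec:
  refreshInterval: 1h           # how often to re-sync from the store
  secretStoreRef:
    kind: SecretStore
    name: vault-backend         # hypothetical SecretStore defined elsewhere
  target:
    name: db-credentials        # Kubernetes Secret the operator creates
  data:
    - secretKey: password       # key in the generated Secret
      remoteRef:
        key: app/db             # hypothetical path in the external store
        property: password
EOF

# Apply it once the operator and a SecretStore exist (requires cluster access):
# kubectl apply -f db-external-secret.yaml
```

The SecretStore referenced here is where the remaining credential or permission lives, which is the residual bootstrap problem mentioned above.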

Another technique that is drawing attention is using sops for secrets and kustomize-sops to integrate with ArgoCD. Mozilla’s sops (Secrets OPerationS) project releases a binary utility called sops to encrypt and decrypt YAML manifests. It can encrypt only the values in a YAML manifest and not the attributes (keys). We use sops to encrypt YAML files in order to store them in a Git repo. We also use sops to decrypt the YAML right before we deploy it with kubectl. The keys for encryption and decryption can be managed with Azure Key Vault, AWS KMS, GCP KMS, or even age and PGP. Here is a simple tutorial.
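The workflow can be sketched roughly as follows, assuming an age key pair. The .sops.yaml rule, the file names, the secret contents and the placeholder recipient are illustrative assumptions; the sops commands are left as comments because they need the sops binary and a real key.

```shell
# A plain Secret manifest that must never be committed as-is.
cat > secret.yaml <<'EOF'
apiVersion: v1
kind: Secret
metadata:
  name: demo-secret             # hypothetical name
stringData:
  password: changeme            # hypothetical value
EOF

# A sops config: encrypt only the values under data/stringData,
# leaving the other keys readable in Git.
cat > .sops.yaml <<'EOF'
creation_rules:
  - path_regex: secret\.yaml$
    encrypted_regex: ^(data|stringData)$
    age: "REPLACE_WITH_AGE_PUBLIC_KEY"   # placeholder recipient
EOF

# Encrypt before committing (requires sops and a real age recipient):
# sops --encrypt secret.yaml > secret.enc.yaml
# Decrypt right before deploying (requires the matching private key):
# sops --decrypt secret.enc.yaml | kubectl apply -f -
```

Only secret.enc.yaml and .sops.yaml would go into the repository; the plain secret.yaml stays out of version control.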

Example

With a few commands we can configure a minimal example. I first use KinD or Minikube to create a cluster, following the steps in the real-quicK-cluster repo. Then we install Argo CD and configure an application.

$ kubectl create namespace argocd

$ kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml

$ kubectl -n argocd get secret argocd-initial-admin-secret -o jsonpath="{.data.password}" | base64 -d; echo

$ kubectl port-forward svc/argocd-server -n argocd 8443:443

From macOS, I can now browse to https://localhost:8443/ for the ArgoCD UI, and log in as the admin user with the password printed above. We can then use the UI to create an Application. Alternatively, we can configure an application using the CLI utility:

$ brew install argocd

$ argocd login localhost:8443

$ argocd app create guestbook --repo https://github.com/argoproj/argocd-example-apps.git --path guestbook --dest-server https://kubernetes.default.svc --dest-namespace default

$ argocd app sync guestbook

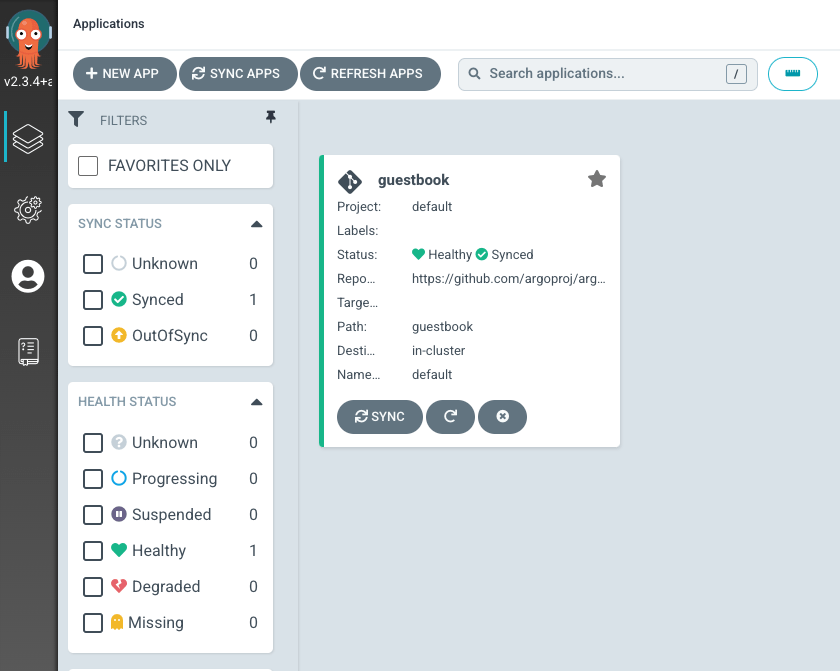

In the GUI we should see that the application is synced.

On the left panel, click on the gear icon for settings. Under Clusters, we can see the cluster URL, which we used as the dest-server value above. We can add more clusters here.
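The argocd app create command above also has a declarative form. The sketch below mirrors its flags field by field; the file name is an assumption, and project: default refers to the project that Argo CD provides out of the box.

```shell
# Write an Application manifest equivalent to the `argocd app create` flags:
#   --repo -> source.repoURL, --path -> source.path,
#   --dest-server -> destination.server, --dest-namespace -> destination.namespace
cat > guestbook-app.yaml <<'EOF'
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: guestbook
  namespace: argocd
spec:
  project: default
  source:
    repoURL: https://github.com/argoproj/argocd-example-apps.git
    targetRevision: HEAD
    path: guestbook
  destination:
    server: https://kubernetes.default.svc
    namespace: default
EOF

# Apply it in place of `argocd app create` (requires cluster access):
# kubectl apply -f guestbook-app.yaml
```

Because the manifest is just another Kubernetes resource, it can itself be stored in Git, and the application can then be synced with argocd app sync guestbook as before.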

Pick the right open-source project

My experience with ArgoCD and FluxCD raised an issue worth reflecting on. For the same problem, there are often multiple open-source projects to choose from. What would be a good methodology for choosing the right open-source technology? I would first look at the following factors:

- History

- Community size

- License model

- Enterprise support

- Governing model

The history and community size give a good idea of the technical maturity level. The license model has legal implications as to where you can use the technology. The license model can change even after the project has been launched, and the project can even cease to be open source. One example is the Elasticsearch license change in January 2021, from the very permissive Apache License 2.0 (ALv2) to a dual license under the Elastic License (by Elastic) and the Server Side Public License (SSPL, introduced by MongoDB). Neither license is approved by the OSI as compliant with the Open Source Definition. For enterprises, apart from licensing, I’d also consider whether any organization provides commercial support for the open-source product.

What is often neglected is the governing model. The governing model determines how the product decisions that shape the future of the project are made. For example, CNCF is a vendor-neutral foundation for cloud computing, and if an organization contributes a project to CNCF, that project has to align with CNCF’s governing practices. Google also introduced the Open Usage Commons (OUC) as a governing model.

Another good way is to just follow Red Hat’s choice. Red Hat built its business model around open-source technologies. They have to pick open-source projects to provide enterprise-level support for, so presumably they have a rigorous selection process that one can piggyback on. Products supported by Red Hat are composed of open-source components often vetted by multiple upstream communities, and changes made to these components are pushed to their respective upstream projects, often before they land in a supported product from Red Hat. Here are more insights into this business model.

Had I gone with the Red Hat rule, I would have picked Argo CD in the first place because that is the base of OpenShift GitOps!

Verdict

ArgoCD and FluxCD are tools for GitOps. However, just using a GitOps tool does not guarantee that all workloads are deployed continuously. First, these GitOps controllers cannot replace Operators, which have domain knowledge about how to orchestrate their workloads. Second, resources not directly managed by these controllers (such as those installed by Helm) are not monitored continuously. For continuous deployment over all workloads, I recommend using operators to install third-party applications, and using Argo CD or Flux CD to manage the operators.

GitOps makes use of the controller pattern to manage deployment in a continuous manner. Controllers work closely with CRDs. ArgoCD uses a similar set of CRDs to manage continuous deployment. What sets it apart is the user-friendly web UI, the IAM integration and the multi-cluster capabilities. These capabilities make ArgoCD well suited to an enterprise IT ecosystem.