In the last two posts, I covered the native storage options on Azure Kubernetes Service (AKS), as well as Portworx as an example of a proprietary Software Defined Storage (SDS) solution. There are also a number of open-source SDS alternatives. Ceph's history goes back nearly a decade before containerization, and it is one of the most widely adopted open-source storage platforms. In this post, we explore Ceph as an open-source storage solution on Azure Kubernetes.

Ceph by Rook

Ceph is an open-source SDS platform for distributed storage on a cluster, and it provides object, block and file storage. Installing Ceph can be complex, especially on Kubernetes. Rook, a graduated CNCF project, orchestrates storage platforms on Kubernetes. Rook by itself is not an SDS; it supports:

- Ceph: configures a Ceph cluster. Think of this as the equivalent of cephadm on Kubernetes.

- NFS: configures an NFS server. Think of this as the equivalent of the nfsd daemon on Kubernetes.

- Cassandra: an operator that configures a Cassandra database cluster. It is now deprecated.

In this post we use Rook Ceph, which I also refer to as Ceph by Rook. Rook's main contribution is that it simplifies installation: deploying Ceph becomes a matter of declaring custom resources defined by CRDs. Here are some high-level CRDs to know:

- CephCluster: creates a Ceph storage cluster

- CephBlockPool: represents a block pool

- CephFilesystem: represents a file system

- CephObjectStore: represents an object store

- CephNFS: spins up an NFS-Ganesha server to export NFS shares of a CephFilesystem or CephObjectStore.

As is typical of the Kubernetes controller pattern, Ceph by Rook needs an operator in addition to the custom resources. We can declare both with YAML manifests, which tend to be tediously long, or we can install both with Helm by providing a values file. Now we will install Ceph on AKS.
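To make the controller pattern a little more concrete, here is a toy reconcile step in Python. It is not Rook's code: the `reconcile` function, the component names and the action strings are hypothetical stand-ins for what an operator reads from its custom resources (desired state) and from the cluster (observed state).

```python
from typing import Dict, List


def reconcile(desired: Dict[str, int], observed: Dict[str, int]) -> List[str]:
    """Compare declared replica counts with what is running and list corrective actions.

    Keys are hypothetical component names (e.g. "mon", "osd"); values are replica counts.
    """
    actions: List[str] = []
    for name, want in sorted(desired.items()):
        have = observed.get(name, 0)
        if have < want:
            actions.append(f"create {want - have} {name}")
        elif have > want:
            actions.append(f"delete {have - want} {name}")
    # Components that are running but no longer declared are removed entirely.
    for name, have in sorted(observed.items()):
        if name not in desired and have > 0:
            actions.append(f"delete {have} {name}")
    return actions


desired = {"mon": 3, "mgr": 1, "osd": 3}   # what the custom resource declares
observed = {"mon": 1, "osd": 3, "rgw": 1}  # what is currently running
print(reconcile(desired, observed))
# ['create 1 mgr', 'create 2 mon', 'delete 1 rgw']
```

A real operator repeats this kind of comparison whenever the watched resources change, so we only declare the target state and let the operator converge toward it.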

Install Ceph Operator on AKS

The steps are based on two related posts (here and here). However, I've incorporated the cluster configuration into the Azure directory of the cloudkube project, a modular Terraform template that configures an AKS cluster and facilitates storage configuration. The node pools and instance sizes are chosen to be just enough to run a Ceph proof-of-concept (POC) cluster at minimal cost. One of the node pools is tainted with storage-node, as if the following command had been run:

```shell
kubectl taint nodes my-node-pool-node-name storage-node=true:NoSchedule
```

You only need to run the command above if you choose not to use the cloudkube template. The taint ensures that only Pods with a matching toleration (same key and effect) can be scheduled onto those nodes.
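The matching rule between a taint and a toleration is small enough to sketch. The Python below is a simplified model of the rules described in the Kubernetes taints and tolerations documentation; the `Taint` and `Toleration` classes and the `can_schedule` helper are hypothetical, not the Kubernetes client types, and it ignores details such as `tolerationSeconds`.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Taint:
    key: str
    value: str
    effect: str  # "NoSchedule", "PreferNoSchedule" or "NoExecute"


@dataclass
class Toleration:
    key: str = ""            # empty key with operator "Exists" tolerates every taint
    operator: str = "Equal"  # Kubernetes defaults to "Equal" when operator is omitted
    value: str = ""
    effect: str = ""         # empty effect matches every effect


def tolerates(tol: Toleration, taint: Taint) -> bool:
    """Simplified Kubernetes toleration-matching rule."""
    if tol.effect and tol.effect != taint.effect:
        return False
    if tol.operator == "Exists":
        # Exists ignores the value; an empty key matches any taint key.
        return tol.key == "" or tol.key == taint.key
    return tol.key == taint.key and tol.value == taint.value  # operator "Equal"


def can_schedule(tolerations: List[Toleration], taints: List[Taint]) -> bool:
    """Every NoSchedule/NoExecute taint on the node must be tolerated by the Pod."""
    hard = [t for t in taints if t.effect in ("NoSchedule", "NoExecute")]
    return all(any(tolerates(tol, t) for tol in tolerations) for t in hard)


storage_taint = Taint(key="storage-node", value="true", effect="NoSchedule")
csi_toleration = Toleration(key="storage-node", operator="Exists", effect="NoSchedule")
print(can_schedule([csi_toleration], [storage_taint]))  # True
print(can_schedule([], [storage_taint]))                # False
```

This is why the values files below only need `key: storage-node` with `operator: Exists`: the taint's value (`true`) is ignored, and a toleration without an `effect` matches the `NoSchedule` effect as well.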

We use Helm to install the Rook operator. We need a values file (e.g. rook-ceph-operator-values.yaml) with the following content:

```yaml
# https://github.com/rook/rook/blob/master/Documentation/Helm-Charts/operator-chart.md
crds:
  enabled: true
csi:
  provisionerTolerations:
    - effect: NoSchedule
      key: storage-node
      operator: Exists
  pluginTolerations:
    - effect: NoSchedule
      key: storage-node
      operator: Exists
agent:
  # AKS: https://rook.github.io/docs/rook/v1.7/flexvolume.html#azure-aks
  flexVolumeDirPath: "/etc/kubernetes/volumeplugins"
```

Then we install the operator with Helm:

```shell
helm install rook-ceph-operator rook-ceph \
  --namespace rook-ceph --create-namespace \
  --version v1.9.6 \
  --repo https://charts.rook.io/release/ \
  --values rook-ceph-operator-values.yaml

kubectl -n rook-ceph get po -l app=rook-ceph-operator
```

After installing the operator, we check the Pod status to make sure it is running. Then we can install the actual Ceph cluster in one of two ways: declare a CephCluster custom resource ourselves, or use Helm again to declare it for us. The Helm chart provides many useful default values and saves us from editing a large YAML manifest.
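The reason a short values file is enough is that Helm layers it over the chart's defaults. Below is a minimal Python sketch of that layering, assuming Helm's documented behavior: nested maps are merged, lists and scalars are replaced outright, and an explicit `null` deletes a default key. The `coalesce` function and the abbreviated defaults are illustrative, not Helm's implementation or the chart's real values.yaml.

```python
import copy
from typing import Any, Dict


def coalesce(defaults: Dict[str, Any], overrides: Dict[str, Any]) -> Dict[str, Any]:
    """Simplified model of layering a values file over chart defaults.

    Nested maps merge key by key, lists and scalars replace the default outright,
    and an explicit None (YAML null) deletes the default key.
    """
    result = copy.deepcopy(defaults)
    for key, value in overrides.items():
        if value is None:
            result.pop(key, None)
        elif isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = coalesce(result[key], value)
        else:
            result[key] = copy.deepcopy(value)
    return result


# Abbreviated, illustrative defaults; not the chart's real values.yaml.
chart_defaults = {
    "toolbox": {"enabled": False},
    "cephClusterSpec": {"dashboard": {"enabled": False, "ssl": True}},
    "cephObjectStores": [{"name": "ceph-objectstore"}],
}
user_values = {
    "toolbox": {"enabled": True},
    "cephClusterSpec": {"dashboard": {"enabled": True}},
    "cephObjectStores": [],
}
merged = coalesce(chart_defaults, user_values)
print(merged["cephClusterSpec"]["dashboard"])  # {'enabled': True, 'ssl': True}
print(merged["cephObjectStores"])              # []
```

Keys we do not mention (such as `ssl` here) keep their defaults, while an empty list is enough to switch off a list-valued default.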

Install Ceph CR on AKS

We use Helm to create the CephCluster custom resource. We create a values file (e.g. rook-ceph-cluster-values.yaml) with the following content:

```yaml
# https://github.com/rook/rook/blob/master/Documentation/Helm-Charts/ceph-cluster-chart.md
operatorNamespace: rook-ceph
toolbox:
  enabled: true
cephObjectStores: [] # by default a cephObjectStore will be created; an empty list disables it
#cephBlockPools: # by default a cephBlockPool will also be created with default values
#cephFileSystems: # by default a cephFileSystem will also be created with default values
cephClusterSpec:
  mon:
    count: 3
    volumeClaimTemplate:
      spec:
        storageClassName: managed-premium
        resources:
          requests:
            storage: 10Gi
  resources:
    limits:
      cpu: "500m"
      memory: "1Gi"
    requests:
      cpu: "100m"
      memory: "500Mi"
  dashboard:
    enabled: true
  storage:
    storageClassDeviceSets:
      - name: set1
        # The number of OSDs to create from this device set
        count: 3
        # IMPORTANT: If volumes specified by the storageClassName are not portable across nodes
        # this needs to be set to false. For example, if using the local storage provisioner
        # this should be false.
        portable: false
        # Since the OSDs could end up on any node, an effort needs to be made to spread the OSDs
        # across nodes as much as possible. Unfortunately the pod anti-affinity breaks down
        # as soon as you have more than one OSD per node. The topology spread constraints will
        # give us an even spread on K8s 1.18 or newer.
        placement:
          topologySpreadConstraints:
            - maxSkew: 1
              topologyKey: kubernetes.io/hostname
              whenUnsatisfiable: ScheduleAnyway
              labelSelector:
                matchExpressions:
                  - key: app
                    operator: In
                    values:
                      - rook-ceph-osd
          tolerations:
            - key: storage-node
              operator: Exists
        preparePlacement:
          tolerations:
            - key: storage-node
              operator: Exists
          nodeAffinity:
            requiredDuringSchedulingIgnoredDuringExecution:
              nodeSelectorTerms:
                - matchExpressions:
                    - key: agentpool
                      operator: In
                      values:
                        - storagenp
          topologySpreadConstraints:
            - maxSkew: 1
              # IMPORTANT: If you don't have zone labels, change this to another key such as kubernetes.io/hostname
              topologyKey: topology.kubernetes.io/zone
              whenUnsatisfiable: DoNotSchedule
              labelSelector:
                matchExpressions:
                  - key: app
                    operator: In
                    values:
                      - rook-ceph-osd-prepare
        resources:
          limits:
            cpu: "500m"
            memory: "4Gi"
          requests:
            cpu: "500m"
            memory: "2Gi"
        volumeClaimTemplates:
          - metadata:
              name: data
            spec:
              resources:
                requests:
                  storage: 100Gi
              storageClassName: managed-premium
              volumeMode: Block
              accessModes:
                - ReadWriteOnce
```
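The `maxSkew: 1` constraints above limit how uneven the Pod counts across topology domains may become. As a rough sketch, assuming the skew definition from the Kubernetes topology spread documentation (Pods in the candidate domain after placement, minus the global minimum across domains), the Python below places Pods one at a time under `whenUnsatisfiable: DoNotSchedule`. The zone names are hypothetical, and the real scheduler scores all allowed nodes instead of taking the first one; `ScheduleAnyway` (used for the OSD Pods) only turns the same calculation into a scoring preference, which this sketch does not model.

```python
from typing import Dict, List


def allowed_domains(counts: Dict[str, int], max_skew: int) -> List[str]:
    """Domains where one more matching Pod keeps the skew within max_skew (>= 1).

    counts maps each topology domain (a node for kubernetes.io/hostname, a zone
    for topology.kubernetes.io/zone) to the number of Pods already matching the
    constraint's labelSelector there.
    """
    global_min = min(counts.values())
    return sorted(d for d, n in counts.items() if n + 1 - global_min <= max_skew)


def place(counts: Dict[str, int], pods: int, max_skew: int = 1) -> Dict[str, int]:
    """Place Pods one at a time, always picking the first allowed domain."""
    counts = dict(counts)
    for _ in range(pods):
        counts[allowed_domains(counts, max_skew)[0]] += 1
    return counts


zones = {"zone-1": 0, "zone-2": 0, "zone-3": 0}  # hypothetical zone names
print(place(zones, 3))  # {'zone-1': 1, 'zone-2': 1, 'zone-3': 1}
print(allowed_domains({"zone-1": 1, "zone-2": 0, "zone-3": 0}, 1))  # ['zone-2', 'zone-3']
```

With three OSD prepare Pods and three zones, `maxSkew: 1` forces one Pod per zone, which is the even spread the comments in the values file are after.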

During cluster provisioning, Rook runs a number of OSD prepare Pods. We want those Pods to run on nodes with the label agentpool=storagenp, which is what the nodeAffinity under preparePlacement requires. In real deployments, we need to decide where each workload runs by restricting which nodes can schedule certain types of workload.
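A toleration only allows a Pod onto the tainted nodes; it does not keep the Pod away from other nodes. The pinning comes from the nodeAffinity rule. Here is a simplified Python model of how `nodeSelectorTerms` are evaluated, assuming the semantics in the Kubernetes API reference (terms are ORed, expressions within a term are ANDed). It covers only the `In`, `NotIn`, `Exists` and `DoesNotExist` operators, ignores `matchFields`, and the `systemnp` pool name is hypothetical.

```python
from typing import Any, Dict, List


def expression_matches(labels: Dict[str, str], expr: Dict[str, Any]) -> bool:
    """Evaluate one matchExpressions entry against a node's labels."""
    key, op = expr["key"], expr["operator"]
    values = expr.get("values", [])
    if op == "In":
        return key in labels and labels[key] in values
    if op == "NotIn":
        return key not in labels or labels[key] not in values
    if op == "Exists":
        return key in labels
    if op == "DoesNotExist":
        return key not in labels
    raise ValueError(f"operator {op!r} is not covered by this sketch")


def node_matches(labels: Dict[str, str], node_selector_terms: List[Dict[str, Any]]) -> bool:
    """Terms are ORed; the expressions inside a single term are ANDed."""
    for term in node_selector_terms:
        exprs = term.get("matchExpressions", [])
        # An empty term matches no nodes.
        if exprs and all(expression_matches(labels, e) for e in exprs):
            return True
    return False


prepare_terms = [
    {"matchExpressions": [{"key": "agentpool", "operator": "In", "values": ["storagenp"]}]}
]
print(node_matches({"agentpool": "storagenp"}, prepare_terms))  # True
print(node_matches({"agentpool": "systemnp"}, prepare_terms))   # False
```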

Then we can install the cluster using Helm:

```shell
helm install rook-ceph-cluster rook-ceph-cluster \
  --namespace rook-ceph --create-namespace \
  --version v1.9.6 \
  --repo https://charts.rook.io/release/ \
  --values rook-ceph-cluster-values.yaml
```

After running helm install, it may take as long as 15 minutes for all resources to settle. Watch the Pod status in the rook-ceph namespace. At the end, make sure that the cluster was created successfully:

```console
kubeadmin@pro-sturgeon-bastion-host:~$ kubectl -n rook-ceph get CephCluster
NAME        DATADIRHOSTPATH   MONCOUNT   AGE   PHASE   MESSAGE                        HEALTH      EXTERNAL
rook-ceph   /var/lib/rook     3          15m   Ready   Cluster created successfully   HEALTH_OK
kubeadmin@pro-sturgeon-bastion-host:~$ kubectl -n rook-ceph get cephBlockPools
NAME             PHASE
ceph-blockpool   Ready
kubeadmin@pro-sturgeon-bastion-host:~$ kubectl -n rook-ceph get cephFileSystems
NAME              ACTIVEMDS   AGE   PHASE
ceph-filesystem   1           20m   Ready
```

In my case it took 15 minutes for the cluster to report that it was created successfully. Notice that two storage classes were also created as part of the install. However, no storage class or CephObjectStore resource was created for object storage, because we explicitly disabled it in the Helm values file by setting cephObjectStores to an empty list.

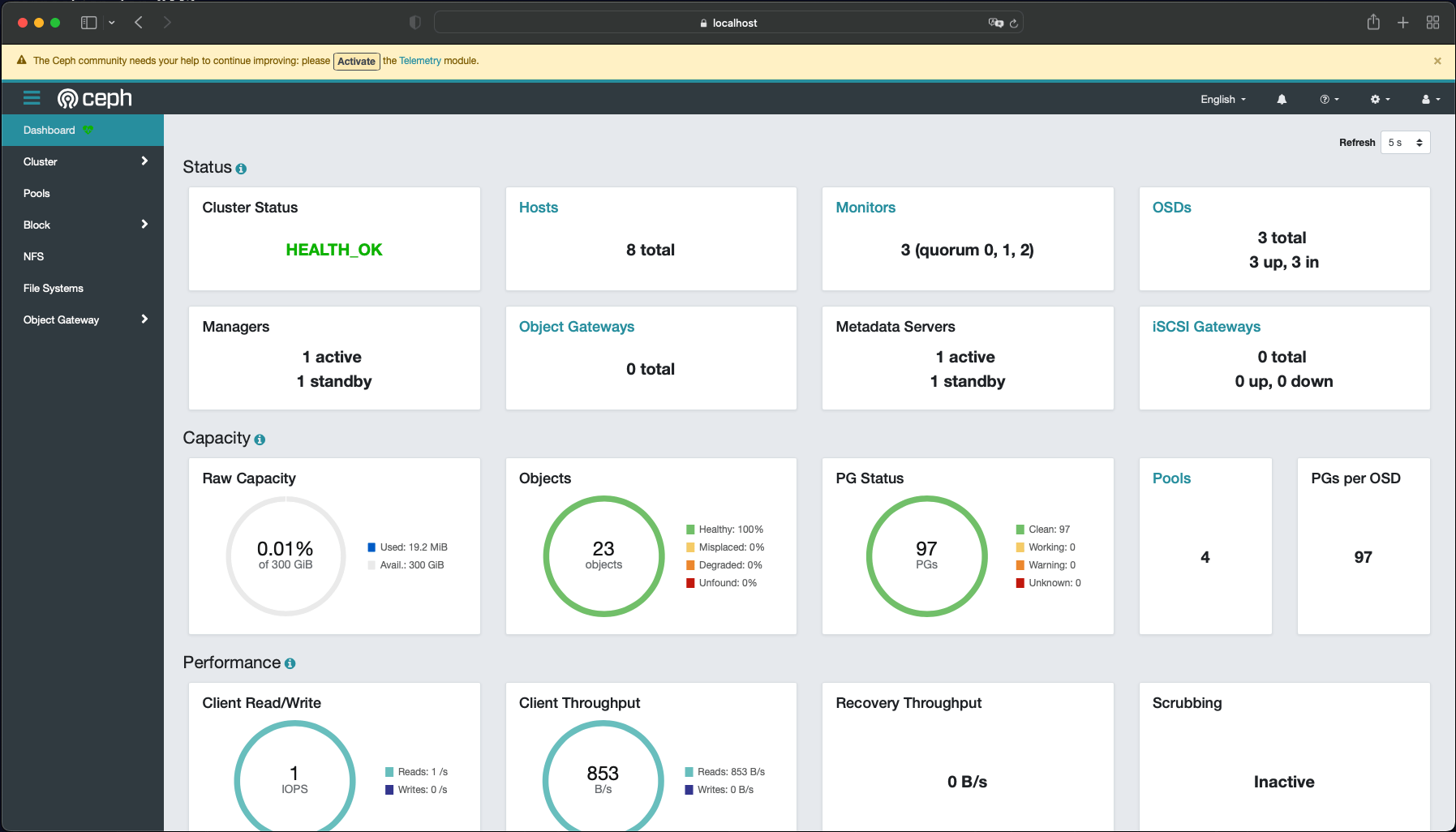
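Both storage classes draw from the same three OSDs, so it helps to estimate how much space they can actually hand out. The values file gives 3 OSDs (`count: 3`) on 100Gi PVCs each. The arithmetic below assumes 3-way replication, which I believe is the chart's default for the block pool and the file system pools (`ceph osd pool ls detail` in the toolbox Pod shows the real size), and uses Ceph's default nearfull ratio of 0.85. The `usable_gib` helper is hypothetical and ignores metadata overhead.

```python
def usable_gib(osd_count: int, osd_size_gib: float, replicas: int, fill_ratio: float = 1.0) -> float:
    """Rough usable capacity of a replicated pool that spans all OSDs.

    Ignores metadata and other overheads; fill_ratio caps how full the OSDs may get.
    """
    raw_gib = osd_count * osd_size_gib
    return raw_gib * fill_ratio / replicas


# From the values file: 3 OSDs (count: 3), each on a 100Gi PVC.
# Assumption: the pools use 3-way replication.
print(f"{usable_gib(3, 100, 3):.1f} GiB")        # 100.0 GiB
print(f"{usable_gib(3, 100, 3, 0.85):.1f} GiB")  # 85.0 GiB, at the default nearfull ratio
```

So 300 GiB of raw managed-premium disk yields roughly 100 GiB of replicated capacity, shared by ceph-block and ceph-filesystem.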

Dashboard

We enabled the dashboard. To expose the dashboard properly, we would need an ingress. For a quick look, port forwarding is enough. First we fetch the admin password for use in the next step. Then we forward the dashboard port to the bastion host:

```shell
kubectl -n rook-ceph get secret rook-ceph-dashboard-password -o jsonpath='{.data.password}' | base64 -d

kubectl -n rook-ceph port-forward svc/rook-ceph-mgr-dashboard 8443:8443
```
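The `base64 -d` at the end of the password command is needed because Kubernetes stores Secret values base64-encoded under `.data`. The equivalent decoding step in Python is sketched below; the `decode_secret_data` helper and the example password are hypothetical.

```python
import base64
from typing import Dict


def decode_secret_data(data: Dict[str, str]) -> Dict[str, str]:
    """Decode the base64-encoded values of a Secret's .data field."""
    return {k: base64.b64decode(v).decode("utf-8") for k, v in data.items()}


# Hypothetical Secret fragment; the real dashboard password will differ.
secret_data = {"password": base64.b64encode(b"example-password").decode("ascii")}
print(decode_secret_data(secret_data))  # {'password': 'example-password'}
```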

Since the bastion host has no GUI, I use port forwarding again from my own MacBook. Start a new terminal and SSH to the bastion host with the -L port-forwarding option:

```shell
ssh -L 8443:localhost:8443 kubeadmin@20.116.132.8
```

The command above assumes the public IP of the bastion host is 20.116.132.8. Then from my MacBook I can browse to https://localhost:8443 (Safari gives me the option to bypass the certificate error). On the web portal, log in with the username admin and the password retrieved above.

Apart from the dashboard, we can also run the ceph admin CLI from the toolbox Pod (enabled with toolbox.enabled in our values file), following these instructions. For monitoring, Ceph by Rook can expose metrics for Prometheus to scrape.

Performance

With the default Ceph configuration on AKS, I ran a quick performance test using kubestr. The results are as follows:

| Storage class | read_iops | write_iops | read_bw | write_bw |
|---|---|---|---|---|
| ceph-block | IOPS=464.507294 BW(KiB/s)=1874 | IOPS=243.296143 BW(KiB/s)=989 | IOPS=509.928162 BW(KiB/s)=65797 | IOPS=248.530762 BW(KiB/s)=32338 |
| ceph-filesystem | IOPS=438.701324 BW(KiB/s)=1770 | IOPS=226.270660 BW(KiB/s)=920 | IOPS=405.936340 BW(KiB/s)=52456 | IOPS=208.869293 BW(KiB/s)=27229 |

These metrics reflect performance under the default configuration and should not be taken as the best performance Ceph can deliver on Azure Kubernetes.
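When reading the table, note that each cell's bandwidth and IOPS are linked by bandwidth = IOPS × block size, so dividing one by the other recovers the block size each test used. The Python below does that with the numbers copied from the table; the helper names are hypothetical. The result is roughly 4 KiB per operation for the iops tests and roughly 128 KiB for the bw tests, which I believe matches the block sizes in kubestr's default fio job (worth confirming in the kubestr source).

```python
import math

# Numbers copied from the kubestr table above: (IOPS, bandwidth in KiB/s).
results = {
    "ceph-block": {
        "read_iops": (464.507294, 1874),
        "write_iops": (243.296143, 989),
        "read_bw": (509.928162, 65797),
        "write_bw": (248.530762, 32338),
    },
    "ceph-filesystem": {
        "read_iops": (438.701324, 1770),
        "write_iops": (226.270660, 920),
        "read_bw": (405.936340, 52456),
        "write_bw": (208.869293, 27229),
    },
}


def implied_block_kib(iops: float, bw_kib_s: float) -> float:
    """Bandwidth = IOPS x block size, so block size = bandwidth / IOPS."""
    return bw_kib_s / iops


def nearest_power_of_two(x: float) -> int:
    """Snap a measured size to the nearest power of two (in KiB)."""
    return 2 ** round(math.log2(x))


for storage_class, tests in results.items():
    for test, (iops, bw) in tests.items():
        bs = implied_block_kib(iops, bw)
        print(f"{storage_class:16} {test:10} {bs:6.1f} KiB/op (~{nearest_power_of_two(bs)} KiB)")
```

In other words, IOPS stay in the same range (roughly 200 to 500) across all four tests; the bw tests reach much higher throughput mainly because each operation moves about 32 times more data.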

Summary

Across this series I discussed three storage options for Azure Kubernetes, but the ideas apply to other Kubernetes platforms hosted on a cloud service provider (CSP). The native storage options have significant limitations: NFS adds latency, and block storage does not address high availability at the storage layer. Portworx, a commercial solution, and LINSTOR fill that gap. Ceph is open source and built on an object store (RADOS), on top of which it provides block and file storage.