I have reviewed some storage technologies on Kubernetes, but they were all for block and file storage. In this post, I will discuss the currently available options for container workloads to use object storage. I will also touch on MinIO as an object storage solution.

Object storage

Block and file storage are more native to the operating system because they present themselves to the OS as an attached block device or file system. In other words, application processes running on the OS can access the storage through a POSIX-compatible path. In contrast, object storage is a REST API service that operates at the application layer of the TCP/IP stack. We can therefore think of object storage as “storage as a web service”.

Object storage can be made very cheap. However, the application protocol may vary depending on the object storage provider. Amazon S3 was a forerunner in the object storage market, and its protocol has emerged as the de facto standard for object storage. If an application is to support only one object storage protocol, it should be S3. Non-S3 object storage services can be fronted with an S3 interface if the provider does not offer one itself. For example, Ceph exposes an S3-compatible API through its object gateway.

Container Object Storage Interface

If we use S3 as the universal object storage protocol, does that also address object storage access for container workloads on Kubernetes? Absolutely. Nonetheless, for a number of reasons, calling a REST API directly from containers is not the best option. From the platform’s perspective, it is the platform that should define how to access object storage, rather than leaving it to an application-layer protocol.

When a pattern (for storage, networking, etc.) turns out to be very common, the platform layer should incorporate it as an infrastructure service, manage it with its own standard, and provide it to applications so that developers can focus on business features. With that vision, the community brought up the Container Object Storage Interface (COSI) initiative. It is currently at a very early stage, but the idea is to commoditize object storage on the Kubernetes platform with a unified interface. For more background about this initiative, refer to the post “Beyond block and file – COSI enables object storage in Kubernetes”.

COSI is the ultimate cloud native solution, but it is still in a pre-alpha phase as of mid 2022. Unfortunately, it is not a recommended solution for any real-life project in 2022, and we are stuck with the unified API approach until COSI matures. The unified API approach is by no means cloud native, but it is mature enough for adoption. The S3 REST API is our friend, regardless of whether the client process runs in a container or not.

Update: on Sept 2, 2022, Kubernetes introduced COSI as an alpha feature.

MinIO Introduction

To use the S3 protocol without using Amazon S3 storage, we can use MinIO to build our own object storage service that serves clients via an S3-compatible REST API. The main developer of the MinIO project is MinIO Inc, a startup from 2014. Having learned the lessons from GlusterFS, the founders and developers made MinIO very simple. MinIO operates in two modes: gateway mode (soon to be legacy) and server mode.

In gateway mode, MinIO acts as a gateway between the client and the destination storage, and does not persist data itself. In the past, the destination storage could be Azure Blob, Google Cloud Storage (GCS) or HDFS. However, support for these backends is now deprecated. The current release (July 2022) only supports S3 and NAS backends. According to MinIO’s blog post from February 2022, the entire MinIO Gateway feature will be removed in August, leaving server mode as the only option for MinIO.

In server mode, the MinIO service persists data itself in a file system (or volume). You specify that file system (or volume) when you launch the server. As one of the quick-start guides shows, we can host a MinIO server using a single executable. For administrative tasks, MinIO has a web console and a client utility called mc.

MinIO Deployment Options

For storage service, there are a number of deployment options:

- SNSD (single-node, single-drive): single MinIO server with a single storage volume or folder.

- SNMD (single-node, multi-drive): single MinIO server with four or more storage volumes.

- MNMD (multi-node, multi-drive, aka distributed): multiple MinIO servers with at least four drives across all servers. This should be considered for production grade configuration.

The deployment options above describe the node and volume topology. Whichever topology we choose, there are also a number of ways to host the MinIO service process: on Linux, Windows, macOS, in a Docker container, or on the Kubernetes platform.

In addition, MinIO Inc ships the software under different business models. For example, there are fully managed applications in Azure Marketplace, AWS Marketplace, and GCP Marketplace, all hosted on virtual machines with extra charges. Clients not willing to pay can host MinIO storage on their own, either on virtual machines or on the managed Kubernetes environment provided by each cloud provider.

MinIO Hosting solutions

MinIO lists these hosting solutions under multi-cloud products. These hosting solutions (or “products” in MinIO’s terms) vary in where the peripheral services and data tiers are hosted. Here is the list of the supported platforms:

To illustrate how these solutions are different, I put some details on a few options together for an incomplete comparison below:

| | Kubernetes | EKS | AKS | GKE |
|---|---|---|---|---|
| Hot Storage | Direct PV (NVMe) | EKS EBS CSI | Azure CSI | GKE Standard SSD |
| Warm Storage | Direct PV (HDD) | S3 IA | Azure BlobStore | GCS |
| Cold Storage | Public Cloud storage | Glacier | Azure Cool Blob | GCS for Data Archiving |
| Encryption | HashiCorp Vault | KMS | Azure Key Vault | Cloud Key Management |
| Observability | Elastic Stack and Grafana | Managed ElasticSearch Prometheus | Azure Monitor | Stack Driver |
| Identity Provider | KeyCloak | LDAP, SSO | Azure Active Directory | GCP Cloud Identity |
| LB and Cert Mgmt | Nginx, Let’s Encrypt | AWS Cert Mgr, ELB | Azure Load Balancer, JetStack, Let’s Encrypt | GCP Cloud LB and Managed Cert |

Note that all of these hosting solutions are based on some flavour of Kubernetes. The hot tier is usually based on the storage options available to the platform. The MinIO service accesses this hot tier via a Kubernetes persistent volume. The warm and cold tiers are backed by different object storage services. Between MinIO and the storage client, the protocol is always the same S3-compatible REST API.

MinIO also has tiering capability. While the hot storage destination has to be either a file system or a Kubernetes persistent volume, remote tiers can be S3, Azure Blob, or GCS. MinIO supports encryption at rest (SSE-KMS, SSE-S3, SSE-C) and in transit (TLS) for security, as well as many other useful features such as object replication, versioning, locking, events, Prometheus metrics, and lifecycle management.

Connect to MinIO server with S3 client

To validate compatibility in both directions, we use the AWS CLI to connect to a MinIO server, similar to this instruction, and then use MinIO’s client utility (mc) to connect to an AWS S3 bucket. To do so, we first install the client and server utilities:

brew install minio/stable/minio

brew install minio/stable/mc

minio --version

mc --version

Then, we start MinIO server and store an object using AWS CLI’s S3 tool. In our working directory, we create a new directory called minio_data and launch MinIO server with it:

mkdir minio_data

minio server minio_data --console-address :9090

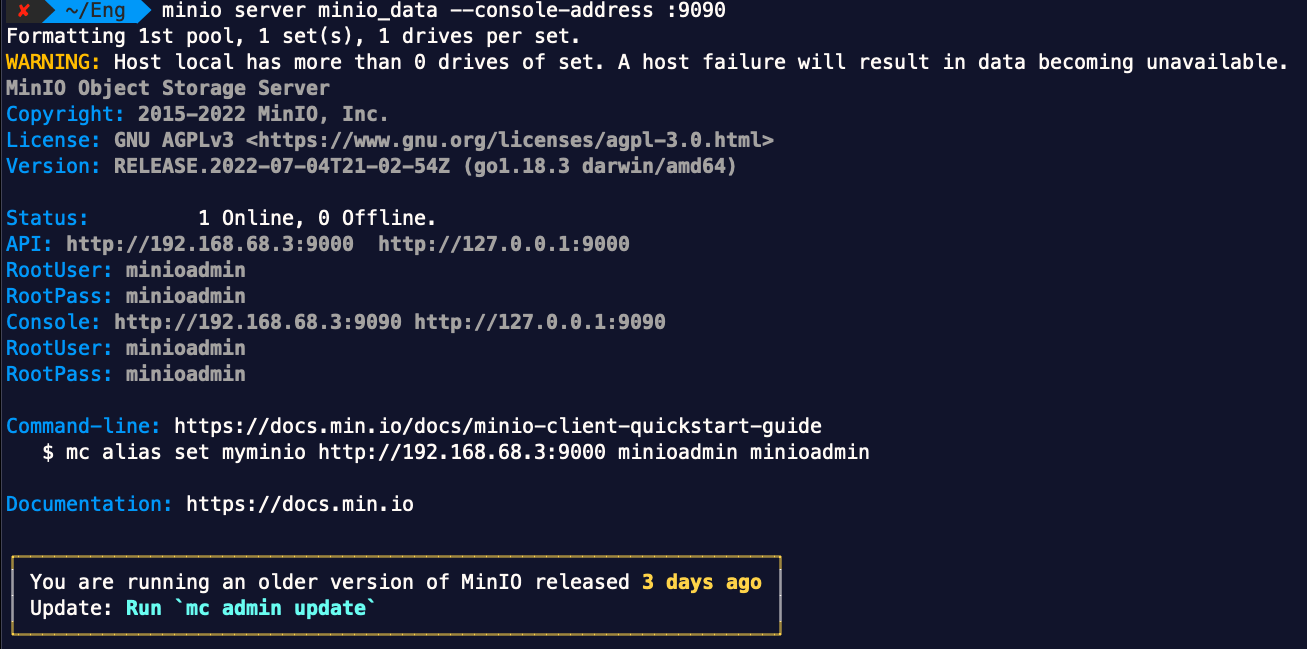

Once the server is up, the screen should display the details, including the portal URL and the default username and password will be used as Access Key ID and Secret Key:

Note that the MinIO service does NOT have TLS enabled by default, on the console or API service. At this point, we can browse to the console web page using the given credential. Then, we can configure AWS CLI with a new profile just to act as a client to communicate with the MinIO server:

$ aws configure --profile minio-cli

AWS Access Key ID [None]: minioadmin

AWS Secret Access Key [None]: minioadmin

Default region name [None]: us-east-1

Default output format [None]: json

$ aws configure set s3.signature_version s3v4 --profile minio-cli

At this point, the AWS CLI is configured to communicate with the MinIO server. We can now create a bucket, copy an object to the bucket, list the objects in the bucket, and so on:

$ aws --endpoint-url http://127.0.0.1:9000 s3 ls --profile minio-cli # list all bucket, should return empty

$ aws --endpoint-url http://127.0.0.1:9000 s3 mb s3://hehebucket --profile minio-cli # create new bucket

make_bucket: hehebucket

$ aws --endpoint-url http://127.0.0.1:9000 s3 cp README.md s3://hehebucket --profile minio-cli # copy a file to bucket as a new object

upload: ./README.md to s3://hehebucket/README.md

$ aws --endpoint-url http://127.0.0.1:9000 s3 ls s3://hehebucket --profile minio-cli # list objects in the bucket

2022-07-09 00:30:23 631 README.md

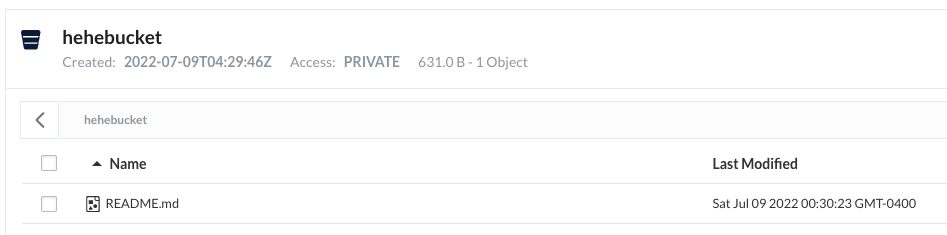

The created bucket and object are also visible in MinIO web console, under “Bucket”:

The steps above validate that AWS CLI can talk to MinIO server. Because of that, MinIO server can emulate an S3 service in any development environment so users do not always have to use S3 from AWS. This makes sense for both cost and security reasons for the organization.
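Every command above repeats the same --endpoint-url and --profile flags. As a convenience, a small shell function can carry them. This is only a sketch: the function name s3local is made up for this example, and the endpoint and profile are simply the ones used in this lab.

```shell
# Hypothetical helper (the name s3local is an assumption, not a MinIO or AWS tool).
# It wraps "aws s3" with the MinIO endpoint and profile used in this lab.
s3local() {
  aws --endpoint-url http://127.0.0.1:9000 --profile minio-cli s3 "$@"
}

# Example calls, equivalent to the commands above:
#   s3local ls
#   s3local cp README.md s3://hehebucket
```

Pointing the function at a different endpoint or profile is a one-line change, which keeps the rest of a script identical whether it talks to MinIO or to AWS S3.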

Connect to S3 with MinIO client

In this lab, we create an S3 bucket and use the mc utility to store an object in it. To create the S3 bucket and its associated permissions consistently, I use the CloudFormation template in this repo. The output of the CloudFormation stack returns the Access Key ID and Secret Key required for the client to access the bucket. Once we have cloned the repo, we enter the obj-store-helper directory and run the AWS CLI command below to launch the CloudFormation template, assuming the AWS CLI has been configured:

BUCKET_NAME=c0sas2dsadigihunch

S3_STACK_NAME=$BUCKET_NAME-stack

aws cloudformation create-stack --template-body file://aws-s3-stack.yaml --stack-name $S3_STACK_NAME --parameters ParameterKey=S3BucketName,ParameterValue=$BUCKET_NAME --capabilities CAPABILITY_IAM

# to delete stack after test, run: aws cloudformation delete-stack --stack-name $S3_STACK_NAME
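Stack creation is asynchronous, so the outputs are not available the moment create-stack returns. As a rough sketch, the AWS CLI can wait for the stack and then print its outputs. The function name show_stack_outputs is made up for this example, and the names of the output keys depend on the template, which I do not assume here.

```shell
# Hypothetical helper (name is an assumption): wait for a CloudFormation
# stack to finish creating, then print whatever outputs it defines.
# Usage: show_stack_outputs "$S3_STACK_NAME"
show_stack_outputs() {
  stack_name=$1
  # Blocks until the stack reaches CREATE_COMPLETE (or fails)
  aws cloudformation wait stack-create-complete --stack-name "$stack_name" &&
    # Prints the Outputs section of the stack as a table
    aws cloudformation describe-stacks --stack-name "$stack_name" \
      --query 'Stacks[0].Outputs' --output table
}
```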

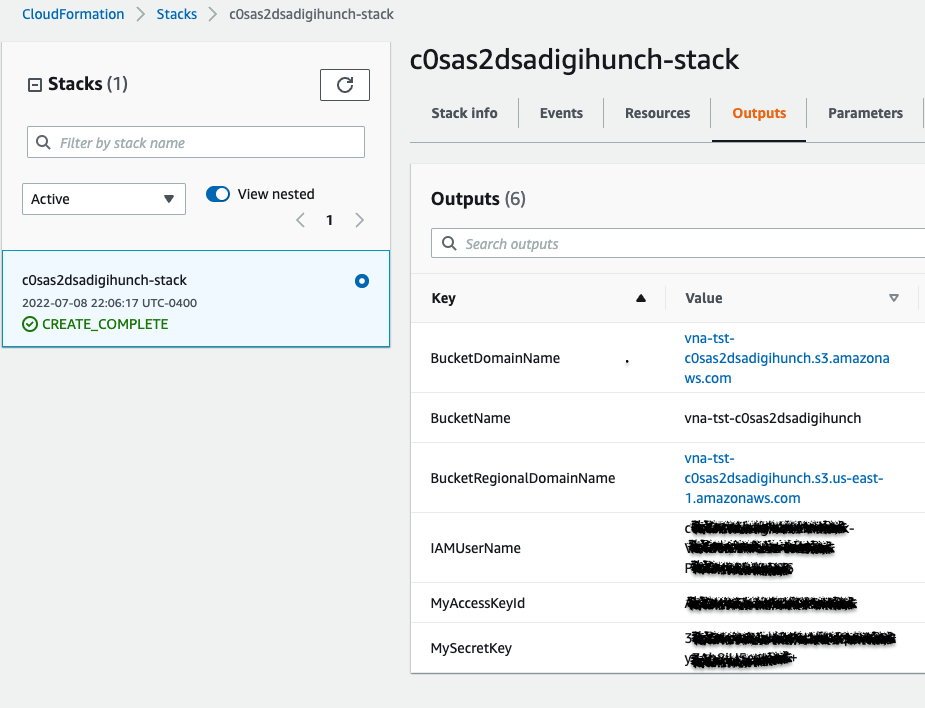

In the AWS console, we should see the configuration information as below:

Supposed the bucket name is vna-tst-c0sas2dsadigihunch as shown above, this allows us to configure the client utility MC as below:

mc alias set awss3 https://s3.amazonaws.com # Fill in access key ID and Secret key at the prompt

mc ls awss3/vna-tst-c0sas2dsadigihunch # list objects in the bucket, should return empty

mc cp README.md awss3/vna-tst-c0sas2dsadigihunch/README.md # upload an object to the bucket

mc ls awss3/vna-tst-c0sas2dsadigihunch # list objects in the bucket, the uploaded object should be there

mc rm awss3/vna-tst-c0sas2dsadigihunch/README.md # delete the object

mc alias remove awss3 # remove awss3 alias

Once we have emptied the bucket, we can delete the CloudFormation stack. This test only needs the client utility mc, and it verifies that the MinIO client is able to talk to the AWS S3 service.

Erasure Coding

For scalable production use, we should deploy MinIO in distributed mode. When MinIO is configured in distributed deployment (MNMD, or multi-node, multi-drive), it implicitly enables an important feature called erasure coding. This erasure coding feature further unlocks a number of other MinIO features:

Erasure coding is MinIO’s data redundancy and availability feature that allows MinIO deployments to automatically reconstruct objects on-the-fly despite the loss of multiple drives or nodes in the cluster. Erasure coding provides object-level handling with less overhead than adjacent technologies such as RAID. The key concept is Erasure Set, a set of drives in a MinIO deployment that supports Erasure Coding. MinIO evenly distributes object data and parity blocks among the drives in the Erasure Set.

Two important variables are M and N: for a given erasure set of size M, MinIO splits objects into N parity blocks, and M-N data blocks. MinIO uses the EC:N notation to refer to the number of parity blocks (N) in the deployment. To determine optimal erasure set size for the cluster, use MinIO’s Erasure Coding Calculator tool.
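To make M and N concrete, here is a small worked calculation. It is a sketch of the arithmetic only, not a replacement for MinIO’s calculator: the function name ec_summary is made up, and the read tolerance of N drives reflects my understanding of MinIO’s documentation rather than something verified in this post.

```shell
# Hypothetical helper (name is an assumption): summarise one erasure set.
# $1 = M, the number of drives in the erasure set
# $2 = N, the number of parity blocks (the N in EC:N)
ec_summary() {
  m=$1
  n=$2
  data=$((m - n))                  # M-N data blocks per object
  usable_pct=$((100 * data / m))   # share of raw capacity left for data (integer %)
  echo "EC:$n on $m drives: $data data + $n parity, ${usable_pct}% usable, readable with up to $n drives lost"
}

ec_summary 16 4
# prints: EC:4 on 16 drives: 12 data + 4 parity, 75% usable, readable with up to 4 drives lost
```

Raising N buys more fault tolerance at the cost of usable capacity, which is the trade-off the calculator helps to explore.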

To let clients specify per-object parity with erasure coding, MinIO uses storage classes. Note that the storage class concept in MinIO is distinct from the AWS S3 storage class or the Kubernetes storage class. In MinIO, a storage class defines the parity setting per object. The STANDARD storage class (default) defines EC:N based on M, and this default can be overridden. In addition, there is the REDUCED_REDUNDANCY storage class, whose parity must be less than or equal to that of the STANDARD storage class.

The erasure-coded backend also protects the storage against bit rot using the HighwayHash algorithm.
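As an illustration of overriding these defaults, the sketch below sets both storage classes through server environment variables, as I understand MinIO’s documentation. The parity values are examples only, and the settings are meaningful only on an erasure-coded (multi-drive) deployment, not on the single-drive lab server used earlier in this post.

```shell
# Example values only; choose parity to suit the erasure set size.
# These are set in the MinIO server's environment before it starts.
export MINIO_STORAGE_CLASS_STANDARD="EC:4"   # parity for STANDARD objects
export MINIO_STORAGE_CLASS_RRS="EC:2"        # parity for REDUCED_REDUNDANCY objects

# A client can then ask for the reduced class per object, for example:
#   aws --endpoint-url http://127.0.0.1:9000 s3 cp README.md s3://hehebucket \
#     --storage-class REDUCED_REDUNDANCY --profile minio-cli
```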

More Features

There are a number of options for authentication and authorization between the MinIO client and the MinIO server. The MinIO client may use the built-in standalone identity management in the MinIO server. This is the default mode. Alternatively, one may delegate IAM to an external service: either Active Directory via LDAP, or any identity provider that supports OIDC (JWT with Authorization Code Flow).

As for Object Lifecycle Management (OLM), MinIO allows you to define a remote storage tier for each local target (bucket). The remote tier can be Amazon S3, Google Cloud Storage or Azure Blob storage. We can use the mc utility to administer the remote tier and OLM. Configuration steps (e.g. Azure Blob, AWS S3) usually include:

- Configure required permissions on the MinIO bucket, create user account for OLM activities.

- Configure the Remote Storage Tier

- Create and Apply an ILM Transition Rule. The rule can be expressed in a json document.

- Validate the creation of ILM transition rule

- Validate the effect of transition rule.

As for encryption, MinIO supports encryption at rest. It can also work with an etcd store to hold encrypted IAM assets if KMS is configured.

Conclusion

While we watch the progress of the COSI initiative, we still use a REST API to access object storage from a container, which is no different from doing so on a virtual machine. If we develop an application, we should make it support the S3 protocol, the de facto standard protocol for object storage. As for the storage backend, if we want to be vendor neutral, the feature-rich MinIO is the best bet. We can use MinIO to build our own S3-compatible object storage as a service. We can also transition our objects to a remote object storage tier backed by Azure, GCP or S3. In this post, we validated the S3 compatibility and discussed some advanced MinIO features.