Kubernetes networking involves a lot of details. We discuss some CNI plugins in this post.

The most basic mode is kubenet. To use it, we start the kubelet process with --network-plugin=kubenet. Kubenet is not a CNI plugin itself, but it works with the CNI-compliant bridge, lo and host-local implementations. It is a basic network plugin based on the bridge plugin, with the addition of port mapping and traffic shaping. We can specify the MTU directly with --network-plugin-mtu. Kubenet does not offer cross-node networking itself. Today it is typically used in managed clusters, where the cloud provider sets up the routing rules for inter-node communication.

When a cluster goes multi-node, the main challenge is communication between Pods across different nodes. Pods come and go, and the size of the cluster can increase or decrease as well. The network solutions come in two types: overlay networks based on encapsulation, and non-overlay networks, most likely using routing techniques. Common backends for multi-host container networking solutions include VXLAN encapsulation, IPIP encapsulation, host-gw and IPSec. In addition, there are some backends that are only used by certain plugins.

Common Backends

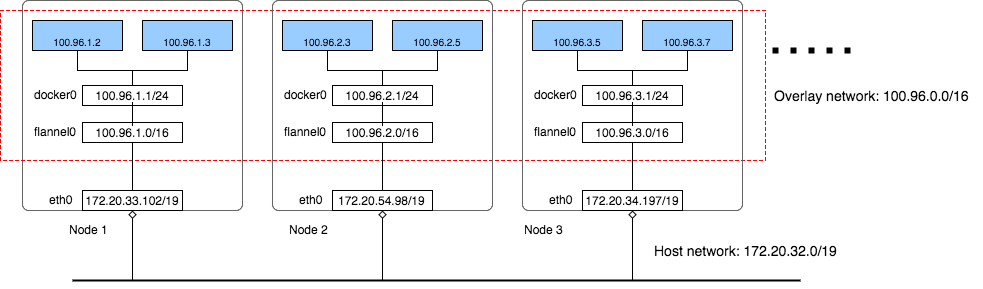

VXLAN: uses in-kernel VXLAN to encapsulate the packets. VXLAN is a virtual networking capability in Linux that is also used in virtualization technology. It is an overlay technology that encapsulates the overlay network’s layer-2 frames in UDP packets (layer 4) on the underlay network. When configured, the VXLAN backend creates a Flannel interface on every host. When a container on one node wishes to send traffic to a different node, the packet goes from the container to the bridge interface in the host’s network namespace. From there the bridge forwards it to the Flannel interface, because the kernel route table designates this interface as the target for the non-local portion of the overlay network. The Flannel network driver wraps the packet in a UDP packet and sends it to the target host. Once it arrives at its destination, the process flows in reverse: the Flannel driver on the destination host unwraps the packet and sends it to the bridge interface, and from there the packet finds its way into the overlay network and to the destination Pod.
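To make the wrapping step more concrete, here is a minimal sketch of the 8-byte VXLAN header that sits between the outer UDP header and the inner layer-2 frame, following the layout in RFC 7348. The function names and the VNI value used in the example are my own illustrative choices, and in practice the Linux kernel does this work, not user code.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag from RFC 7348: the VNI field is valid


def build_vxlan_header(vni: int) -> bytes:
    """Return the 8-byte VXLAN header for a 24-bit VXLAN Network Identifier."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags (8 bits) + 24 reserved bits.
    # Word 2: VNI (24 bits) + 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def parse_vni(header: bytes) -> int:
    """Extract the VNI from the first 8 bytes of a VXLAN payload."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI flag is not set")
    return vni_word >> 8


def encapsulate(inner_frame: bytes, vni: int) -> bytes:
    """Prefix a layer-2 frame with a VXLAN header; the result is the UDP payload."""
    return build_vxlan_header(vni) + inner_frame


if __name__ == "__main__":
    frame = bytes(64)  # placeholder for an Ethernet frame leaving a Pod
    udp_payload = encapsulate(frame, vni=1)  # VNI 1 is only an example value
    print(len(udp_payload), parse_vni(udp_payload))
```

The destination host reverses the step: it strips the 8 bytes, checks the VNI, and hands the inner frame to the bridge.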

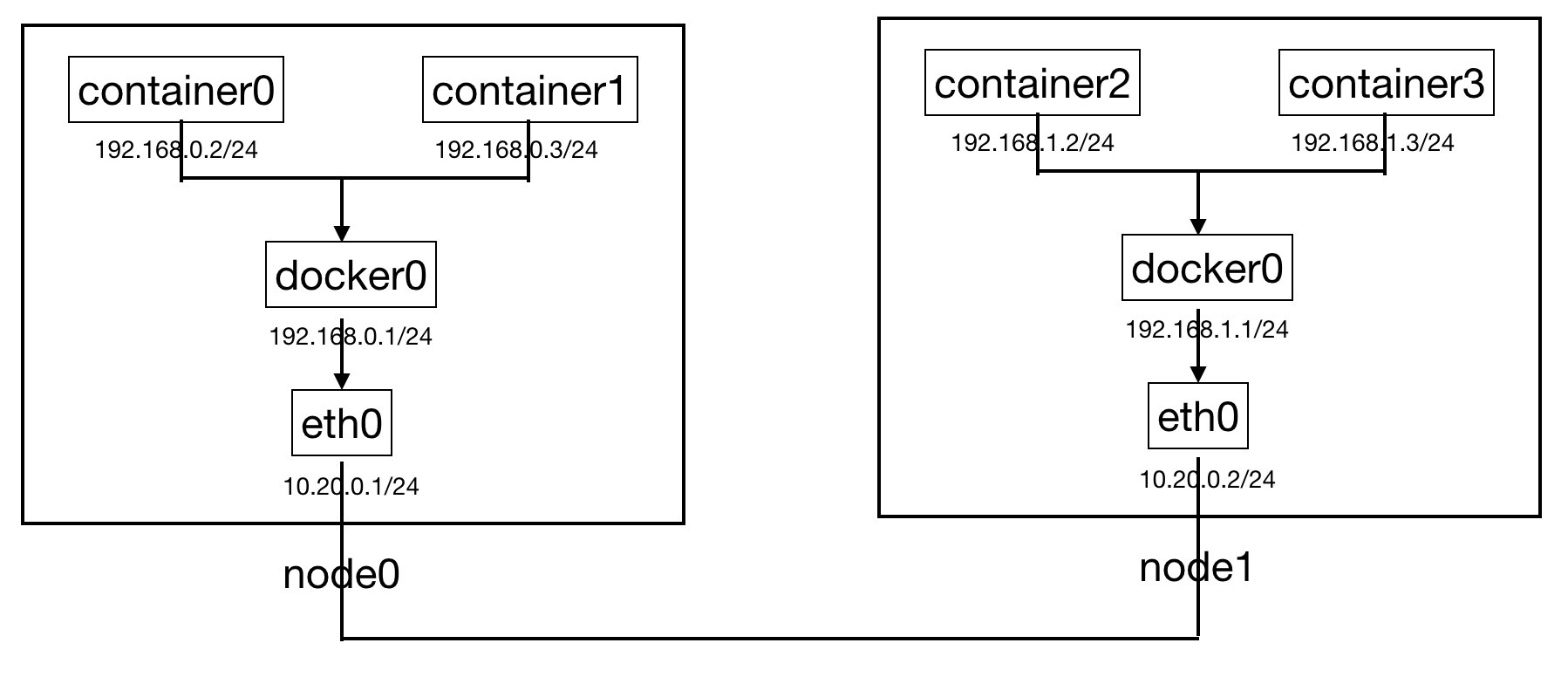

host-gw: host-gw is a non-overlay solution that maintains route tables on each Linux host to allow Pods to communicate across nodes. It is only used in the Flannel plugin. Suppose we have two hosts, each with two containers, connected as shown below. Initially, container0 is not able to reach container2 because the route table on node0 does not have an entry that matches container2’s IP address. The packet is therefore sent to the default route, which does not lead to container2.

However, if we add rules that match the container IP addresses to the route table of each node, the issue is solved. This is essentially how host-gw works. Specifically, on node 0 we add “ip route add 192.168.1.0/24 via 10.20.0.2 dev eth0”, and on node 1 we add “ip route add 192.168.0.0/24 via 10.20.0.1 dev eth0”. The host-gw backend in Flannel manages these rules for us. Note that the two hosts must have direct layer 2 connectivity. In other words, there must not be a router between the two nodes; otherwise the packet would be handed to a router whose routing table is out of Flannel’s reach. In fact, all nodes in a Flannel network that uses host-gw must have layer 2 connectivity with each other, that is, they must all be in a single LAN. In exchange, host-gw provides better performance than VXLAN.
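The rule generation described above can be sketched in a few lines. This is not Flannel's code, only an illustration of the idea: every node gets one route per peer, pointing the peer's Pod subnet at the peer's host IP. The node names, the dictionary layout and the 10.20.0.0/24 host network are assumptions for the example; the addresses match the two-node case above.

```python
import ipaddress

# Hypothetical inventory: host IP and Pod subnet of each node.
NODES = {
    "node0": {"host_ip": "10.20.0.1", "pod_cidr": "192.168.0.0/24"},
    "node1": {"host_ip": "10.20.0.2", "pod_cidr": "192.168.1.0/24"},
}


def host_gw_routes(nodes: dict, dev: str = "eth0") -> dict:
    """For each node, list the `ip route add` commands that reach every peer's Pod subnet."""
    commands = {}
    for name in nodes:
        commands[name] = [
            f"ip route add {peer['pod_cidr']} via {peer['host_ip']} dev {dev}"
            for peer_name, peer in nodes.items()
            if peer_name != name
        ]
    return commands


def in_same_subnet(nodes: dict, host_network: str) -> bool:
    """Rough proxy for the layer 2 requirement: all host IPs fall in one subnet."""
    network = ipaddress.ip_network(host_network)
    return all(ipaddress.ip_address(n["host_ip"]) in network for n in nodes.values())


if __name__ == "__main__":
    # 10.20.0.0/24 is an assumed host network; the post does not give its prefix length.
    print(in_same_subnet(NODES, "10.20.0.0/24"))
    for node, routes in host_gw_routes(NODES).items():
        for route in routes:
            print(f"{node}: {route}")
```

With N nodes, each route table holds N-1 such entries. Sharing a subnet is only a hint that the hosts are layer 2 adjacent, not a proof.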

IPSec: uses in-kernel IPSec to encapsulate and encrypt the packets. IPsec is a group of protocols that provides authentication and encryption per packet between devices. Since it secures traffic at layer 3, it has become a major backend technology for VPNs. IPsec adds several headers and trailers to each datagram, containing authentication and encryption information. The two major protocols in IPSec are AH (Authentication Header) and ESP (Encapsulating Security Payload). AH provides authentication only; ESP provides both authentication and encryption. IPsec also uses the IKE protocol for key exchange.

IPSec works in two modes: transport mode and tunnel mode.

- Transport mode secures traffic between two devices end to end. The payload of each datagram is encrypted, but the original IP header is not. Intermediary routers are thus able to view the final destination of each datagram, unless a separate tunnelling protocol (e.g. GRE) is used.

- Tunnel mode works between two endpoints, such as two routers, protecting all traffic that goes through the tunnel. The original IP header containing the final destination of the datagram is encrypted, in addition to the payload. To tell intermediary routers where to forward the datagrams, IPsec adds a new IP header. At each end of the tunnel, the routers decrypt the original IP headers to deliver the datagrams to their destinations. The intermediary routers do not know the final destination, or what transport protocol is used.
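The difference between the two modes comes down to which parts of the packet end up inside the encrypted region. The sketch below models an ESP-protected packet as an ordered list of fields with an "encrypted" flag, following the general ESP layout. It is a teaching model with names I made up, not a packet builder.

```python
def esp_layout(mode: str) -> list:
    """Return (field, encrypted) pairs in wire order for an ESP-protected packet."""
    if mode == "transport":
        return [
            ("original IP header", False),
            ("ESP header", False),
            ("payload", True),
            ("ESP trailer", True),
            ("ESP authentication data", False),
        ]
    if mode == "tunnel":
        return [
            ("new IP header", False),
            ("ESP header", False),
            ("original IP header", True),
            ("payload", True),
            ("ESP trailer", True),
            ("ESP authentication data", False),
        ]
    raise ValueError(f"unknown mode: {mode}")


def visible_to_routers(mode: str) -> list:
    """Fields an intermediary router can read, i.e. everything left unencrypted."""
    return [field for field, encrypted in esp_layout(mode) if not encrypted]


if __name__ == "__main__":
    for mode in ("transport", "tunnel"):
        print(mode, visible_to_routers(mode))
```

In transport mode the original IP header is in the readable list; in tunnel mode only the new outer header is.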

An IPIP (IP over IP) tunnel is typically used to connect two internal IPv4 subnets through the public IPv4 internet. It has the lowest overhead but can only transmit IPv4 unicast traffic.
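A quick way to compare the overhead of these backends is to look at how much room each one leaves for the Pod's own packets. The sketch below uses the commonly quoted per-packet overheads over an IPv4 underlay: 20 bytes for IPIP (one extra IPv4 header) and 50 bytes for VXLAN. IPSec is left out because its overhead depends on the chosen cipher and mode. The function name and table are my own.

```python
# Bytes added to each packet by the encapsulation, assuming an IPv4 underlay.
ENCAP_OVERHEAD_BYTES = {
    "host-gw": 0,  # plain routing, nothing is added
    "ipip": 20,    # one extra IPv4 header
    "vxlan": 50,   # outer IPv4 (20) + UDP (8) + VXLAN (8) + inner Ethernet (14)
}


def pod_mtu(underlay_mtu: int, backend: str) -> int:
    """Largest MTU a Pod interface can use without fragmenting on the underlay."""
    try:
        overhead = ENCAP_OVERHEAD_BYTES[backend]
    except KeyError:
        raise ValueError(f"unknown backend: {backend}") from None
    return underlay_mtu - overhead


if __name__ == "__main__":
    for backend in ENCAP_OVERHEAD_BYTES:
        print(backend, pod_mtu(1500, backend))
```

On a standard 1500-byte Ethernet underlay this gives 1480 bytes for IPIP and 1450 bytes for VXLAN.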

CNI Plugins

Originally, the network functions were developed in-tree. Then the CNI specification came along to allow plugins that implement cluster networking functions to be developed out-of-tree. The Container Network Interface seeks to completely decouple network management from the container runtime. Kubernetes picked CNI over CNM in 2016, as discussed in my virtualization discussion. CNI clearly defines the specification for the following activities:

- When a Pod comes up, give it a network interface

- Assign IP to the network interface

- When a Pod is deleted, delete the associated network interface
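The three activities above map onto the ADD and DEL commands of the CNI specification. The runtime runs the plugin binary, passes the command and container details in environment variables (CNI_COMMAND, CNI_CONTAINERID, CNI_NETNS, CNI_IFNAME) and sends the network configuration as JSON on stdin. Below is a toy dispatcher that mimics that contract in memory. It creates no real interfaces, and the allocator, the "toy" plugin type and the 10.244.1.0/24 subnet are hypothetical.

```python
import ipaddress
import json


def first_free_address(subnet: str, in_use: set) -> str:
    """Toy IPAM: first unused host address, keeping the first one for the gateway."""
    net = ipaddress.ip_network(subnet)
    hosts = iter(net.hosts())
    next(hosts)  # assumption: the first host address belongs to the bridge/gateway
    for host in hosts:
        candidate = f"{host}/{net.prefixlen}"
        if candidate not in in_use:
            return candidate
    raise RuntimeError("subnet exhausted")


def handle_cni_call(env: dict, stdin_config: str, leases: dict) -> dict:
    """Dispatch one invocation: command from the environment, config from stdin."""
    conf = json.loads(stdin_config)
    command = env["CNI_COMMAND"]
    container_id = env["CNI_CONTAINERID"]
    if command == "ADD":
        # A real plugin would create env["CNI_IFNAME"] inside env["CNI_NETNS"] here.
        if container_id not in leases:
            leases[container_id] = first_free_address(
                conf["ipam"]["subnet"], set(leases.values())
            )
        return {
            "cniVersion": conf["cniVersion"],
            "interfaces": [{"name": env["CNI_IFNAME"], "sandbox": env["CNI_NETNS"]}],
            "ips": [{"address": leases[container_id], "interface": 0}],
        }
    if command == "DEL":
        # A real plugin would delete the interface; here we only release the address.
        leases.pop(container_id, None)
        return {}
    raise ValueError(f"unsupported CNI_COMMAND: {command}")


CONF = json.dumps({"cniVersion": "0.4.0", "name": "demo", "type": "toy",
                   "ipam": {"subnet": "10.244.1.0/24"}})
ENV = {"CNI_COMMAND": "ADD", "CNI_CONTAINERID": "pod-a",
       "CNI_NETNS": "/var/run/netns/pod-a", "CNI_IFNAME": "eth0"}

if __name__ == "__main__":
    leases = {}
    print(json.dumps(handle_cni_call(ENV, CONF, leases), indent=2))
    handle_cni_call(dict(ENV, CNI_COMMAND="DEL"), CONF, leases)
    print(leases)
```

Keeping the lease table outside the function mirrors the fact that a plugin binary is short-lived: any state it needs between ADD and DEL has to live somewhere else, for example on disk.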

When we configure a Kubernetes cluster, we must specify the --network-plugin switch so that the cluster is operational. If we use CNI as the network plugin, we also need to install the plugin, optionally with the help of Rancher.

On the worker node, we start the kubelet process with --network-plugin=cni to use CNI plugins. A plugin may consist of one or more binaries. The binaries are located in /opt/cni/bin (or the directory specified by --cni-bin-dir). The configurations are located in /etc/cni/net.d (or the directory specified by --cni-conf-dir). Note that a configuration file may reference different plugin implementations for different network management purposes (e.g. interface creation, address allocation, etc). The container networking repo provides some reference implementations, and some of them are used by other plugins. These reference implementations include:

- Main (interface creation): bridge, ipvlan, loopback, ptp, macvlan, etc

- IPAM (IP address management): host-local, dhcp, static

- Meta (other plugins): portmap, bandwidth
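To show how one configuration file can chain several of these reference plugins, here is a sketch that builds a plugin list combining bridge (Main), host-local (IPAM) and portmap (Meta). The network name, bridge name, subnet and cniVersion are example values I picked, and the file name mentioned afterwards is hypothetical.

```python
import json


def bridge_conflist(name: str, subnet: str, bridge: str = "cni0") -> dict:
    """Build a CNI network configuration list: bridge + host-local, then portmap."""
    return {
        "cniVersion": "0.4.0",
        "name": name,
        "plugins": [
            {
                "type": "bridge",      # Main plugin: creates the bridge and veth pair
                "bridge": bridge,
                "isGateway": True,
                "ipMasq": True,
                "ipam": {              # IPAM plugin: allocates addresses from a local range
                    "type": "host-local",
                    "subnet": subnet,
                    "routes": [{"dst": "0.0.0.0/0"}],
                },
            },
            {
                "type": "portmap",     # Meta plugin: host port to container port mapping
                "capabilities": {"portMappings": True},
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(bridge_conflist("mynet", "10.244.1.0/24"), indent=2))
```

Saved under the configuration directory as something like 10-mynet.conflist, a file of this shape tells the runtime which binaries from the plugin directory to run, and in what order.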

So, a CNI plugin consists of a networking solution as its backend, and binaries that cover the aspects outlined above. I discussed some common backends above. Below I will introduce some common plugins and the backends only available to each plugin.

Flannel

Flannel by CoreOS supports a range of backends. An advantage of Flannel is that it reduces the complexity of doing port mapping. This is a great post that covers the mechanism.

It supports VXLAN, host-gw, IPSec and IPIP, as well as the following:

- Amazon VPC: recommended when running in an Amazon VPC. This backend creates IP routes in an AWS route table. The number of records in this table is limited to 50, so you can’t have more than 50 machines in a cluster.

- GCE: recommended with Google Compute Engine Network. Instead of using encapsulation, the GCE backend also manipulates IP routes to achieve maximum performance. Because of this, a separate flannel interface is not created.

- UDP: for debugging only, or for old kernels that don’t support VXLAN

Calico

Border Gateway Protocol (BGP) is a standardized exterior gateway protocol designed to exchange routing and reachability information among autonomous systems (AS) on the Internet.

Calico operates at layer 3. It prefers BGP without an overlay network for the highest speed and efficiency, but in scenarios where hosts cannot directly communicate with one another, it can utilize an overlay solution (e.g. VXLAN or IP-in-IP). Calico also supports network policies for protecting workloads and nodes from malicious activity or aberrant applications.

The Calico networking Pod contains a CNI container that keeps track of Pod deployments and registers addresses and routes. It also contains a daemon that announces the IP and route information to the network via the Border Gateway Protocol (BGP). The BGP daemons build a map of the network that enables cross-host communication.

Calico requires a distributed and fault-tolerant key/value store, and deployments often choose etcd to deliver this component. Calico uses it to store metadata about routes, virtual interfaces, and network policy objects. Calico can either use a separate HA deployment of etcd, or the same etcd datastore as the Kubernetes cluster.

When we are unable to use BGP (e.g. with a cloud provider, or in an environment where we have no permission to configure router peers), we can use Calico’s IP-in-IP mode, which encapsulates packets before sending them to other nodes.

Once IP-in-IP is configured, Calico wraps inter-Pod packets in a new packet with headers that indicate the source of the packet is the host with the originating Pod, and the target of the packet is the host with the destination Pod. The Linux kernel performs this encapsulation, and then forwards the packet to the destination host where it is unwrapped and delivered to the destination Pod.
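As an illustration of the wrapping described above, the sketch below builds the outer IPv4 header by hand: the source and destination are the two hosts, and the protocol field is 4, the IP protocol number for IP-in-IP. In a real cluster the Linux kernel does this, so treat the code as a model of the packet layout only. The function names and the host addresses in the example are assumptions.

```python
import socket
import struct

IPPROTO_IPIP = 4  # IP protocol number for IPv4-in-IPv4 encapsulation


def ipv4_checksum(header: bytes) -> int:
    """Standard ones' complement checksum over an IPv4 header."""
    total = sum(struct.unpack(f"!{len(header) // 2}H", header))
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def outer_header(src_host: str, dst_host: str, inner_len: int, ttl: int = 64) -> bytes:
    """Build a 20-byte outer IPv4 header addressed from host to host."""
    version_ihl = (4 << 4) | 5  # IPv4, header length of 5 32-bit words
    header = struct.pack(
        "!BBHHHBBH4s4s",
        version_ihl, 0, 20 + inner_len,  # version/IHL, TOS, total length
        0, 0,                            # identification, flags/fragment offset
        ttl, IPPROTO_IPIP, 0,            # TTL, protocol, checksum placeholder
        socket.inet_aton(src_host), socket.inet_aton(dst_host),
    )
    checksum = ipv4_checksum(header)
    return header[:10] + struct.pack("!H", checksum) + header[12:]


def ipip_encapsulate(inner_packet: bytes, src_host: str, dst_host: str) -> bytes:
    """Wrap a complete inner IPv4 packet (Pod to Pod) in an outer host-to-host header."""
    return outer_header(src_host, dst_host, len(inner_packet)) + inner_packet


if __name__ == "__main__":
    inner = b"\x45" + bytes(19)  # placeholder for a Pod-to-Pod IPv4 packet
    packet = ipip_encapsulate(inner, "10.20.0.1", "10.20.0.2")
    print(len(packet), packet[9])
```

The receiving host sees protocol 4 in the outer header, strips those 20 bytes, and routes the inner packet to the destination Pod as usual.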

Canal

The following is quoted from the Rancher website:

Canal seeks to integrate the networking layer provided by Flannel with the networking policy capabilities of Calico. As the contributors worked through the details however, it became apparent that a full integration was not necessarily needed if work was done on both projects to ensure standardization and flexibility. As a result, the official project became somewhat defunct, but the intended ability to deploy the two technology together was achieved. For this reason, it’s still sometimes easiest to refer to the combination as “Canal” even if the project no longer exists.

Because Canal is a combination of Flannel and Calico, its benefits are also at the intersection of these two technologies. The networking layer is the simple overlay provided by Flannel that works across many different deployment environments without much additional configuration. The network policy capabilities layered on top supplement the base network with Calico’s powerful networking rule evaluation to provide additional security and control.

Weave Net

Weave Net by Weaveworks offers a different paradigm. Weave creates a mesh overlay network between each of the nodes in the cluster, allowing for flexible routing between participants. Applications use the network just as if the containers were all plugged into the same network switch, with no need to configure port mappings and links.

For more good references to determine networking options, check out these posts: