Managed Kubernetes services give users a cluster endpoint and a number of worker nodes. For each of them, users can choose to make it publicly accessible or keep it on private networking. In my opinion, any deployment beyond a personal hobby project should use a private Kubernetes cluster, with both the cluster endpoint and the worker nodes on private subnets. There is no reason to expose compute nodes or Kubernetes management traffic publicly.

For worker nodes, it is fairly easy to put VMs on a private network, but many companies still expose the cluster endpoint publicly. There are usually two reasons. First, their CI/CD agent is hosted somewhere else on the Internet (instead of on a private network with private connectivity to the Kubernetes cluster) and needs to reach the cluster endpoint. Second, when the cluster integrates with a third-party identity provider as its OIDC provider, two-way communication is needed.

A classic pattern is a public bastion host (jump box): the bastion host sits on a public subnet and can route to the private endpoint of the managed Kubernetes service. Clients connect to the bastion host on port 22 at its public IP address, authenticating with an SSH key pair or, worse, a password. SSH port forwarding (aka SSH tunnelling) does the rest.
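To make the classic pattern concrete, here is a minimal sketch of the port forwarding it relies on, assuming OpenSSH on the client and a bastion reachable on port 22. The function name `forward_via_bastion` and the default local port 8443 are my own choices for illustration, not part of any tool:

```shell
#!/usr/bin/env bash
# Hypothetical helper: forward a local port through a public bastion host
# to the private Kubernetes API endpoint (HTTPS on port 443).
#   $1 - bastion login, e.g. ec2-user@203.0.113.10 (placeholder address)
#   $2 - private cluster endpoint hostname
#   $3 - local port to listen on (defaults to 8443)
forward_via_bastion() {
  local bastion="$1" endpoint="$2" local_port="${3:-8443}"
  # -N: run no remote command, only forward ports
  # -L: listen on local_port and forward connections to endpoint:443 via the bastion
  ssh -N -L "${local_port}:${endpoint}:443" "$bastion"
}
```

With the tunnel up, kubectl would talk to https://localhost:8443. Because the API server certificate is issued for the endpoint hostname rather than localhost, you would likely also need kubectl's `--tls-server-name` flag set to that hostname.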

Exposing a jump box on a public subnet with RSA key authentication is still not ideal. In this post, I’ll examine some patterns for connecting to a private endpoint with an improved security posture.

AWS options

There are two problems. First, how to establish connectivity to a bastion host in a private subnet. Second, how to use the bastion host to proxy traffic to the cluster endpoint, which is also in a private subnet. For the first problem, there are two potential solutions: SSM Session Manager, and EC2 Instance Connect (EIC) with an EIC Endpoint (EICE).

SSM Session Manager was introduced in 2018. It runs an agent on the EC2 instance, which initiates a connection to the SSM endpoint on the AWS side. This connection enables not only Session Manager but also other Systems Manager (SSM) capabilities such as Fleet Manager, Patch Manager and State Manager. The problem Session Manager originally addressed is server management.

AWS launched EC2 Instance Connect (EIC) in 2019 and the EIC Endpoint (EICE) in 2023. EIC addresses the problem of managing SSH key pairs at scale: it dynamically generates a one-time SSH key pair for server access, based on IAM permissions. On its own, however, it still requires the instance's SSH port to be publicly accessible. With EICE, that is no longer a requirement. In the diagram, EICE is placed in a private subnet, allowing the EICE service to reach private instances on their SSH port.
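The EICE setup can be sketched with two AWS CLI calls, assuming you already have a private subnet, a security group for the endpoint (keeping the default allow-all egress) and the bastion's security group. The function name `setup_eice` and its argument order are hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: create an EC2 Instance Connect Endpoint in a private
# subnet and let it reach the bastion's SSH port.
#   $1 - private subnet ID for the endpoint
#   $2 - security group ID attached to the endpoint
#   $3 - security group ID of the bastion instance
setup_eice() {
  local subnet_id="$1" eice_sg_id="$2" bastion_sg_id="$3"
  # The endpoint lives in the private subnet; no public IP is involved.
  aws ec2 create-instance-connect-endpoint \
    --subnet-id "$subnet_id" \
    --security-group-ids "$eice_sg_id" || return 1
  # Allow SSH (TCP 22) into the bastion only from the endpoint's security group.
  aws ec2 authorize-security-group-ingress \
    --group-id "$bastion_sg_id" \
    --protocol tcp --port 22 \
    --source-group "$eice_sg_id"
}
```

Scoping the ingress rule to the endpoint's security group, rather than a CIDR range, keeps port 22 closed to everything except the EICE.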

Here is a comparison of the two:

| | EC2 Instance Connect (EIC) with EIC Endpoint | SSM Session Manager |
|---|---|---|
| Location of bastion host | Private subnet | Private subnet |
| Needs ingress port | Yes. Port 22 must be open to the endpoint. | No. The SSM agent initiates an outbound connection from the instance. |
| Traffic path | AWS CLI → AWS EIC ES → EICE → EC2 instance | AWS CLI → AWS SSM ES → SSM ← EC2 instance |
| Authentication | AWS IAM and an ephemeral SSH key when using the AWS CLI directly; AWS IAM and a long-term SSH key when using an SSH proxy command | AWS IAM only when using the AWS CLI directly; AWS IAM and a long-term SSH key when using an SSH proxy command |
| Works with OpenSSH | Yes | Yes |
| Cost | No additional cost | No additional cost, unless you use private SSM endpoints |

Let’s take a look at each option.

EC2 Instance Connect

To use EIC, pick an AMI that has it pre-installed and make sure the IAM identity you connect with has the required permissions, as the AWS documentation describes. The AWS CLI uses the local OpenSSH client, so make sure the connection on port 22 is open. To make it work with an EC2 instance on a private subnet, create an EC2 Instance Connect Endpoint in the VPC and ensure that the instance's security group allows port 22 from the endpoint. Run this command:

```shell
$ aws ec2-instance-connect ssh --instance-id i-00ea30a6e02db33fe
```

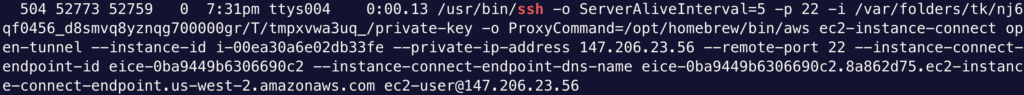

The command above simply generates a key pair internally, adds the public key to the server side, and connects with SSH from the client side, dropping you into an SSH session. If you check ps -ef | grep ssh on the client machine, you can see the full SSH command line, including the location of the ephemeral private key.

However, if you use the AWS CLI's open-tunnel as a proxy command for ssh, you still have to use the key pair the EC2 instance was launched with. As suggested at the bottom of this blog post, the command is:

```shell
$ ssh ec2-user@[INSTANCE] -i [SSH-KEY] -o ProxyCommand='aws ec2-instance-connect open-tunnel --instance-id %h'
```

This is a bummer, because with the native SSH tool you do not get the primary benefit of EIC: the ephemeral key pair.
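If you would rather type ssh i-... than the full command, the same proxy command can go into ~/.ssh/config. A sketch that appends such an entry, assuming you hold the private key of the instance's launch key pair; the function name and key path are hypothetical, and because the entry matches i-* like my SSM entry further down, you would use one or the other, not both:

```shell
#!/usr/bin/env bash
# Hypothetical helper: append an OpenSSH config entry that tunnels through
# the EC2 Instance Connect Endpoint, so "ssh i-0123..." works by instance ID.
#   $1 - path to the SSH config file (e.g. ~/.ssh/config)
#   $2 - path to the long-term private key (the instance's launch key pair)
add_eic_ssh_config() {
  local config_file="$1" key_file="$2"
  # Unquoted heredoc: ${key_file} is expanded now, while %h stays literal
  # and is replaced by ssh with the target host (the instance ID) at connect time.
  cat >> "$config_file" <<EOF
Host i-*
    User ec2-user
    IdentityFile ${key_file}
    ProxyCommand aws ec2-instance-connect open-tunnel --instance-id %h
EOF
}
```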

SSM Session Manager

Now let’s look at SSM Session Manager. Similarly, it needs an agent installed and an IAM role configured. You can connect from the web console but, more importantly, from the AWS CLI:

```shell
$ aws ssm start-session --target i-0531b19bec8ad022d
```

This command takes you to a shell session as the user ssm-user, without starting an OpenSSH client process locally, so users do not have to manage key pairs. There is also AWS documentation on using Session Manager as an SSH proxy command, which relies on the AWS-StartSSHSession SSM document. Here is one of my SSH config entries:

```
Host i-* mi-*
    ProxyCommand sh -c "aws ssm start-session --target %h --document-name AWS-StartSSHSession --parameters 'portNumber=%p'"
    User ec2-user
    IdentityFile ~/.ssh/id_rsa
```

This allows me to SSH directly to an instance by its instance ID with the OpenSSH client (e.g. ssh i-0531b19bec8ad022d). With this setup, I also need to specify my own OS user and a matching private key.
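Because the proxy command only works once the agent has registered the instance with Systems Manager, a quick pre-flight check can save some head-scratching. A sketch using aws ssm describe-instance-information; the function name `ssm_agent_online` is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if the instance is registered with
# Systems Manager and its agent currently reports as online.
#   $1 - instance ID, e.g. i-0531b19bec8ad022d
ssm_agent_online() {
  local instance_id="$1" status
  status="$(aws ssm describe-instance-information \
    --filters "Key=InstanceIds,Values=${instance_id}" \
    --query 'InstanceInformationList[0].PingStatus' \
    --output text)" || return 1
  # PingStatus is "Online" when the agent is connected; an unregistered
  # instance yields no match, which the CLI's text output prints as "None".
  [ "$status" = "Online" ]
}
```

For example: ssm_agent_online i-0531b19bec8ad022d && ssh i-0531b19bec8ad022d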

I know I will use the OpenSSH client a lot from pipelines because it is very powerful. With either option, I have to manage key pairs myself. With SSM Session Manager’s proxy command, however, the instance does not need port 22 open, which is a great advantage in terms of both security and operations. SSM Session Manager is the winner.

SOCKS5 proxy for kubectl

Either SSM Session Manager or EIC with EICE gives us an encrypted SSH tunnel between the client (a local computer or a pipeline agent) and the bastion host. On top of the SSH tunnel, we can build a SOCKS5 proxy. The Kubernetes documentation has a good page on how to do this. I managed to get it to work, with a few gotchas.

To put this into practice, I first created a VPC stack with a bastion host using the Terraform template from my vpc-base project. The Terraform output gives the next set of commands to run to create a private cluster, using a manifest rendered from the file private-cluster.yaml.tmpl:

```shell
# Run this from a remote host without access to the cluster endpoint.
cd aws_vpc
terraform init
terraform plan
# terraform output contains the variables needed for the next steps
terraform apply

# ... run the commands given in the terraform output, which render the manifest, e.g.:
# envsubst < private-cluster.yaml.tmpl > private-cluster.yaml

# the command below may take 15 minutes to create a private cluster
eksctl create cluster -f private-cluster.yaml
aws eks update-kubeconfig --name private-cluster
```

At this point, the kubeconfig file has been updated, but kubectl (from the Internet or on-prem) is unable to connect to the cluster endpoint (on the private network). In order to reach the endpoint through the bastion host, run the following:

```shell
BASTION_SECURITY_GROUP_ID=$(terraform output -raw bastion_sg_id)
CLUSTER_SECURITY_GROUP_ID=$(aws eks describe-cluster --name private-cluster --query "cluster.resourcesVpcConfig.clusterSecurityGroupId" --output text)

# In the cluster endpoint's security group, open port 443 to the bastion host
aws ec2 authorize-security-group-ingress --group-id "$CLUSTER_SECURITY_GROUP_ID" --source-group "$BASTION_SECURITY_GROUP_ID" --protocol tcp --port 443

# Test by connecting to the bastion host with "ssh i-0750643179667a5b6", assuming
# ~/.ssh/config is configured as above. From the bastion host, you can test:
#   curl -k https://EC5405EE1846F19F9F61ED28FB12A6A9.sk1.us-west-2.eks.amazonaws.com/api
# If you get an HTTP response, even a 403, the bastion host has TCP connectivity
# to the cluster endpoint.

# Then start an SSH session as a SOCKS5 proxy on the remote host
ssh -D 1080 -q -N i-0750643179667a5b6
# Append "> /dev/null 2>&1 &" to push it to the background, or press Ctrl+Z
# after running the command and then run "bg" so the tunnel keeps working.

# To validate that the SOCKS5 proxy is working, run the same curl command with a proxy parameter:
#   curl -k https://EC5405EE1846F19F9F61ED28FB12A6A9.sk1.us-west-2.eks.amazonaws.com/api --proxy socks5://localhost:1080

# Instruct kubectl to use the SOCKS5 proxy with the following environment variable
export HTTPS_PROXY=socks5://localhost:1080
kubectl get node

# Alternatively, add "proxy-url: socks5://localhost:1080" below the server attribute in ~/.kube/config.
```
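Two small conveniences on top of the steps above, as a sketch: ssh -f puts the tunnel in the background after authentication instead of relying on & or Ctrl+Z, and kubectl config set-cluster --proxy-url writes the proxy-url setting without hand-editing ~/.kube/config. The function names and the default port 1080 are assumptions; the host argument relies on the SSM-based ~/.ssh/config entry shown earlier.

```shell
#!/usr/bin/env bash
# Hypothetical helpers around the SOCKS5 steps.
#   start_socks_proxy <ssh-host> [port] - background SOCKS5 proxy via ssh -D
#   use_socks_proxy [port]              - record the proxy on the current kubeconfig cluster
start_socks_proxy() {
  local host="$1" port="${2:-1080}"
  # -f: go to the background after authentication
  # -q: quiet; -N: no remote command; -D: dynamic (SOCKS) forwarding on the local port
  ssh -f -q -N -D "$port" "$host"
}

use_socks_proxy() {
  local port="${1:-1080}" cluster
  # Name of the cluster entry used by the current kubeconfig context
  cluster="$(kubectl config view --minify -o jsonpath='{.clusters[0].name}')" || return 1
  # Same effect as adding "proxy-url: socks5://localhost:<port>" under server
  kubectl config set-cluster "$cluster" --proxy-url="socks5://localhost:${port}"
}
```

For example, start_socks_proxy i-0750643179667a5b6 && use_socks_proxy, after which kubectl get node works without exporting HTTPS_PROXY.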

There are some pitfalls to watch for. On the remote host, both the ssh command and the kubectl command implicitly use the AWS CLI. Therefore, make sure the profile and IAM role are correctly configured. For example, if the SSM session requires one IAM role and the cluster was created with another IAM role, make sure the AWS CLI assumes the correct IAM role (for example, using environment variables), and use “aws sts get-caller-identity” to validate the IAM identity being used.
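A sketch of that check, which fails fast before ssh or kubectl runs under the wrong identity. The function name `assert_aws_role` and the role and profile names in the usage example are hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: fail if the AWS CLI is not running as the expected role.
#   $1 - IAM role name you expect to be assumed, e.g. the role that created the cluster
assert_aws_role() {
  local expected_role="$1" arn
  arn="$(aws sts get-caller-identity --query Arn --output text)" || return 1
  # An assumed role appears as arn:aws:sts::<account>:assumed-role/<role>/<session>
  case "$arn" in
    *":assumed-role/${expected_role}/"*) return 0 ;;
    *)
      echo "AWS CLI identity is ${arn}, expected role ${expected_role}" >&2
      return 1
      ;;
  esac
}
```

For example, export AWS_PROFILE=cluster-admin (a hypothetical profile), then assert_aws_role eks-admin && kubectl get node.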

What about AKS in Azure

I touched on this in my Azure notes in 2021 and researched it again. Unfortunately, the options are still fairly limited.

The first option is a managed service called “Azure Bastion”, which requires a public IP and a dedicated subnet named exactly AzureBastionSubnet, as well as some additional requirements. I’m not impressed with these requirements for something that is meant to be a managed service. The other option is essentially to DIY a jump box. The idea is the same: put the jump box in a public subnet that is routable to the private subnets. When you need to connect to private VMs, go through the jump box first.

Apart from having to put the bastion VM on a public subnet, the SOCKS5 proxy pattern discussed above still works. Exposing a bastion host isn’t ideal, but it still reduces the attack surface significantly compared to exposing the cluster endpoints of all Kubernetes API servers.
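A minimal sketch of that combination for AKS, assuming the Azure CLI is logged in and the DIY jump box is reachable over SSH at a public IP and can resolve and route to the private API server. The function name, arguments and default port are hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: reach a private AKS API server through a DIY jump box
# that has a public IP, reusing the SOCKS5 pattern from the AWS section.
#   $1 - resource group   $2 - AKS cluster name
#   $3 - jump box login, e.g. azureuser@203.0.113.10 (placeholder address)
#   $4 - local SOCKS5 port (defaults to 1080)
aks_via_jumpbox() {
  local rg="$1" cluster="$2" jumpbox="$3" port="${4:-1080}"
  # Merge the cluster's credentials into ~/.kube/config
  az aks get-credentials --resource-group "$rg" --name "$cluster" || return 1
  # Background SOCKS5 proxy through the jump box (key-based SSH on port 22)
  ssh -f -q -N -D "$port" "$jumpbox" || return 1
  # Point kubectl at the proxy for the rest of this shell session
  export HTTPS_PROXY="socks5://localhost:${port}"
}
```

After aks_via_jumpbox my-rg my-aks azureuser@203.0.113.10, kubectl get node would go through the jump box, exactly as in the EKS example.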

Summary

Many immature Kubernetes configurations expose the cluster endpoint publicly. Having the cluster endpoint in a private subnet greatly improves security posture. In my opinion, there are very few situations where the cluster endpoint must be exposed publicly, and a private endpoint should be mandatory for all Kubernetes clusters. In the next post, I cover how to create a ROSA cluster with a private endpoint.