The most common server CPU architectures today are amd64 (aka x86_64) and arm64. AMD developed the former, hence the name amd64; it is also known as x86_64 (or x64 for short), and Intel calls its implementation Intel 64. In terms of compatibility, they are the same. In general, the arm64 architecture consumes less power, which is why mobile devices adopted it first. Its power efficiency is now driving its adoption in computing infrastructure. For example, Apple moved the MacBook to its M1 processor in 2020. Amazon’s Graviton processor, first released in 2018, is now in its third generation. In 2022, Azure launched VMs based on the Ampere Altra processor, and GCP introduced Arm-based VMs. Lower power consumption ultimately means lower computing cost.

I expect more and more workloads to move gradually to Arm-based servers. With Kubernetes, we will most likely run a fleet of compute nodes with mixed CPU architectures. Let’s look at what arm64 adoption entails for workloads on Kubernetes.

ARM64 architecture

The Graviton processor is built on 64-bit Arm Neoverse cores and is designed for cloud-native workloads. Currently, most arm64 instances on AWS use the Graviton2 processor. This AWS blog post announced Graviton3-based general-purpose (m7g) and memory-optimized (r7g) EC2 instances. At the bottom, a chart compares the performance of Graviton3 with Graviton2, x86 and M6g instances. We can expect services that support Graviton2 to start supporting Graviton3 within a few months.

In the serverless landscape, you can specify the CPU architecture of a Lambda function. If your runtime supports arm64, you get up to 34% better price performance, according to this post. In late 2021, AWS Fargate for ECS also started to support Graviton2 processors for arm64 workloads. Fargate for EKS does not support Graviton2 yet, but support is on the roadmap.

On the Kubernetes side, I’ve previously discussed how to get container registries to serve platform-specific images, so we can assume that our image registries support the OCI image index (aka fat manifest), which points to platform-specific images for arm64 and amd64. In this post, I’ll focus on nodes and workloads, using EKS as an example. Since the control plane is a managed service, we will focus on the worker nodes, where the Pods run.

Worker Node

The cloudkube project uses Terraform to build our test EKS cluster. One of the node groups consists of the new m7g.large instances (Graviton3 processor). For this node group, the AMI type must be AL2_ARM_64, so that node provisioning picks up an EKS-optimized AMI for arm64. The IAM role of each node has an SSM policy attached, so we can connect to each node through Session Manager and the pre-installed SSM agent. On the m7g node, I would like to check a few things:

- The node CPU

- The containerd package

- The kubelet executable

All of them should be built for the node’s CPU architecture, as the following commands confirm:

$ lscpu | grep -i arch

Architecture: aarch64

$ yum list | grep containerd

containerd.aarch64 1.6.6-1.amzn2.0.2 @amzn2extra-docker

containerd-stress.aarch64 1.6.8-1.amzn2.0.1 amzn2extra-docker

$ file -b $(which kubelet)

ELF 64-bit LSB executable, ARM aarch64, version 1 (SYSV), dynamically linked (uses shared libs), BuildID[sha1]=5c7a059f13f8bece4ce30f3357d57631c28bdde2, for GNU/Linux 3.7.0, stripped
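Note that the kernel reports aarch64, while the kubernetes.io/arch node label and OCI image indexes say arm64 (and x86_64 versus amd64 on the other side). When comparing outputs from these commands with what Kubernetes reports, a small normalisation helps. Below is a minimal Python sketch, assuming only the two architectures discussed here; the mapping table and the names oci_arch and node_arch are illustrative, not part of any tool.

```python
import platform

# Kernel-style machine names (uname -m, lscpu) mapped to the names used by
# OCI image indexes and the kubernetes.io/arch label. Only the two
# architectures discussed in this post are covered (illustrative table).
KERNEL_TO_OCI_ARCH = {
    "aarch64": "arm64",
    "arm64": "arm64",   # macOS on Apple silicon reports arm64
    "x86_64": "amd64",
    "amd64": "amd64",   # Windows (AMD64) and FreeBSD report amd64
}


def oci_arch(machine: str) -> str:
    """Translate a uname -m style machine name to its OCI/Kubernetes name."""
    try:
        return KERNEL_TO_OCI_ARCH[machine.lower()]
    except KeyError:
        raise ValueError(f"unsupported machine type: {machine!r}") from None


def node_arch() -> str:
    """Architecture of the machine running this script, in OCI terms.

    On Linux, platform.machine() returns the same value as uname -m.
    """
    return oci_arch(platform.machine())
```

On the m7g node, node_arch() should return "arm64", which is the value you would expect to see in the kubernetes.io/arch label of that node.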

We can also check image pulling with containerd. First, let’s find out which image is the correct one by examining the image index with manifest-tool:

$ manifest-tool inspect digihunch/colorapp:v0.1

The index in the response tells us that:

- the digest of the whole image index starts with 0fa335;

- the manifest digest for the arm64 variant starts with 7479df;

- the manifest digest for the amd64 variant starts with 1bd198.

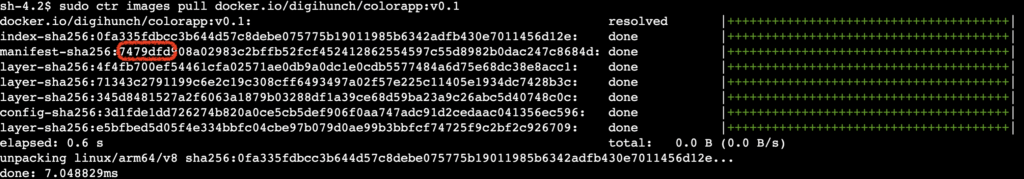

Now if we pull the image with the ctr utility (“sudo ctr image pull”), we can see the correct digest for arm64:

By default, “ctr image pull” pulls only the image for the node’s own platform; this can be overridden with --platform or --all-platforms. On an amd64 node, I get the corresponding amd64 results as well.
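Conceptually, the runtime reads the image index and picks the manifest entry whose platform matches the node. The sketch below is a simplified Python model of that selection, not containerd’s actual platform matcher (which also normalises and ranks variants such as arm64/v8). The index contents and digests are placeholders, and select_manifest is a hypothetical name.

```python
import json

# A trimmed-down image index of the kind a registry returns for a multi-arch
# tag. The digests are placeholders, not the real colorapp digests.
INDEX_JSON = """
{
  "schemaVersion": 2,
  "mediaType": "application/vnd.oci.image.index.v1+json",
  "manifests": [
    {"mediaType": "application/vnd.oci.image.manifest.v1+json",
     "digest": "sha256:aaaa", "size": 1000,
     "platform": {"architecture": "amd64", "os": "linux"}},
    {"mediaType": "application/vnd.oci.image.manifest.v1+json",
     "digest": "sha256:bbbb", "size": 1000,
     "platform": {"architecture": "arm64", "os": "linux", "variant": "v8"}}
  ]
}
"""


def select_manifest(index: dict, os_name: str, arch: str) -> str:
    """Return the digest of the first manifest whose platform matches os/arch.

    Simplification: the variant field is ignored here.
    """
    for entry in index.get("manifests", []):
        platform = entry.get("platform", {})
        if platform.get("os") == os_name and platform.get("architecture") == arch:
            return entry["digest"]
    raise LookupError(f"no manifest for {os_name}/{arch}")


index = json.loads(INDEX_JSON)
print(select_manifest(index, "linux", "arm64"))  # sha256:bbbb
print(select_manifest(index, "linux", "amd64"))  # sha256:aaaa
```

This is why the same tag resolves to the 7479df… manifest on the m7g node and the 1bd198… manifest on an amd64 node, while the index digest (0fa335…) stays the same on both.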

Workload

Next, let’s make sure all workloads are using the correct images. We’ll deploy the colorapp and examine it along with some system Pods.

For example, the aws-node DaemonSet runs one Pod per node. To verify the distribution, we can run the uname command in each Pod to check its CPU architecture:

$ kubectl -n kube-system get po -l app.kubernetes.io/name=aws-node -o name | xargs -I{} kubectl -n kube-system -c aws-node exec {} -- uname -m

The command above verifies that Pods are scheduled to arm64 nodes correctly. However, it does not prove that the arm64-specific image is being used. I find it fairly tricky to validate that a container on an arm64 node is using the intended image, and I have not found a kubectl command that does it. A plausible candidate is the imageID field under the container status. For the kube-proxy Pods in the kube-system namespace, there are two distinct values. However, for the colorapp Pods there is only one value, in a different format, even though they are scheduled to nodes of both architectures.

$ kubectl -n kube-system get po -l k8s-app=kube-proxy -o yaml | grep 'imageID:' | sort | uniq

imageID: sha256:04beb3b811d345722d689a70a30bafa27e0edd412613bee76c3648b024b25744

imageID: sha256:b9b6705d4ad6be861f0e98b7325e5106715ef21a82692f7e8a005a280f159518

$ kubectl -n default get po -l app=color -o yaml | grep 'imageID:' | sort | uniq

imageID: docker.io/digihunch/colorapp@sha256:0fa335fdbcc3b644d57c8debe075775b19011985b6342adfb430e7011456d12e

This issue reports the inconsistency, but unfortunately it did not get much attention. The reporter also asked for the sha256 of the actual image to be exposed. However, the Kubernetes developers regard this as a CRI issue. Currently, we cannot tell from kubectl exactly which image is used.

As a workaround, we can log on to the node and export the image there:

$ sudo ctr -n k8s.io image list

$ sudo ctr -n k8s.io image export /tmp/x.tar docker.io/digihunch/colorapp@sha256:0fa335fdbcc3b644d57c8debe075775b19011985b6342adfb430e7011456d12e

In the exported tar file, review manifest.json, which contains the layer digests. These layer digests should match those of the platform-specific image.
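The comparison can be scripted instead of done by eye. A minimal Python sketch, assuming the exported tar contains a Docker-compatible manifest.json whose Layers entries are blob paths such as blobs/sha256/<hex> (check your own archive first), and that you have already obtained the platform-specific manifest and parsed it into a dict. The function names are hypothetical.

```python
import json
import tarfile


def exported_layer_digests(tar_path: str) -> list[str]:
    """Read manifest.json from an exported image tar and return layer digests.

    Assumes each "Layers" entry is a blob path like "blobs/sha256/<hex>";
    the digest is rebuilt from the last two path components.
    """
    with tarfile.open(tar_path) as tar:
        member = tar.extractfile("manifest.json")  # KeyError if absent
        if member is None:
            raise FileNotFoundError("manifest.json is not a regular file")
        entries = json.load(member)
    digests = []
    for entry in entries:  # one entry per exported image
        for layer_path in entry["Layers"]:
            algo, hex_digest = layer_path.split("/")[-2:]
            digests.append(f"{algo}:{hex_digest}")
    return digests


def matches_platform_manifest(tar_path: str, platform_manifest: dict) -> bool:
    """True if the exported layers equal the layers of the given manifest."""
    expected = [layer["digest"] for layer in platform_manifest["layers"]]
    return exported_layer_digests(tar_path) == expected
```

On the m7g node, passing /tmp/x.tar and the arm64 manifest (the one whose digest starts with 7479df) should return True, and passing the amd64 manifest should return False.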

Utilities

Since a Pod always pulls the correct platform-specific image for the node it runs on, we do not need to worry about Helm charts. We just need to make sure the image reference in our container registry resolves to an image index that points to images for multiple architectures. For the same reason, we do not need to worry about Pod autoscaling.

When it comes to node autoscaling, all nodes should carry the kubernetes.io/arch and kubernetes.io/os labels, and node autoscalers such as Karpenter should support them. However, we generally prefer to expand the arm64 node group because it is cheaper. With Cluster Autoscaler, we can use the priority-based expander. With Karpenter, we can give the provisioner for the arm64 nodes a higher weight.
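The priority expander is enabled with --expander=priority and reads a ConfigMap named cluster-autoscaler-priority-expander whose priorities key maps an integer priority to a list of regular expressions matched against node group names; the highest matching priority wins. The Python sketch below models that documented selection rule, not the autoscaler’s Go code. The node group names and regexes are hypothetical, and tie-breaking is left out.

```python
import re

# Hypothetical priorities: higher number = preferred. Patterns are matched
# against node group names (unanchored search in this sketch).
PRIORITIES = {
    50: [r"arm64"],   # prefer the cheaper Graviton node groups
    10: [r".*"],      # fall back to any other node group
}


def pick_node_groups(candidates: list[str], priorities: dict[int, list[str]]) -> list[str]:
    """Return the candidates that match the highest priority with any match.

    If several groups tie, all of them are returned; if nothing matches,
    the candidates are returned unchanged (a simplification).
    """
    for priority in sorted(priorities, reverse=True):
        patterns = [re.compile(p) for p in priorities[priority]]
        matched = [g for g in candidates if any(p.search(g) for p in patterns)]
        if matched:
            return matched
    return candidates


print(pick_node_groups(["eks-amd64-ng", "eks-arm64-ng"], PRIORITIES))  # ['eks-arm64-ng']
```

When both node groups could fit the pending Pods, the arm64 group is chosen; the amd64 group is only picked when it is the sole candidate.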

Scheduling

With multi-arch images, the container runtime picks the right image variant. From a deployment perspective, we don’t need to worry about differences in CPU architecture between nodes. However, in some cases we still want to schedule certain Pods to nodes of one CPU architecture rather than the other. I call these platform-specific workloads.

For these, we need to control scheduling behaviour. There are two mechanisms: node affinity, and taints and tolerations.

Node affinity is based on node labels. According to the Well-Known Labels, Annotations and Taints reference, all Kubernetes distributions should label their nodes with kubernetes.io/arch and kubernetes.io/os. In our hybrid cluster, the arch value is either arm64 or amd64. When we add a node affinity of type requiredDuringSchedulingIgnoredDuringExecution to a Pod, the scheduler evaluates the matchExpressions under nodeSelectorTerms when placing the Pod on a node.
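Here is a sketch of such an affinity, written as a Python dict that mirrors the Pod spec’s affinity field (the YAML has the same structure), plus a simplified evaluator of the documented rules: terms are ORed, expressions inside a term are ANDed, and an empty term matches no node. Only the In, NotIn, Exists and DoesNotExist operators are modelled; matchFields, Gt and Lt are left out. This is an illustration, not the scheduler’s code.

```python
# Pod spec "affinity" fragment requiring Linux arm64 nodes.
ARM64_AFFINITY = {
    "nodeAffinity": {
        "requiredDuringSchedulingIgnoredDuringExecution": {
            "nodeSelectorTerms": [
                {"matchExpressions": [
                    {"key": "kubernetes.io/arch", "operator": "In", "values": ["arm64"]},
                    {"key": "kubernetes.io/os", "operator": "In", "values": ["linux"]},
                ]}
            ]
        }
    }
}


def expression_matches(expr: dict, labels: dict[str, str]) -> bool:
    """Evaluate one matchExpression against a node's labels."""
    key, op = expr["key"], expr["operator"]
    values = expr.get("values", [])
    if op == "In":
        return key in labels and labels[key] in values
    if op == "NotIn":
        return key not in labels or labels[key] not in values
    if op == "Exists":
        return key in labels
    if op == "DoesNotExist":
        return key not in labels
    raise ValueError(f"operator {op} is not handled in this sketch")


def node_satisfies(affinity: dict, labels: dict[str, str]) -> bool:
    """True if any nodeSelectorTerm has all of its expressions satisfied."""
    required = affinity["nodeAffinity"]["requiredDuringSchedulingIgnoredDuringExecution"]
    for term in required["nodeSelectorTerms"]:
        exprs = term.get("matchExpressions", [])
        # An empty term matches no node.
        if exprs and all(expression_matches(e, labels) for e in exprs):
            return True
    return False
```

With these rules, an m7g node labelled kubernetes.io/arch=arm64 satisfies ARM64_AFFINITY, while an amd64 node does not.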

When a Pod has many nodeSelectorTerms, sorting through the logic can be brain-twisting. In that case, we can use taints and tolerations instead. The idea is that once we taint a node with the NoSchedule effect, the scheduler will not schedule any Pod to that node unless the Pod has a matching toleration.
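The matching rule fits in a few lines. Below is a simplified Python sketch using a taint like arch=arm64:NoSchedule. It follows the documented rules (Equal is the default operator, Exists ignores the value, an empty key with Exists tolerates every taint, an empty effect matches every effect) but is not the scheduler’s implementation, and the function names are hypothetical.

```python
def tolerates(toleration: dict, taint: dict) -> bool:
    """Return True if a single toleration matches a single taint."""
    # An empty effect in the toleration matches every effect.
    if toleration.get("effect") and toleration["effect"] != taint["effect"]:
        return False
    operator = toleration.get("operator", "Equal")  # Equal is the default
    key = toleration.get("key", "")
    if operator == "Exists":
        # Exists ignores the value; an empty key tolerates every taint.
        return key == "" or key == taint["key"]
    return key == taint["key"] and toleration.get("value", "") == taint.get("value", "")


def can_schedule(tolerations: list[dict], taints: list[dict]) -> bool:
    """A Pod fits only if every NoSchedule/NoExecute taint is tolerated.

    PreferNoSchedule is only a soft preference, so it is ignored here.
    """
    hard = [t for t in taints if t["effect"] in ("NoSchedule", "NoExecute")]
    return all(any(tolerates(tol, taint) for tol in tolerations) for taint in hard)


ARM64_TAINT = {"key": "arch", "value": "arm64", "effect": "NoSchedule"}
ARM64_TOLERATION = {"key": "arch", "operator": "Equal", "value": "arm64", "effect": "NoSchedule"}
print(can_schedule([], [ARM64_TAINT]))                  # False
print(can_schedule([ARM64_TOLERATION], [ARM64_TAINT]))  # True
```

The ARM64_TOLERATION dict has the same shape as an entry under the Pod spec’s tolerations field.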

In this post, the author customized the bootstrap script so that node provisioning automatically taints arm64 nodes with arch=arm64:NoSchedule. Alternatively, we can taint a node manually:

$ kubectl get no -o wide # check the KERNEL-VERSION column and taint the nodes showing aarch64

$ kubectl taint nodes ip-147-207-3-164.us-west-2.compute.internal arch=arm64:NoSchedule

This is a very useful technique when you’re not sure whether every workload image supports multiple architectures and you want to keep Pods without tolerations off the arm64 nodes. A Pod cannot be scheduled on those nodes until you have verified its container images and added the corresponding toleration.
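Instead of reading the KERNEL-VERSION column by eye, the node list can be filtered on the kubernetes.io/arch label. A minimal Python sketch, assuming you feed it the parsed JSON output of kubectl get nodes -o json. It only builds the kubectl taint commands and leaves running them to you; the function names are hypothetical.

```python
def arm64_nodes(node_list: dict) -> list[str]:
    """Names of nodes whose kubernetes.io/arch label is arm64."""
    return [
        item["metadata"]["name"]
        for item in node_list.get("items", [])
        if item["metadata"].get("labels", {}).get("kubernetes.io/arch") == "arm64"
    ]


def taint_commands(node_list: dict, taint: str = "arch=arm64:NoSchedule") -> list[str]:
    """Build (but do not run) one kubectl taint command per arm64 node."""
    return [f"kubectl taint nodes {name} {taint}" for name in arm64_nodes(node_list)]
```

For example, taint_commands(json.loads(subprocess.check_output(["kubectl", "get", "nodes", "-o", "json"]))) lists one command per arm64 node. Relying on the label rather than the kernel version string keeps the check consistent with what the scheduler sees.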

Summary

Given its power efficiency, many workloads will gradually migrate to the arm64 architecture. However, software will take a while to catch up. For example, hyperkit does not yet support the M1 processor, so I still cannot use it as the Minikube driver on newer Macs. Hybrid architectures are here to stay, and we need to examine our software supply chain end to end.